12 Questions to Ask Before Hiring an AI Development Company

A practical guide for business leaders who want real results and positive ROI (not just hype).

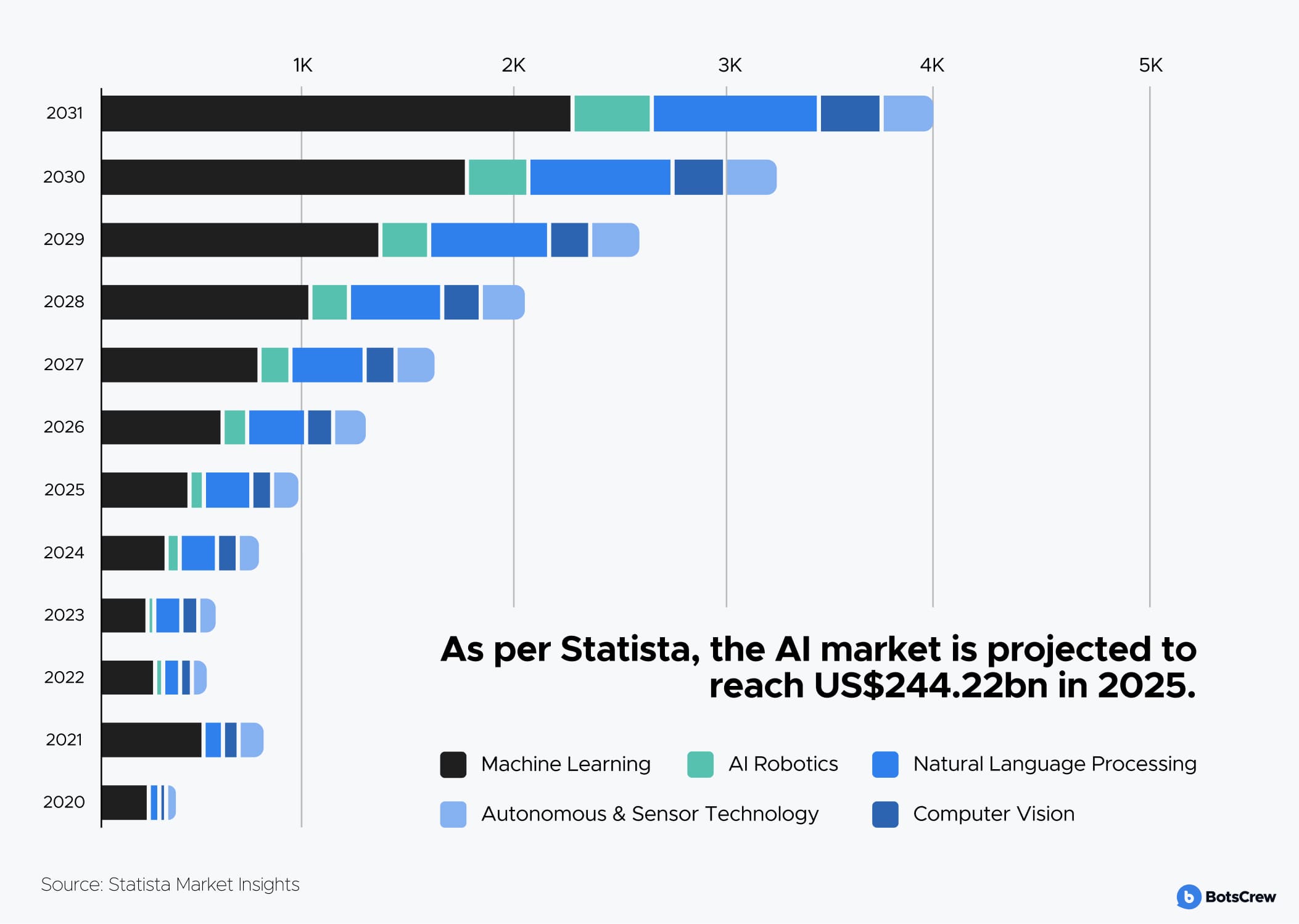

According to Statista, the AI market is expected to reach $244.22 billion by the end of 2025. Furthermore, it is projected to grow at a compound annual growth rate (CAGR) of 27% from 2025 to 2031, reaching a total volume of $1.01 trillion by 2031.
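As a quick sanity check on these figures, here is a minimal Python sketch that derives the growth rate implied by the two endpoints quoted above. The dollar amounts come from the text; the six-year compounding horizon (2025 to 2031) and the variable names are assumptions made for illustration.

```python
# Figures quoted in the text (USD billions).
start_value = 244.22   # projected market size, end of 2025
end_value = 1010.0     # projected market size in 2031 ($1.01 trillion)
years = 2031 - 2025    # assumption: six compounding periods

# CAGR = (end / start) ** (1 / years) - 1
implied_cagr = (end_value / start_value) ** (1 / years) - 1

# Compounding the start value forward at that rate recovers the end value.
projected = start_value * (1 + implied_cagr) ** years

print(f"Implied CAGR: {implied_cagr:.1%}")
print(f"Projected 2031 size: ${projected:,.0f} billion")
```

The implied rate comes out just under 27%, which is consistent with the rounded CAGR cited above.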

The right development partner can help you tap into that growth, boosting efficiency, sparking innovation, and giving your business a competitive edge. With the wrong one, you risk wasting budget, missing opportunities, or worse, ending up with a flashy model no one actually uses.

This guide gives you 12 must-ask questions for evaluating any AI development company — including us — before signing a contract. You'll also find red flags to watch for and insights into how our team handles common challenges.

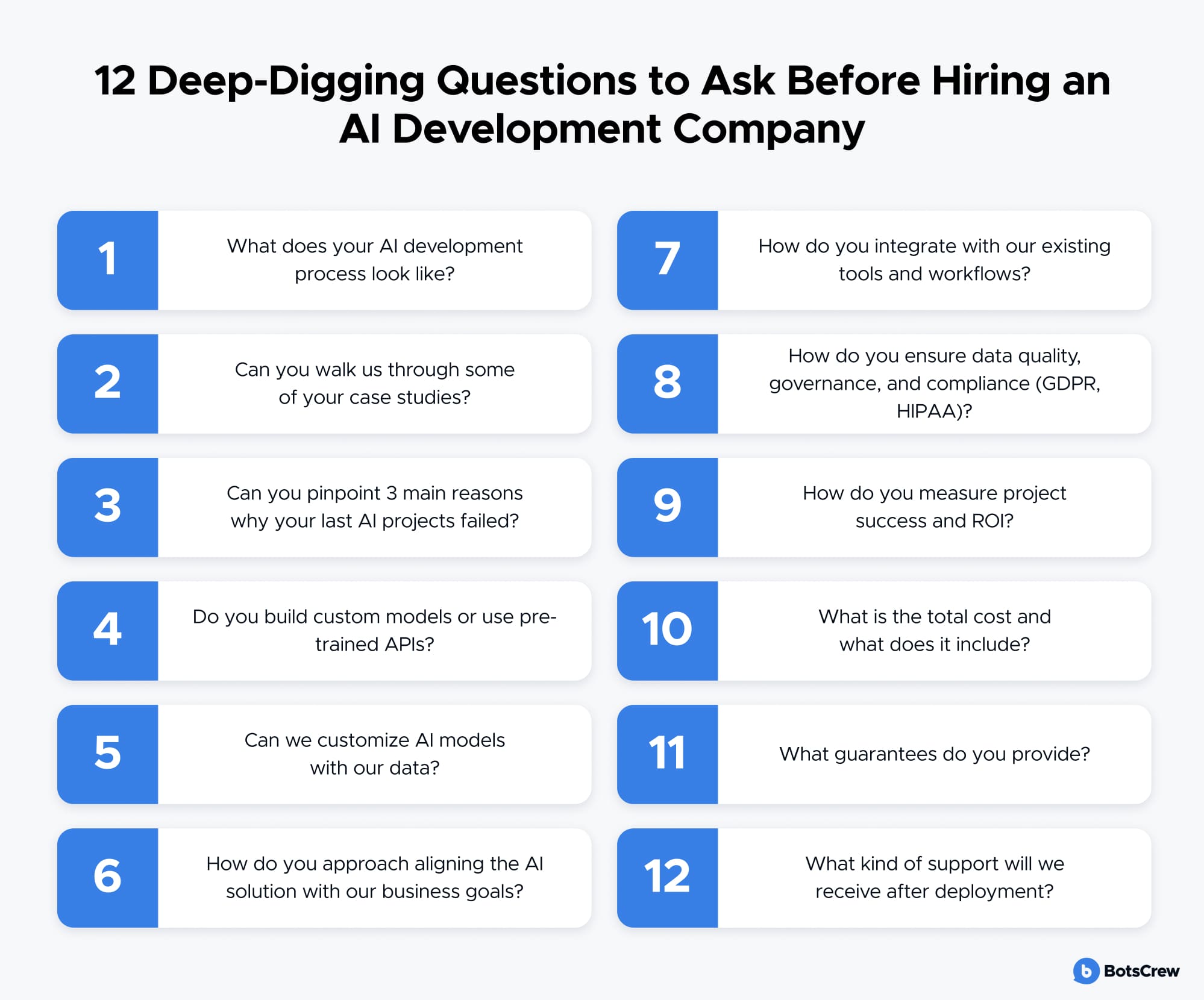

What Should You Ask an AI Development Company Before Hiring and Signing a Contract?

Before making any hiring decision, it is essential to understand how a potential partner works, what they bring to the table, and how they align with your business goals. The following questions will guide you through evaluating any AI development company with clarity and confidence.

#1. What does your AI development process look like?

Every vendor has its own internal workflows and methodologies — from the way they collect requirements to how they test, release, and maintain solutions. Before you commit to a partnership, take the time to understand how structured and transparent their process really is. A professional and well-managed vendor process typically includes:

— Clear phases: discovery, planning, development, testing, deployment, and support

— Defined roles and responsibilities: who will be on the team and what they will do

— Leadership and oversight: presence of a project manager or account lead on their side.

If your goal is to minimize involvement, you need a partner who doesn't just execute tasks but takes full ownership of the outcome. That means organizing and managing the project internally, solving problems proactively, and keeping everything aligned with your business goals, without needing constant input from your side.

Here is where many vendors fall short: they don't offer true end-to-end project management. If there is no project or account manager provided, someone on your internal team will need to step in — tracking progress, running meetings, managing the backlog, and unblocking issues. For many organizations, especially large enterprises, that is an unnecessary burden and a potential risk.

#2. Can you walk us through some of your case studies?

Case studies are more than just marketing materials — they are proof of capability, insight into a team's problem-solving process, and evidence of real-world impact. AI projects often operate in uncertain and complex environments. Unlike traditional software, AI solutions require:

- Experimentation and hypothesis testing

- Data handling and preprocessing

- Iterative model training and tuning

- Integration with existing systems

- Continuous adaptation to changing inputs and requirements.

A well-documented case study can show how a vendor approaches each of these steps. More importantly, it highlights how the team responds when things don't go as planned — which is often the case in AI development.

A strong case study should clearly outline:

— The problem. What was the client trying to solve? Was it a prediction task, process automation, a personalization engine, or document classification?

Look for evidence that the vendor understood the business problem — not just the technical request.

— The approach & solution. How did they structure the project? What models or algorithms were used? How was the data handled? Did they run pilots or POCs before scaling?

Pay attention to how the team navigated ambiguity and iterated on the solution.

— The results & outcomes. What was the measurable impact? Did the AI solution reduce costs, improve speed, increase accuracy, or unlock new capabilities?

— The process. How was the project managed? What tools were used? How frequent were the updates and check-ins?

Look for patterns in feedback, especially around professionalism, flexibility, and reliability. If possible, ask to see a product demo that shows the system in action.

Red flags to watch out for:

❌ Vague or generic case studies. If the examples are high-level and lack specifics, that may signal limited experience or a lack of transparency.

❌ Reluctance to share references. While some NDAs limit disclosure, an experienced vendor should have at least a few clients willing to vouch for them.

#3. Can you pinpoint 3 main reasons why your last AI projects failed?

Not every AI project reaches its full potential. Sometimes, it's not a complete failure, but the results fall short of expectations. This can happen even with experienced teams.

The safest path is iterative development. We start with a Discovery phase and a Proof of Concept (POC), then validate ideas in small, manageable chunks. We also define a clear set of test questions to measure model quality, and we pivot if something doesn't work as expected.
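To make the idea of "test questions" concrete, here is a minimal sketch of how such a check could look in Python. Everything in it is hypothetical: the questions, the keyword-based pass criterion, the `stub_model` function standing in for a real model call, and the 80% threshold are assumptions for illustration, not a description of any vendor's actual tooling.

```python
from typing import Callable

# Hypothetical test set: each question lists keywords a good answer must contain.
TEST_QUESTIONS = [
    {"question": "What is your refund window?", "must_include": ["30 days"]},
    {"question": "Which plans include phone support?", "must_include": ["premium"]},
    {"question": "How do I reset my password?", "must_include": ["reset link", "email"]},
]

def stub_model(question: str) -> str:
    """Stand-in for a real model call; replace with your own client."""
    canned = {
        "What is your refund window?": "Refunds are accepted within 30 days.",
        "Which plans include phone support?": "Phone support is part of the Premium plan.",
        "How do I reset my password?": "Use the Forgot Password page.",
    }
    return canned.get(question, "")

def pass_rate(model: Callable[[str], str], cases: list[dict]) -> float:
    """Share of test questions whose answer contains every required keyword."""
    passed = 0
    for case in cases:
        answer = model(case["question"]).lower()
        if all(keyword.lower() in answer for keyword in case["must_include"]):
            passed += 1
    return passed / len(cases)

PIVOT_THRESHOLD = 0.8  # assumption: agreed with stakeholders before the POC

score = pass_rate(stub_model, TEST_QUESTIONS)
print(f"Pass rate: {score:.0%}")
if score < PIVOT_THRESHOLD:
    print("Below threshold: revisit data, prompts, or model choice.")
```

Keyword matching is deliberately crude. The point is that the pass criterion and the pivot threshold are written down before the POC starts, so "doesn't work as expected" has a measurable meaning.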

What to look for here:

- Honest reflection (not PR spin)

- Understanding of why the project failed (poor data, misaligned goals, wrong assumptions)

- Clear process changes made afterward.

#4. Do you build custom models or use pre-trained APIs?

In AI development, there is often a temptation to build a custom LLM from scratch. However, that path is expensive, time-consuming, and risky, and it doesn't guarantee results, especially if your goal is to launch a functional, scalable solution quickly and within budget.

What to look for when choosing a vendor:

✅ Do they have experience working with multiple LLMs?

✅ Do they consider client-specific constraints around security, licensing, and hosting?

✅ Can they justify their model selection, or do they simply follow industry trends?

✅ Can they adapt the architecture to your stack, instead of forcing you into theirs?

We at BotsCrew follow a strategy that balances quality, security, and efficiency: leveraging pre-trained models from leading vendors or the open-source community. We are model-agnostic — we don't push one specific model. Instead, we evaluate your job-to-be-done and then recommend the most suitable model. This could be:

- A commercial closed-source model (e.g., OpenAI, Azure OpenAI, Google Gemini, Anthropic Claude)

- A robust open-source model (e.g., Mistral, LLaMA, Mixtral) that can be self-hosted

- Or a combination of models, depending on the project's needs.

How we choose the right model:

— Solution goal — is it an internal assistant, a public-facing integration, or generative analytics?

— Architecture and hosting — will it run in the cloud (Azure, GCP) or on-premises?

— Licensing constraints — especially relevant for nonprofit or public sector clients.

In one of our projects for a team of journalists, data privacy was non-negotiable. Even in the case of legal action, no information could be disclosed. That ruled out OpenAI, Azure, and Google entirely. Instead, we evaluated self-hosted models such as Mistral and LLaMA, which provided full control over the infrastructure, and ensured strict anonymization.

🚩 Red flags:

- The vendor promotes only one model or their proprietary tech

- No hands-on experience with open-source or self-hosted models

- No clear framework for assessing your job-to-be-done

- Little to no attention to data privacy and compliance requirements.

#5. Can we customize AI models with our data?

Fine-tuning a model with your proprietary data — whether it's customer support chats, product documentation, medical records, legal content, or transaction logs — can increase relevance, accuracy, and value. Custom AI delivers more accurate answers in your niche domain, better alignment with brand tone and voice, and a competitive advantage through proprietary intelligence.

What to look for:

✅ Fine-tuning capabilities. Can the vendor fine-tune LLMs (OpenAI, Claude, Mistral) or use techniques like LoRA, RAG, or adapters?

✅ Support for private data pipelines. Can they securely ingest and transform your data (PDFs, SQL databases, emails, knowledge bases)?

✅ Balance between accuracy and efficiency. Do they suggest the right approach (full fine-tuning vs. retrieval-augmented generation) for your use case?

✅ Governance-aware customization. Are they able to fine-tune within data privacy boundaries (GDPR/HIPAA-compliant workflows)?
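The difference between fine-tuning and retrieval-augmented generation (RAG) is easier to see in code. The sketch below illustrates only the RAG side: the model itself is left unchanged, and relevant passages from your data are fetched and placed into the prompt at query time. The documents, the word-overlap ranking (a toy stand-in for a real vector search), and all function names are assumptions for illustration.

```python
import re

# Hypothetical internal documents; in practice these come from your knowledge base.
DOCUMENTS = [
    "Refunds are available within 30 days of purchase.",
    "Enterprise customers get a dedicated account manager.",
    "Password resets are handled through the account settings page.",
]

def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Rank documents by word overlap with the question (toy stand-in for vector search)."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]

def build_prompt(question: str, context: list[str]) -> str:
    """Assemble the prompt that would be sent to an unmodified, pre-trained model."""
    context_block = "\n".join(f"- {passage}" for passage in context)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {question}"
    )

question = "Are refunds available after purchase?"
prompt = build_prompt(question, retrieve(question, DOCUMENTS))
print(prompt)
```

With this approach, updating the knowledge base changes the answers without retraining anything. Fine-tuning, by contrast, changes the model's weights and is typically reserved for cases where tone, format, or domain behavior cannot be achieved through retrieval and prompting alone.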

🚩 Red flags:

- The vendor insists on using only a generic model, without evaluating domain-specific needs

- No track record of working with client-specific datasets

- Cannot articulate the difference between fine-tuning, prompt engineering, and RAG — or when to use each

- No security protocols for handling sensitive internal data.

#6. How do you approach aligning the AI solution with our business goals?

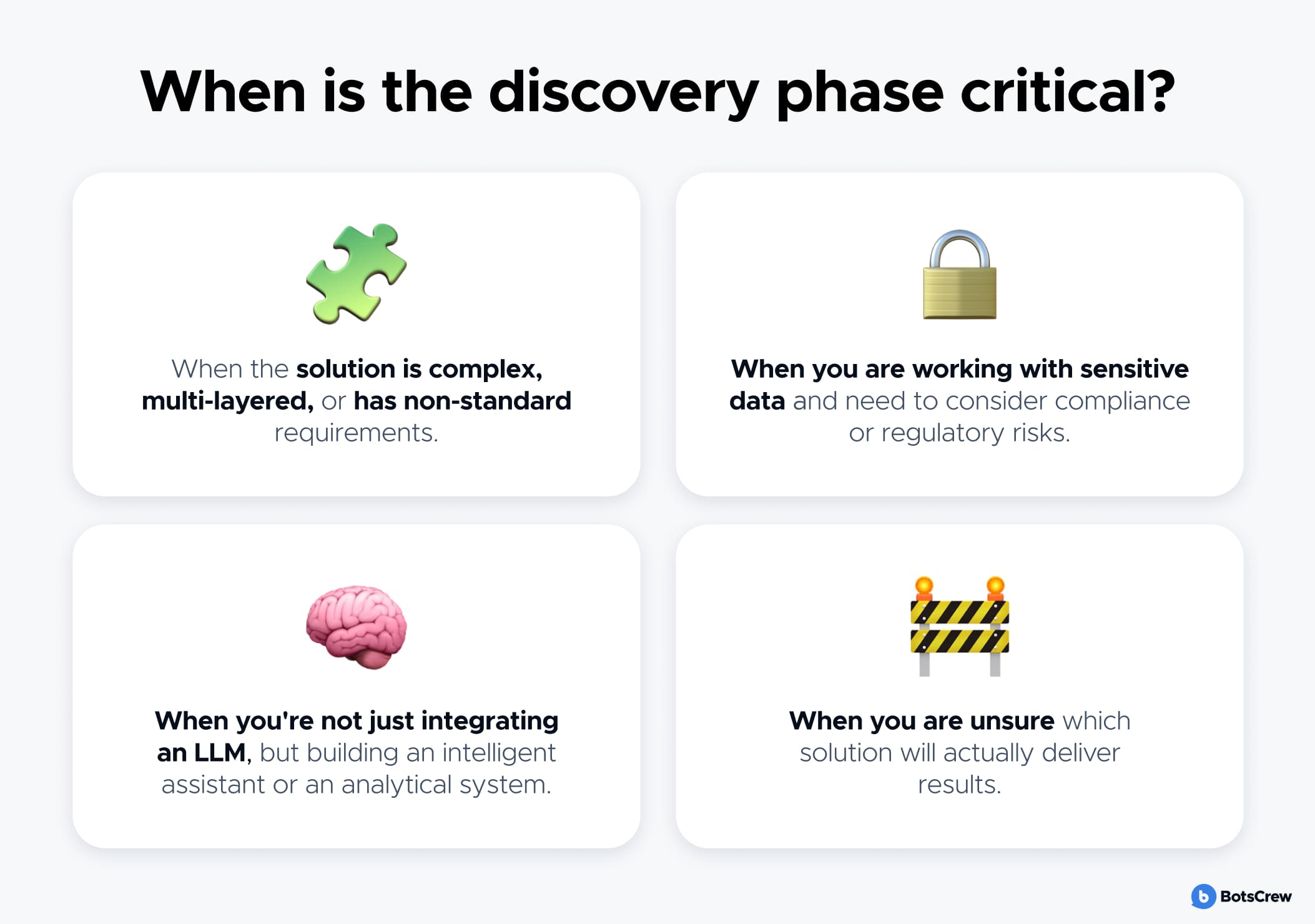

Without a clear understanding of the context, business goals, and constraints, even a technically sound solution can turn out to be useless. That's why a competent vendor should offer a discovery phase, a research stage where the vendor and client collaborate to clarify:

- What are the real problems that need to be solved?

- What data and processes already exist?

- What technical limitations, regulatory requirements, or architectural nuances must be considered?

- What possible solutions exist, and how can they be tested through a prototype?

This stage helps not only to avoid mistakes but also to save money by not spending the budget on the wrong implementation or unnecessary, expensive technologies.

What a quality AI vendor should offer:

✅ A quick discovery at the very beginning — an initial consultation or audit

✅ A complete discovery phase that includes research, prototyping, and hypothesis testing

✅ A clear explanation of why a specific approach is recommended, based on your context

✅ Flexibility — the ability to combine service expertise with ready-made product components.

🚩 Red flags:

- The vendor does not offer any discovery or research phase at all

- A "one-size-fits-all" approach applied to every client

- No explanation for why a specific architecture or model is being used.

#7. How do you integrate with our existing tools and workflows?

AI becomes impactful only when it fits seamlessly into your existing workflows, pushes insights directly into your operational tools, reduces context switching for your employees, and automates repetitive processes directly where they occur (e.g., CRM, EMR, ERP).

If you are in B2B sales, and your AI-driven lead scoring doesn't show up inside Salesforce or HubSpot, it will probably be ignored.

What to look for in a vendor:

✅ Integration expertise. The vendor should be able to integrate AI outputs into systems like CRM (Salesforce, HubSpot, Zoho), ERP (SAP, Oracle, NetSuite), EMR/EHR (Epic, Cerner, Meditech), BI tools (Power BI, Tableau, Looker), and more.

✅ Tech fluency. Look for real experience working with APIs and SDKs, building custom plugins, supporting frontend and backend integration (e.g., widgets, dashboards, alerts), and setting up secure role-based access when integrating into internal systems.

✅ Workflow awareness. A good vendor will ask: Who uses this tool? What action should AI trigger or enhance? How often does this data need to be refreshed?

🚩 Red flags:

- The vendor suggests running the AI system in parallel instead of integrating it

- No clear experience integrating with primary tools (Salesforce, SAP, etc.)

- No discussion of user experience or how teams will interact with the AI

- Forcing the adoption of a separate UI.

#8. How do you ensure data quality, governance, and compliance (e.g., GDPR, HIPAA)?

In sectors like healthcare, finance, or legal, using sensitive or personal data comes with legal responsibilities — and significant risk. An AI vendor that takes data governance seriously will help you avoid biased or low-quality predictions, regulatory violations, reputational damage, and unethical model behavior.

What to look for in a reliable vendor:

✅ Data lineage and audit trails. The team should be able to track where the data came from, how it was transformed, and where it was used.

✅ Compliance-by-design mindset. Does the vendor design for privacy from the beginning (e.g., anonymization, opt-in consent, data minimization)? Do they regularly collaborate with legal or compliance officers?

✅ Industry-specific governance playbooks. If you're in a regulated sector, look for vendors with playbooks tailored to GDPR, HIPAA, or CCPA. These should include retention schedules, access control, DPO sign-off, and breach response protocols.

🚩 Red flags:

- No mention of anonymization, consent, or retention. → These are the basics of working with sensitive data.

- They use production data for testing without safeguards. → This is a serious violation of data protection standards.
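The privacy-by-design practices mentioned in this section (anonymization, data minimization) can be sketched in a few lines. This is a simplified illustration under stated assumptions: the record layout, the salt value, and the function names are hypothetical, the email pattern is intentionally basic, and a salted hash is pseudonymization rather than full anonymization, so it does not by itself take data out of the scope of regulations such as GDPR.

```python
import hashlib
import re

EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")

def pseudonymize_id(user_id: str, salt: str) -> str:
    """Replace an identifier with a salted SHA-256 digest (truncated for readability)."""
    digest = hashlib.sha256((salt + user_id).encode("utf-8")).hexdigest()
    return digest[:12]

def redact_emails(text: str) -> str:
    """Mask anything that looks like an email address."""
    return EMAIL_PATTERN.sub("[EMAIL]", text)

def minimize(record: dict, allowed_fields: set[str]) -> dict:
    """Data minimization: keep only the fields the model actually needs."""
    return {key: value for key, value in record.items() if key in allowed_fields}

# Hypothetical support-ticket record.
record = {
    "user_id": "u-1029",
    "full_name": "Jane Doe",
    "message": "Please contact me at jane.doe@example.com about my invoice.",
}

safe_record = minimize(record, allowed_fields={"user_id", "message"})
safe_record["user_id"] = pseudonymize_id(safe_record["user_id"], salt="rotate-me")
safe_record["message"] = redact_emails(safe_record["message"])
print(safe_record)
```

A vendor working with sensitive data should be able to show where steps like these sit in their pipeline, and which additional controls (consent tracking, retention limits, access control) surround them.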

#9. How do you measure project success and ROI?

AI is an investment. Like any investment, it must deliver returns. Vendors who measure success only in terms of model accuracy are missing the bigger picture.

You need a partner who understands your goals and connects technical performance to real-world impact:

⏱ Time saved on manual tasks

💰 Operational cost reduction

📈 Revenue growth or upsell rates

🙋‍♂️ User engagement or retention

💪🏻 Process improvement or error reduction.

What to look for in a vendor:

✅ Early definition of success criteria. The vendor should help you clarify: What will change once the AI solution is in place? Who benefits and how? What does success look like at 3 months, 6 months, and 12 months?

✅ Alignment of AI metrics to business goals, such as accuracy and precision of model outputs (when relevant), time to decision or process acceleration, error reduction compared to manual tasks, adoption metrics (number of users actively engaging with the tool), and financial ROI (revenue impact or cost savings over time).

✅ Post-launch monitoring and continuous optimization. Do they offer dashboards or reports with real-time insights? Are KPIs tracked continuously and refined over time? Do they offer error analysis and retraining options?

✅ User-centric validation. Do they measure user satisfaction or NPS? Will they gather qualitative feedback for iteration?

🚩 Red flags:

- No metrics mentioned during project scoping

- Success is defined purely in technical terms, like model accuracy, without business context

- No plan for post-deployment tracking or continuous improvement

- Vague or generic definitions of value — "you'll save time" without specifying how much or how it is measured.
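To show what "specifying how much and how it is measured" can look like, here is a minimal first-year ROI sketch. The formula is the standard (benefit minus cost) divided by cost; every input value, the 48 working weeks, and the function name are hypothetical placeholders rather than benchmarks.

```python
def annual_roi(hours_saved_per_week: float, hourly_cost: float,
               build_cost: float, annual_run_cost: float,
               working_weeks: int = 48) -> float:
    """First-year ROI = (benefit - total cost) / total cost."""
    benefit = hours_saved_per_week * hourly_cost * working_weeks
    total_cost = build_cost + annual_run_cost
    return (benefit - total_cost) / total_cost

# Hypothetical inputs, for illustration only.
roi = annual_roi(
    hours_saved_per_week=120,   # ideally measured during a pilot
    hourly_cost=40.0,           # fully loaded cost per hour (USD)
    build_cost=150_000.0,       # one-off development cost (USD)
    annual_run_cost=30_000.0,   # hosting, licenses, support (USD)
)
print(f"First-year ROI: {roi:.0%}")
```

The value of writing this down early is less the number itself than the agreement it forces on which inputs will be measured, by whom, and over what period.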

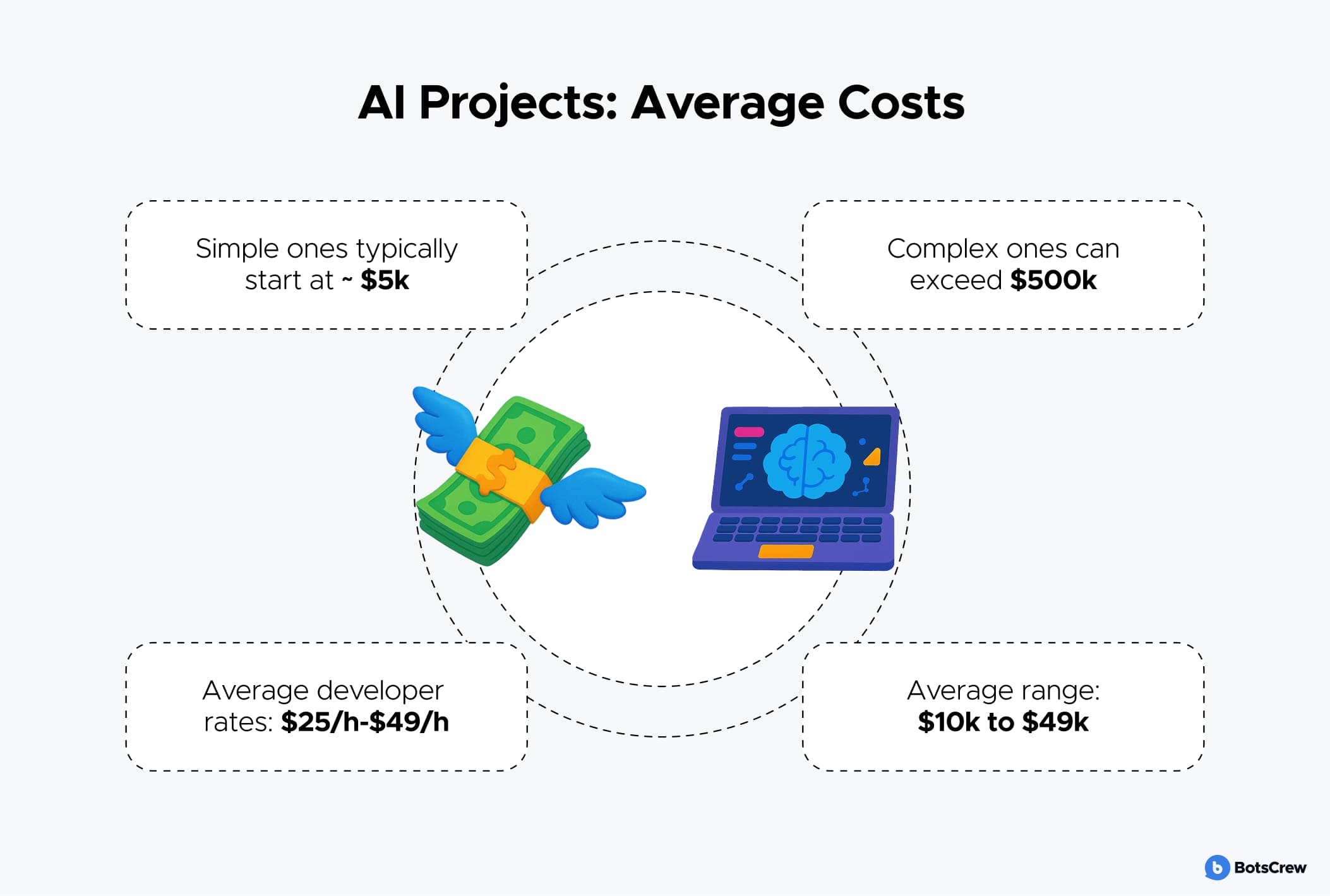

#10. What is the total cost and what does it include?

When selecting an AI development partner, cost is often one of the most important — and misunderstood — factors. Vendors may work under different pricing models. The most common ones include:

— Fixed price — the scope of work, deadlines, and budget are all agreed upon upfront.

This model works well for projects with clearly defined requirements.

— Time & Material (T&M) — billing is based on hourly or daily rates, and the requirements can evolve throughout the project.

This model suits complex, R&D-heavy, or dynamic projects.

— Part-time or Full-time engagement — where dedicated specialists are assigned to the project on a part-time or full-time basis.

Equally important are the structure behind that cost, the transparency of the budget, and the vendor's willingness to explain what makes it up. Final costs often exceed initial expectations, usually because of unpredictable factors related to real-world usage, technical architecture, or evolving business requirements that only become clear during implementation.

If these factors are not discussed at the outset, you may encounter hidden costs that significantly increase the total project budget. To prevent budget surprises, ask:

✅ How do you approach project estimation? Do you consider business requirements during the presale phase? Do you offer a discovery phase to make cost forecasts more accurate?

✅ What does your cost structure look like? What's included in the base estimate? What might be charged additionally?

✅ What third-party services or licenses do you use? Are they included in the cost, or will the client pay separately for things like OpenAI API usage, hosting, or integration platforms?

✅ What is your post-deployment support model? Who handles maintenance? How are support costs estimated? What metrics impact these costs (number of users, query volume, or logic complexity)?
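Usage-driven costs such as model API fees are a common source of budget surprises, so it helps to model them before signing. The sketch below estimates monthly API spend from user count and query volume. The function name, the scenarios, and especially the per-token price are placeholders, not real vendor pricing; substitute the figures from your vendor's current price list.

```python
def monthly_api_cost(active_users: int, queries_per_user: int,
                     tokens_per_query: int, price_per_1k_tokens: float) -> float:
    """Estimate monthly spend on a usage-billed model API."""
    total_tokens = active_users * queries_per_user * tokens_per_query
    return total_tokens / 1000 * price_per_1k_tokens

# All numbers below are placeholders, not real vendor prices.
scenarios = {
    "pilot": monthly_api_cost(50, 100, 1_500, 0.01),
    "rollout": monthly_api_cost(2_000, 100, 1_500, 0.01),
}
for name, cost in scenarios.items():
    print(f"{name}: ${cost:,.2f} per month")
```

Because the cost scales linearly with users and query volume in this simple model, a pilot budget can understate rollout costs by orders of magnitude. That is why it matters whether such fees are included in the estimate or billed separately.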

We conduct a discovery phase during the presale stage. In this phase, we collect business requirements, assess the necessary resources, and use that to create a realistic budget. We separate development costs from post-deployment support and are happy to help you forecast ongoing maintenance expenses, taking into account expected system load and architectural complexity.

#11. What guarantees do you provide?

Legal aspects play a critical role — especially when working with SaaS products or entrusting a vendor with a business-critical part of your operations. In the field of AI platforms and products (primarily SaaS), the following legal documents are typical and essential:

— SaaS Agreement (Software-as-a-Service Agreement). Defines the collaboration model, roles and responsibilities of both parties, licensing terms, payment conditions, platform usage rules, security policies, liability limitations, and more.

— SLA (Service Level Agreement). A must-have for any SaaS-based solution. It includes uptime guarantees, incident response times, maintenance commitments, and bug-fixing policy.

— Data Processing Agreement / Annex (when personal data is involved). Ensures compliance with GDPR or other applicable regional data regulations.

— Scope of Work / Acceptance Criteria (especially for custom development). Defines what exactly will be delivered, and what requirements must be met for the results to be accepted.

What to expect from a quality AI vendor:

✅ A clear and transparent legal document structure

✅ Availability of standard SaaS Agreement and SLA

✅ Clarity on what is guaranteed — and what's not

✅ Acknowledgement of limitations: for example, 100% uptime is unrealistic, but a structured, clearly defined incident response process is achievable.
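When reviewing an SLA, it helps to translate the uptime percentage into an actual downtime budget. The short sketch below does that arithmetic; the 30-day period and the function name are assumptions, and real SLAs may define the measurement window and exclusions (such as planned maintenance) differently.

```python
def allowed_downtime_minutes(uptime_percent: float, days: int = 30) -> float:
    """Downtime budget implied by an uptime guarantee over a billing period."""
    total_minutes = days * 24 * 60
    return total_minutes * (1 - uptime_percent / 100)

for uptime in (99.0, 99.9, 99.99):
    minutes = allowed_downtime_minutes(uptime)
    print(f"{uptime}% uptime allows about {minutes:.1f} minutes of downtime per 30 days")
```

Under these assumptions, a 99.9% guarantee still permits roughly 43 minutes of downtime per 30 days, so the incident response terms matter as much as the headline percentage.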

Discuss legal guarantees while finalizing your agreement, not after the fact. Doing so helps avoid future conflicts.

#12. What kind of support will we receive after deployment?

Only real-life usage after deployment reveals the true effectiveness of the solution. We provide a full range of support services and act as a dedicated team that stays with the client even after deployment. Our role is not just to keep things running, but to proactively analyze model responses, detect systemic issues, identify areas for optimization, and gradually elevate the solution to an even higher level of quality.

We offer different support models depending on how the AI solution was developed:

#1. The client is using our AI platform. In this case, we operate under a licensing model, where support is governed by the terms of the SaaS Agreement, including:

- Clearly defined uptime guarantees (SLA)

- Bug fixing and ongoing maintenance

- A dedicated account manager to support the client

- The original development team (in whole or in part) may be involved in support.

#2. We developed a custom solution from scratch. Together with the client, we define the level of involvement, areas of responsibility, and required resources. The support team typically includes:

- An account manager as the main point of contact

- A business analyst to review user journeys and chat logs

- A QA engineer to ensure consistent output quality

- An ML specialist who can adjust or retrain the model when needed.

This setup helps us to react quickly to business needs, adapt the model to new challenges, and ensure reliable long-term performance.

When choosing an AI vendor, be sure to ask:

✅ What does post-deployment support look like?

✅ Are there mechanisms in place to improve model output?

✅ Who will be responsible for supporting the project?

✅ How quickly and flexibly can the team respond to new needs?

If the answers are clear, structured, and backed by real examples, you are on the right track.

Final Thoughts

By thoroughly assessing a potential partner's expertise, experience, and approach, you boost your chances of landing a team that can ship results and scale your AI ambitions. Let the questions in this guide serve as a solid foundation for your evaluation process — but also rely on your judgment. Confidence in your chosen partner is essential. With the right crew, AI becomes not just a tool but a core growth lever for your business.

Want to make the right AI decision? We've worked with global brands, fast-scaling startups, and enterprise teams across retail, healthcare, and beyond.