Multi-Agent AI Systems: How to Move to Controlled Intelligent Systems

One AI agent can answer a question. But can it reason, plan, and review its own work? Discover how multi-agent AI systems transform a single LLM into a coordinated team capable of planning, acting, self-checking, and scaling to complex business scenarios.

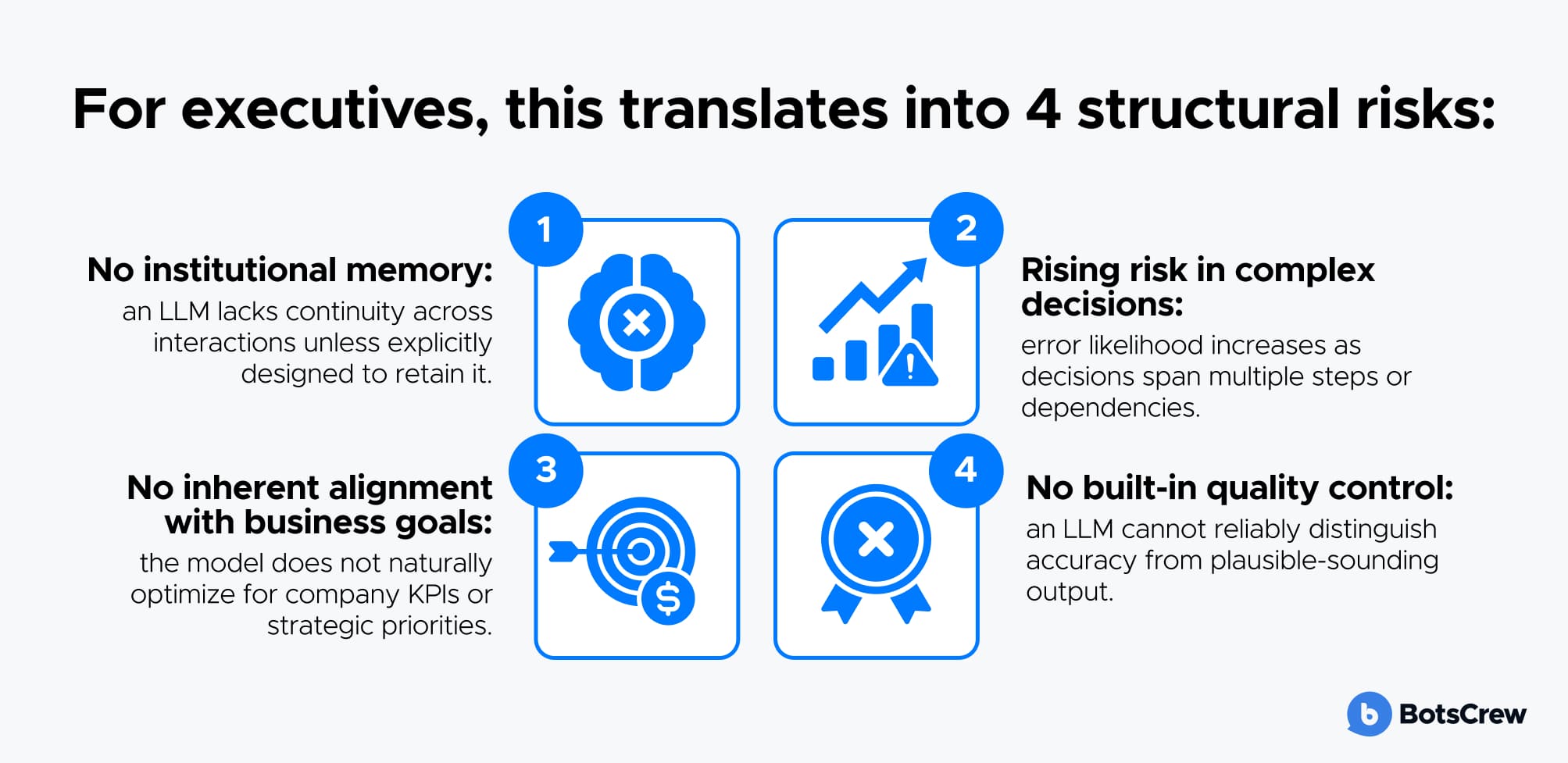

The first waves of LLM adoption in business were built around a simple assumption: take a powerful large language model, connect it to data, and it will start “thinking” on behalf of humans. In practice, this approach quickly ran into structural limitations.

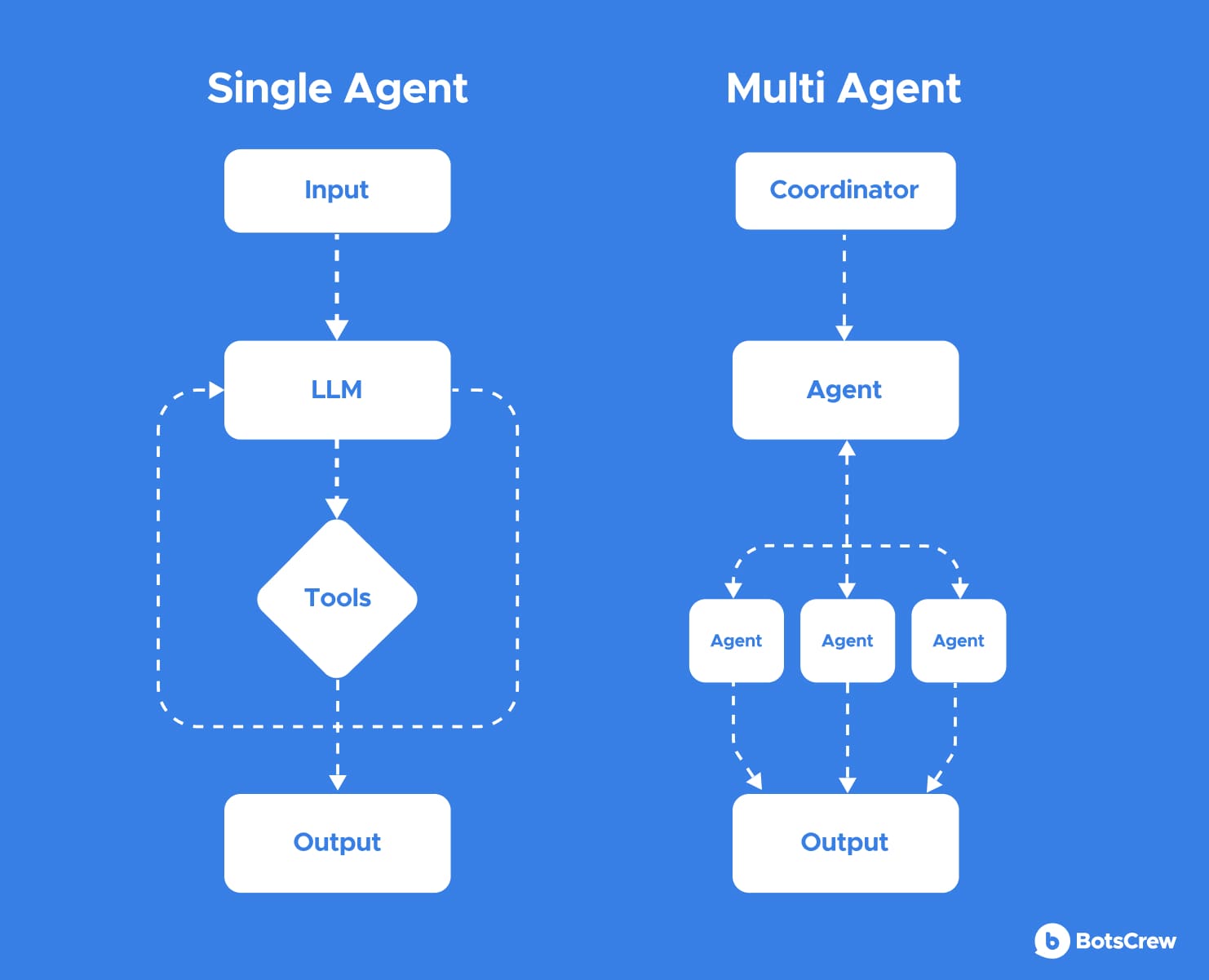

LLMs perform well as interfaces to knowledge, but they struggle to execute multi-step processes reliably without external control. In complex scenarios, such as business workflows, analytics, or operational decision-making, multi-agent AI systems become necessary. They are an architectural response to the fundamental limitations of LLMs.

Discover what multi-agent systems are, why they are becoming the foundation of enterprise AI, and how controlled, role-based agents turn LLMs into reliable decision-making systems.

In A Nutshell: What Are Multi-Agent AI Systems?

A multi-agent AI system is a setup where several AI agents work together, each with its own role, skills, and goals, to solve a problem that's too complex for a single model.

Example: In an e-commerce company, one AI agent tracks customer behavior, another predicts demand, a third optimizes pricing, and a fourth manages inventory. They constantly share insights and adjust decisions in real time — so prices drop before demand dips, stock refills before shelves go empty, and customers see the right offer at the right moment.

Why a Single Agent Is Still a Structural Risk

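As business requirements evolve, complexity increases along multiple dimensions: more steps, more context, more dependencies, and higher decision risk. A single agent has to plan, act, and judge its own output with no independent check between those steps, so an early mistake can silently carry through to the final result.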

As a result, any complex system built around a single LLM is forced to choose between constant human oversight and uncontrolled errors. Scaling the model doesn't solve these issues — it only makes mistakes more expensive.

Multi-Agent AI Systems as an Organizational Model

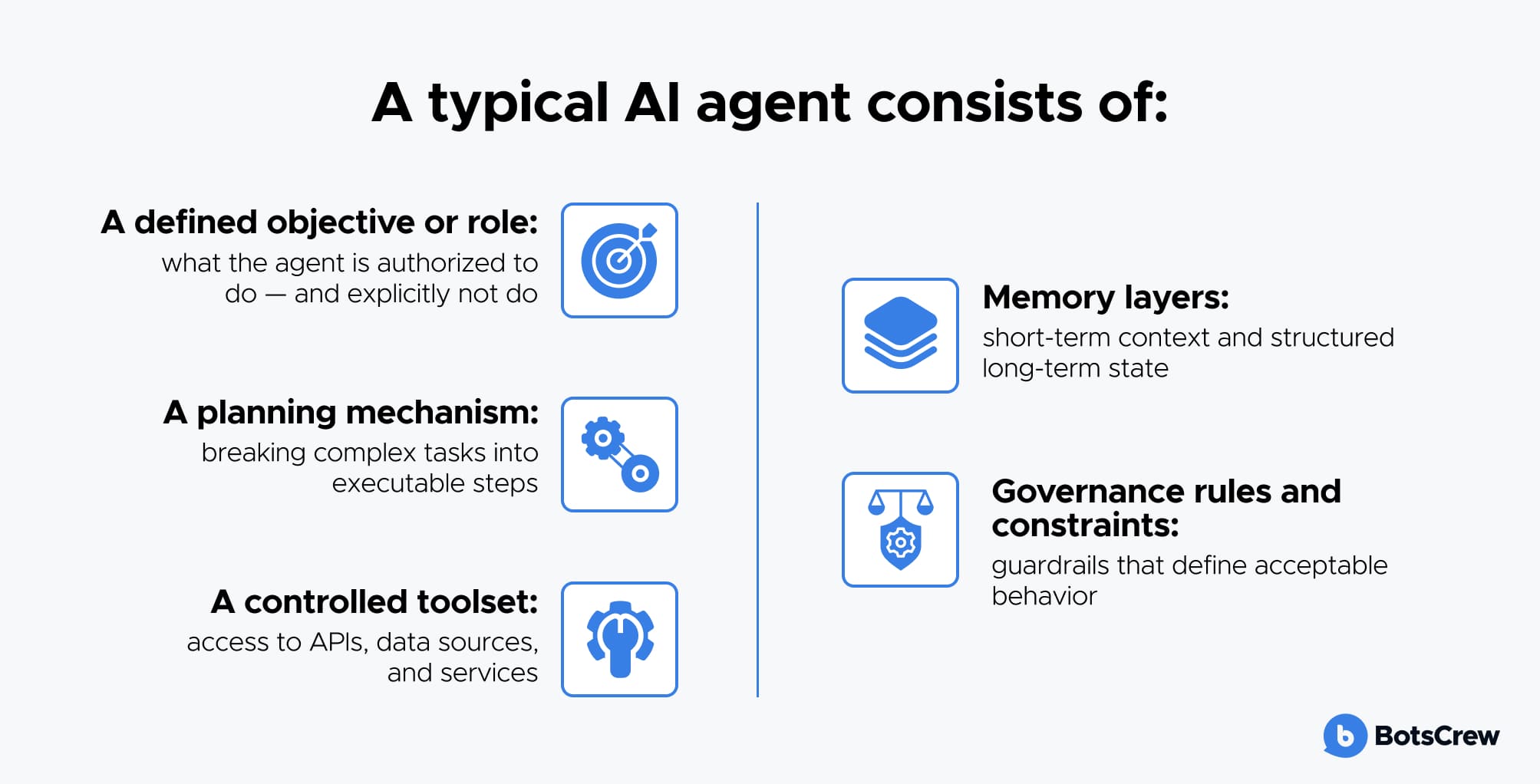

An AI agent is not a "smarter LLM," but a controlled software entity in which the LLM is only one component. A typical AI agent consists of:

- A defined objective or role: what the agent is authorized to do, and explicitly not do

- A planning mechanism: breaking complex tasks into executable steps

- A controlled toolset: access to APIs, data sources, and services

- Memory layers: short-term context and structured long-term state

- Governance rules and constraints: guardrails that define acceptable behavior.

Critically, an agent does not "think" on its own. It executes a constrained workflow in which the LLM is used to make local decisions: what to do next, how to interpret an outcome, and which tool to invoke. This architecture transforms the LLM from a source of risk into a controlled interface for reasoning and execution.
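To make this anatomy concrete, here is a minimal Python sketch of one constrained agent. It is not taken from any specific framework. The `decide` callable is a hypothetical stand-in for the LLM call: it only picks the next action. The surrounding code enforces the role, the toolset, a step budget, and a guardrail. Only short-term memory is modeled, and every name (`Agent`, `lookup_order`, `scripted_decide`) is illustrative.

```python
from dataclasses import dataclass, field
from typing import Callable

# A tool is any function that takes the task state and returns a result string.
Tool = Callable[[dict], str]

@dataclass
class Agent:
    role: str                                        # defined objective / mandate
    tools: dict[str, Tool]                           # controlled toolset: only these may run
    decide: Callable[[dict, list[str]], str]         # stand-in for the LLM's local decision
    max_steps: int = 5                               # guardrail: bounded execution
    memory: list[str] = field(default_factory=list)  # short-term context for one run

    def run(self, task: dict) -> list[str]:
        self.memory.clear()                          # each run starts with fresh short-term memory
        for _ in range(self.max_steps):
            # The "LLM" picks the next action (a tool name) or "done".
            action = self.decide(task, self.memory)
            if action == "done":
                return list(self.memory)
            if action not in self.tools:
                # Guardrail: refuse anything outside the agent's toolset.
                raise PermissionError(f"{self.role} may not call {action!r}")
            result = self.tools[action](task)
            self.memory.append(f"{action}: {result}")
        raise RuntimeError(f"{self.role} exceeded its step budget; escalate to a human")


def scripted_decide(task: dict, memory: list[str]) -> str:
    # Hypothetical policy standing in for an LLM: look up the order once, then stop.
    return "lookup_order" if not memory else "done"


agent = Agent(
    role="order-status assistant",
    tools={"lookup_order": lambda t: f"order {t['order_id']} shipped"},
    decide=scripted_decide,
)
print(agent.run({"order_id": 42}))  # → ['lookup_order: order 42 shipped']
```

The key design choice is that the model never executes anything directly. It only names an action, and the agent code decides whether that action is permitted and how many steps are allowed.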

In a mature multi-agent architecture:

→ Each agent has a narrowly defined mandate, limiting its authority and reducing the blast radius in case of failure.

→ Agents operate with partial context, preventing unintended global actions and enforcing architectural boundaries.

→ Critical outputs are reviewed or cross-validated by independent agents, improving reliability and catching hallucinations before they propagate.

→ Human escalation paths are explicit, ensuring that high-impact or ambiguous decisions are reviewed by accountable stakeholders.
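One simple way to enforce partial context is to give each agent a read-only projection of shared state. The projection contains only the fields its mandate needs. This is a sketch that assumes shared state is a plain dictionary. The agent names and field lists are hypothetical and borrow from the e-commerce example above.

```python
from types import MappingProxyType

# Hypothetical mandates: which fields of the shared state each agent may see.
CONTEXT_SCOPES = {
    "pricing_agent": {"sku", "current_price", "demand_forecast"},
    "inventory_agent": {"sku", "stock_level", "reorder_point"},
}

def scoped_context(agent_name: str, state: dict) -> MappingProxyType:
    """Return a read-only view of `state` limited to the agent's declared scope."""
    allowed = CONTEXT_SCOPES.get(agent_name, set())  # unknown agents see nothing
    return MappingProxyType({k: v for k, v in state.items() if k in allowed})

state = {
    "sku": "A-100", "current_price": 19.99, "demand_forecast": 320,
    "stock_level": 40, "reorder_point": 50, "customer_email": "x@example.com",
}
view = scoped_context("pricing_agent", state)
print(sorted(view))  # → ['current_price', 'demand_forecast', 'sku']
```

The pricing agent never sees customer contact data or stock levels. Because the view is read-only, it also cannot change state it does not own.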

From a C-level standpoint, this approach improves:

- Governance: clearer ownership of decisions and actions

- Scalability: new capabilities can be added without destabilizing the system

- Risk management: failures are contained, observable, and correctable.

Rather than slowing execution, this structure enables AI systems to operate faster and more safely, with enterprise-grade reliability.

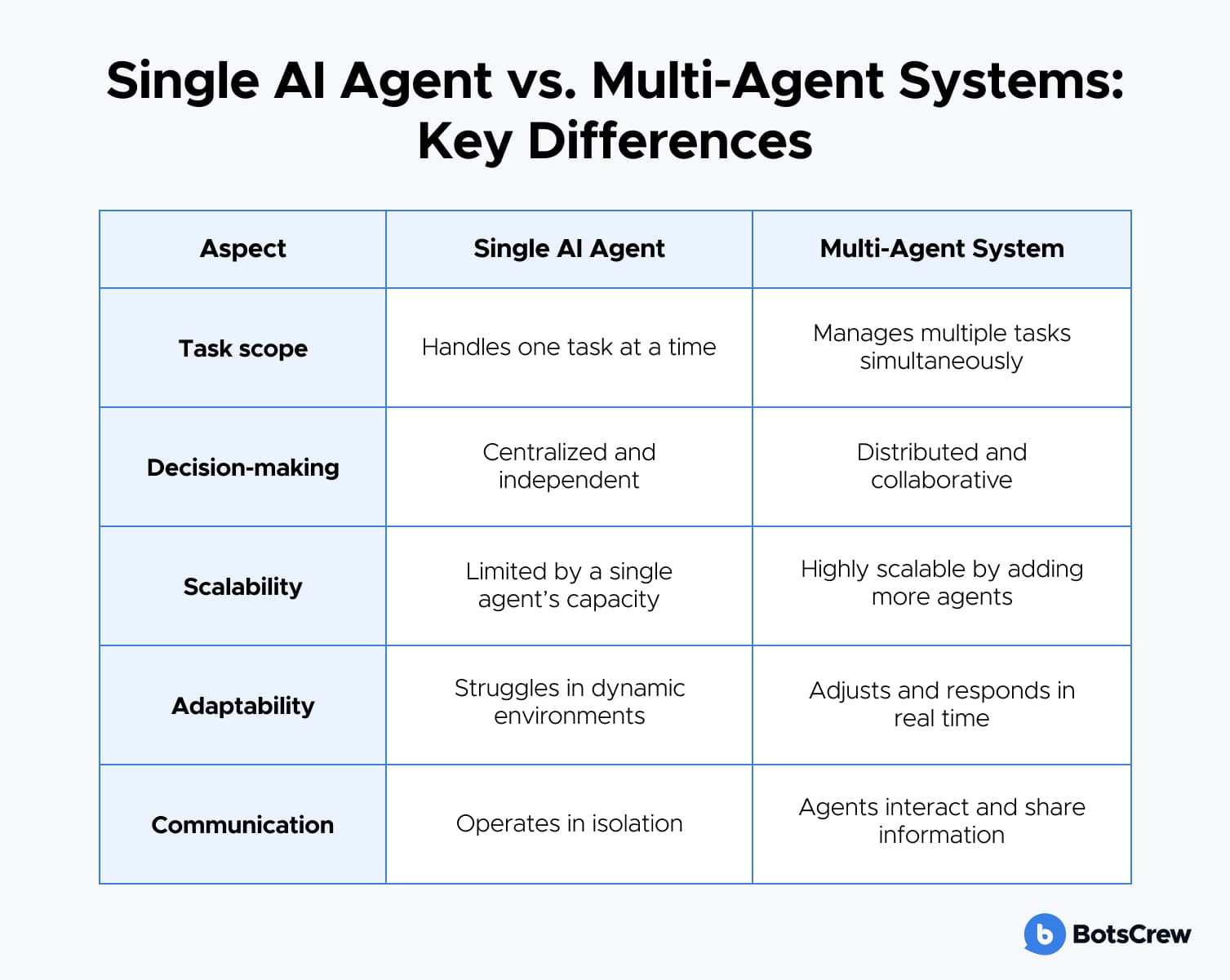

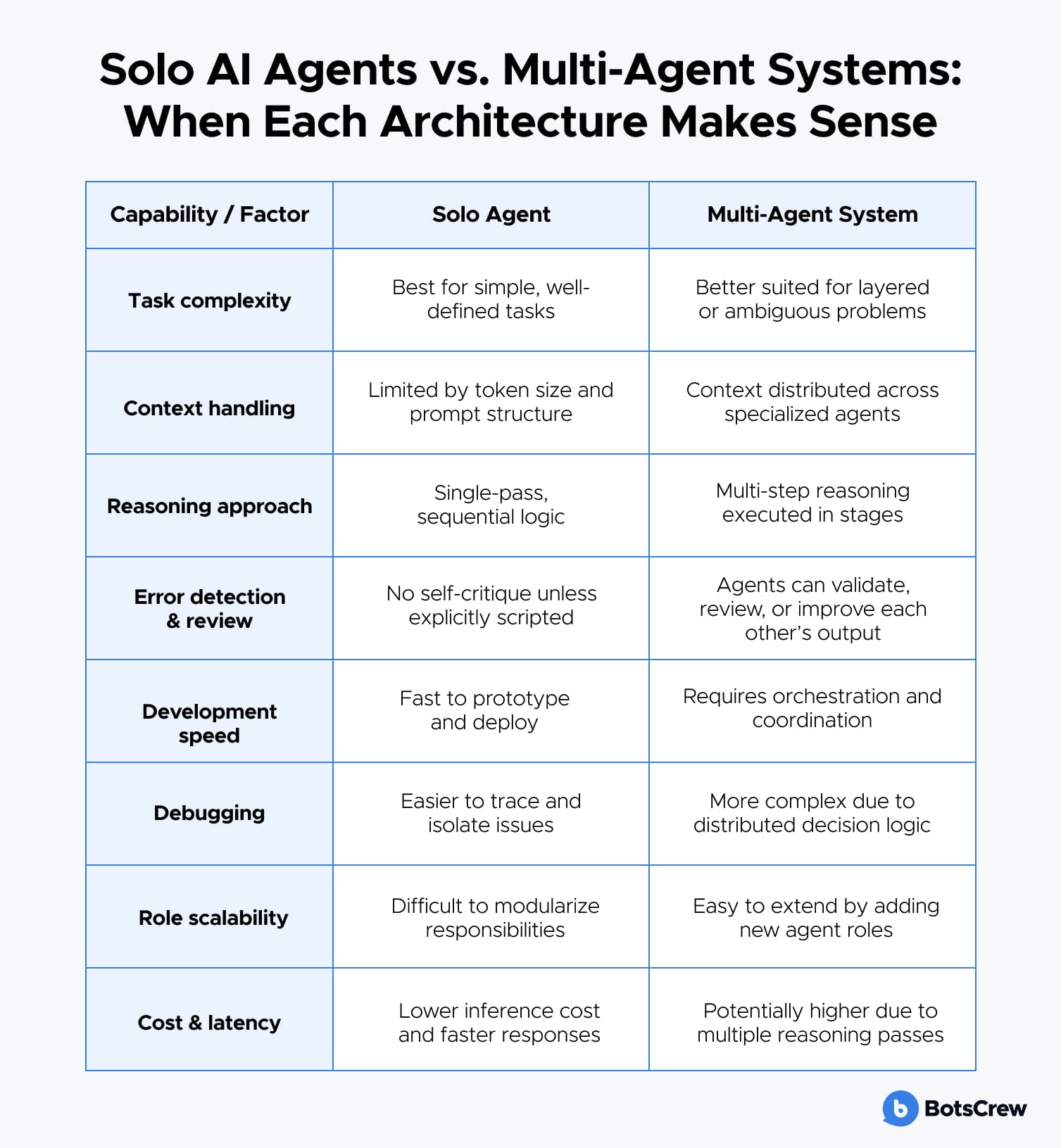

Why Multi-Agent Systems Scale Where Solo Agents Do Not

In a multi-agent setup, one agent plans, others execute, another reviews or validates outcomes, and escalation paths are explicit. This structure mirrors how effective organizations operate. Specialization reduces cognitive load, independent validation reduces error rates, and clear boundaries reduce systemic risk.

Consider a customer-facing use case. A single AI agent might handle chat interactions reasonably well. But a multi-agent system can combine:

- a conversational agent

- a recommendation agent

- a fraud or risk agent

- a decision validation layer.

The customer experience becomes smoother, not because the AI is smarter, but because responsibilities are distributed and controlled.

Types of Agent Interaction: Core Architectural Patterns

A multi-agent system is defined not only by its agents but by the way they coordinate. Different interaction patterns determine execution flow, error propagation, observability, and system resilience. Understanding these patterns is critical when designing AI systems intended for production and enterprise use.

1. Hierarchical Model (Orchestrator–Executor)

In the hierarchical model, a central orchestrator agent is responsible for interpreting the high-level objective, decomposing it into structured subtasks, and assigning those tasks to specialized executor agents. The orchestrator also aggregates results, enforces execution order, and handles escalation when exceptions occur.
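A minimal Python sketch of the orchestrator and executor loop follows. It assumes the decomposition is a fixed plan; in practice an LLM planner might produce that list instead. The executors are plain functions, and the skill names (`sql`, `analysis`, `legal`) are hypothetical. In this sketch, a missing executor or a failing subtask is recorded as an escalation instead of crashing the whole run.

```python
from typing import Callable

Executor = Callable[[str], str]

class Orchestrator:
    def __init__(self, executors: dict[str, Executor],
                 plan: Callable[[str], list[tuple[str, str]]]):
        self.executors = executors          # specialized executor agents, keyed by skill
        self.plan = plan                    # decomposes an objective into (skill, subtask) pairs
        self.escalations: list[str] = []

    def run(self, objective: str) -> dict[str, str]:
        self.escalations = []
        results: dict[str, str] = {}
        for skill, subtask in self.plan(objective):   # enforce execution order
            executor = self.executors.get(skill)
            if executor is None:
                self.escalations.append(f"no executor for {skill!r}")
                continue
            try:
                results[subtask] = executor(subtask)
            except Exception as exc:                  # record and escalate instead of crashing
                self.escalations.append(f"{subtask}: {exc}")
        return results                                # aggregated output


def plan_report(objective: str) -> list[tuple[str, str]]:
    # Hypothetical fixed decomposition for illustration.
    return [("sql", "fetch Q3 sales"), ("analysis", "summarize trends"), ("legal", "check wording")]


orch = Orchestrator(
    executors={"sql": lambda t: f"done: {t}", "analysis": lambda t: f"done: {t}"},
    plan=plan_report,
)
print(orch.run("Q3 sales report"))
print(orch.escalations)  # → ["no executor for 'legal'"]
```

All control flow lives in one place. That is what makes this pattern predictable and auditable, and also what turns the orchestrator into a potential bottleneck at scale.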

From a C-level perspective, the hierarchical model provides:

✅ strong governance and accountability

✅ predictable system behavior

✅ easier compliance with regulatory and internal controls.

This model is best suited for enterprise workflows, regulated environments, and mission-critical processes where reliability outweighs flexibility.

2. Pipeline Model (Sequential Processing)

In the pipeline model, agents are arranged in a fixed sequence, with each agent responsible for a specific transformation of the input: for example, data ingestion → analysis → validation → output generation. Each agent operates independently but passes its output to the next stage.
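The pipeline pattern maps naturally onto an ordered list of stage functions. In this sketch, each stage receives only the previous stage's output, and every hand-off is recorded in an audit trail. The stage logic and the "at least 3 data points" validation rule are assumptions for illustration. A failed validation raises an exception, which stops the pipeline before bad data reaches the output stage.

```python
from typing import Any, Callable

Stage = tuple[str, Callable[[Any], Any]]

def run_pipeline(stages: list[Stage], payload: Any) -> tuple[Any, list[tuple[str, Any]]]:
    """Run stages in fixed order; return the final output and a per-stage audit trail."""
    trail = []
    for name, stage in stages:
        payload = stage(payload)          # each stage transforms the previous output
        trail.append((name, payload))     # record every intermediate result
    return payload, trail

def ingest(raw: str) -> list[float]:
    return [float(x) for x in raw.split(",")]

def analyze(values: list[float]) -> dict:
    return {"count": len(values), "mean": sum(values) / len(values)}

def validate(summary: dict) -> dict:
    # Hypothetical rule: refuse to report on fewer than 3 data points.
    if summary["count"] < 3:
        raise ValueError(f"only {summary['count']} data points")
    return summary

def report(summary: dict) -> str:
    return f"{summary['count']} records, mean {summary['mean']:.2f}"

STAGES = [("ingest", ingest), ("analyze", analyze), ("validate", validate), ("report", report)]

output, trail = run_pipeline(STAGES, "10,20,30,40")
print(output)                       # → 4 records, mean 25.00
print([name for name, _ in trail])  # → ['ingest', 'analyze', 'validate', 'report']
```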

Technically, this approach offers high transparency and traceability at every step, clear separation of concerns, as well as easier testing and validation of individual stages. However, pipelines are inherently rigid: changes in one stage often require reconfiguration of the entire flow, and dynamic task re-planning is limited.

For executives, the pipeline model is attractive when:

- explainability and audit trails are mandatory

- processes are stable and well-defined

- operational risk must be minimized.

Typical use cases include report generation, compliance checks, and standardized decision workflows.

3. Peer-to-Peer Model (Decentralized Collaboration)

In peer-to-peer systems, agents communicate directly with one another without a central coordinator. Each agent decides when and how to request input from others, often based on partial knowledge or evolving context.
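Here is a toy peer-to-peer sketch. Agents hold direct references to one another, and each decides on its own whether to answer locally or query a peer; there is no coordinator. The agents, their knowledge, and the string-based messages are assumptions for illustration. A `visited` set prevents endless ping-pong between peers. Note that each agent's log shows only the requests it made itself, which previews the observability cost of this pattern.

```python
from typing import Optional

class Peer:
    """An agent that answers from local knowledge or asks its direct peers."""

    def __init__(self, name: str, knowledge: dict[str, str]):
        self.name = name
        self.knowledge = knowledge          # what this agent knows locally
        self.peers: list["Peer"] = []       # direct links; no central coordinator
        self.log: list[str] = []            # each agent records only its own requests

    def ask(self, question: str, visited: Optional[set[str]] = None) -> Optional[str]:
        if visited is None:
            visited = set()
        visited.add(self.name)              # avoid asking the same agent twice
        if question in self.knowledge:
            return self.knowledge[question]
        for peer in self.peers:             # local decision: who to ask next
            if peer.name not in visited:
                self.log.append(f"asked {peer.name}: {question}")
                answer = peer.ask(question, visited)
                if answer is not None:
                    return answer
        return None


support = Peer("support", {"refund policy": "30 days"})
billing = Peer("billing", {"invoice status": "paid"})
logistics = Peer("logistics", {"delivery eta": "2 days"})
support.peers = [billing]
billing.peers = [support, logistics]
logistics.peers = [billing]

print(support.ask("delivery eta"))  # → 2 days
```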

From a technical perspective, this enables high adaptability and emergent problem-solving, parallel execution and reduced bottlenecks, and resilience to individual agent failures.

However, these benefits come at a cost: increased system complexity, reduced observability and traceability, and more difficult debugging and root-cause analysis.

At the executive level, peer-to-peer models introduce:

✅ higher innovation potential

⚠️ but also higher governance and operational risk.

This pattern is best reserved for exploratory, research-oriented, or low-risk domains, rather than core business operations.

4. Debate / Consensus Model (Independent Reasoning)

In the debate or consensus model, multiple agents independently analyze the same problem and generate their solutions or recommendations. A separate evaluation layer — either another agent or a human decision-maker — compares these outputs and selects or synthesizes the final result.
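A minimal sketch of the consensus pattern follows. Several independent "analyst" functions answer the same question; they are hypothetical stand-ins for separately prompted agents or different models. An evaluation step accepts the majority answer only if enough agents agree, and otherwise hands the split to a human. The two-thirds agreement threshold is an assumption, not a standard value.

```python
from collections import Counter
from typing import Callable

def consensus(question: str,
              agents: list[Callable[[str], str]],
              min_agreement: float = 2 / 3) -> tuple[str, str]:
    """Return ("accepted", answer) on sufficient agreement, else ("escalate", summary)."""
    answers = [agent(question) for agent in agents]   # independent reasoning
    top_answer, votes = Counter(answers).most_common(1)[0]
    if votes / len(answers) >= min_agreement:
        return "accepted", top_answer
    # The evaluation layer cannot settle it: hand the disagreement to a human reviewer.
    return "escalate", f"split decision: {dict(Counter(answers))}"

analysts = [lambda q: "approve", lambda q: "approve", lambda q: "reject"]
print(consensus("Approve vendor contract?", analysts))  # → ('accepted', 'approve')
```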

Technically, this pattern reduces single-agent bias and hallucinations, improves robustness of reasoning, and provides built-in redundancy for critical decisions. From a C-level viewpoint, the consensus model:

✅ significantly improves decision reliability

✅ aligns well with human review and approval processes

✅ supports high-stakes decision-making with clear accountability.

This model is particularly effective in financial analysis, legal reasoning, strategic planning, and other high-risk scenarios where incorrect outputs carry material consequences.

Human-in-the-Loop as a Foundation

Multi-agent systems are not magic. They introduce their own set of challenges:

⚠️ Emergent behavior: the system begins to act in ways that were not explicitly designed or anticipated

🧩 Combinatorial growth of complexity: the number of possible interactions grows much faster than the number of agents, making the system less predictable

🔍 Loss of explainability: decisions become harder to interpret and justify

📜 Regulatory and compliance challenges: meeting legal and governance requirements becomes more difficult.

For these reasons, multi-agent systems should never be fully autonomous in critical domains. Human-in-the-loop is not a sign of distrust in AI, but a foundational safety mechanism embedded in the system architecture.

The key question is not whether a human is in the loop, but where they are placed.

Core Human-in-the-Loop modes include:

- Approval-based: the agent proposes an action, and a human approves it

- Exception-based: human involvement is triggered only in non-standard or high-risk cases

- Audit-based: decisions are reviewed post hoc.
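The three modes can be expressed as a routing rule. For each proposed action, the rule decides whether to wait for approval, escalate, or execute immediately and log for later audit. The `risk` score, the 0.7 threshold, and the action kinds below are hypothetical; real values would come from your own risk policy.

```python
from dataclasses import dataclass

@dataclass
class ProposedAction:
    kind: str      # e.g. "refund", "account_closure" (illustrative)
    risk: float    # 0.0 to 1.0 score from a hypothetical risk model

# Hypothetical policy values; real ones come from your own risk framework.
APPROVAL_REQUIRED = {"contract_signature", "account_closure"}
EXCEPTION_RISK = 0.7

audit_log: list[ProposedAction] = []   # reviewed by humans after the fact

def route(action: ProposedAction) -> str:
    if action.kind in APPROVAL_REQUIRED:
        return "await_human_approval"   # approval-based: a human signs off first
    if action.risk >= EXCEPTION_RISK:
        return "escalate_to_human"      # exception-based: only unusual or risky cases
    audit_log.append(action)            # audit-based: execute now, review later
    return "execute"

print(route(ProposedAction("refund", 0.1)))           # → execute
print(route(ProposedAction("refund", 0.9)))           # → escalate_to_human
print(route(ProposedAction("account_closure", 0.2)))  # → await_human_approval
```

Only the routine, low-risk action runs without a human in front of it. Even that action leaves a record for post hoc review.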

In mature systems, humans do not perform routine tasks, do not review every decision, and intervene only where errors are costly or irreversible.

Characteristics of a Mature Multi-Agent System

Not all multi-agent systems are production-ready. As AI systems move from experimentation to enterprise deployment, maturity is defined not by autonomy, but by control, observability, and risk management.

A mature multi-agent system is intentionally designed to scale while remaining auditable, governable, and aligned with business objectives. The following characteristics distinguish experimental implementations from systems that can be safely operated in real-world business environments.

Clear role decomposition

Each agent has a narrowly defined responsibility aligned with a specific function in the overall workflow. This separation reduces unintended behavior and makes the system easier to reason about, maintain, and scale.

Principle of minimal authority

Agents are granted only the permissions and capabilities required to perform their assigned tasks. Limiting agent authority reduces the blast radius of failures, prevents unintended actions, and supports stronger security and governance controls.
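One way to implement minimal authority is a deny-by-default grant table keyed by agent, tool, and access level. This sketch assumes every tool call passes through a single gateway that runs the check first. The agent names, tool names, and access levels are hypothetical.

```python
# Hypothetical grants: each agent gets only the (tool, access) pairs its task needs.
GRANTS: dict[str, set[tuple[str, str]]] = {
    "reporting_agent": {("sales_db", "read")},
    "inventory_agent": {("inventory_db", "read"), ("inventory_db", "write")},
}

class ToolGateway:
    def __init__(self, grants: dict[str, set[tuple[str, str]]]):
        self.grants = grants

    def check(self, agent: str, tool: str, access: str) -> None:
        # Deny by default: anything not explicitly granted is refused.
        if (tool, access) not in self.grants.get(agent, set()):
            raise PermissionError(f"{agent} lacks {access} access to {tool}")

gateway = ToolGateway(GRANTS)
gateway.check("inventory_agent", "inventory_db", "write")   # allowed: returns None
try:
    gateway.check("reporting_agent", "sales_db", "write")
except PermissionError as err:
    print(err)  # → reporting_agent lacks write access to sales_db
```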

End-to-end action traceability

Every agent decision, tool invocation, and intermediate output is logged and traceable. This enables auditability, simplifies debugging, supports regulatory requirements, and provides executives with confidence in how outcomes are produced.
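A minimal sketch of end-to-end tracing uses only the Python standard library. Each agent step is stored as one JSON line, and all lines for a request share one `trace_id`, so a reviewer can later reconstruct the full chain. The field names are assumptions. Production systems often rely on a tracing standard such as OpenTelemetry rather than a hand-rolled class like this.

```python
import json
import time
import uuid

class Tracer:
    def __init__(self):
        self.records: list[str] = []   # in practice: a file, log pipeline, or tracing backend

    def new_trace(self) -> str:
        return uuid.uuid4().hex        # one id per end-to-end request

    def log(self, trace_id: str, agent: str, event: str, **details) -> None:
        record = {
            "trace_id": trace_id,
            "ts": time.time(),
            "agent": agent,
            "event": event,            # e.g. "decision", "tool_call", "output"
            "details": details,
        }
        self.records.append(json.dumps(record))

    def replay(self, trace_id: str) -> list[dict]:
        """Reconstruct every step of one request, in the order it was logged."""
        return [r for r in map(json.loads, self.records) if r["trace_id"] == trace_id]

tracer = Tracer()
tid = tracer.new_trace()
tracer.log(tid, "planner", "decision", next_step="fetch_invoice")
tracer.log(tid, "billing_agent", "tool_call", tool="invoice_api", status="ok")
tracer.log(tid, "reviewer", "output", approved=True)
for step in tracer.replay(tid):
    print(step["agent"], step["event"])
```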

Formalized human-in-the-loop control points

Human oversight is embedded at clearly defined stages of the workflow — particularly for high-impact, ambiguous, or irreversible decisions. These checkpoints ensure accountability without reintroducing manual bottlenecks into automated systems.

Quality and risk metrics beyond speed

System performance is measured not only by throughput or latency, but by outcome quality, error rates, confidence levels, and risk exposure. Mature systems prioritize decision reliability and business impact over raw execution speed.
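To illustrate measuring beyond speed, the sketch below computes error, escalation, and low-confidence rates alongside median latency from a list of outcome records. The record fields, the 0.6 confidence cut-off, and the four sample records are made up for illustration. Which metrics actually matter is a business decision.

```python
from dataclasses import dataclass
from statistics import median

@dataclass
class Outcome:
    latency_s: float
    correct: bool        # judged later by a reviewer or against ground truth
    escalated: bool
    confidence: float    # agent-reported, 0.0 to 1.0

def quality_report(outcomes: list[Outcome], low_conf: float = 0.6) -> dict[str, float]:
    n = len(outcomes)
    return {
        "median_latency_s": median(o.latency_s for o in outcomes),
        "error_rate": sum(not o.correct for o in outcomes) / n,
        "escalation_rate": sum(o.escalated for o in outcomes) / n,
        "low_confidence_rate": sum(o.confidence < low_conf for o in outcomes) / n,
    }

# Made-up sample records, for illustration only.
sample = [
    Outcome(1.2, True, False, 0.9),
    Outcome(0.8, False, False, 0.5),
    Outcome(2.0, True, True, 0.4),
    Outcome(1.0, True, False, 0.8),
]
print(quality_report(sample))
```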

Executive Best Practices for Building Multi-Agent AI Systems That Scale

Succeeding in the Agentic Age demands a disciplined approach to agent lifecycle management, modular governance, and measurable business outcomes.

As organizations move from isolated AI tools to distributed agent ecosystems, the primary challenge becomes coordination at scale — preventing agent sprawl, ensuring reliability, and maintaining executive-level control. The following best practices outline how leading organizations transform collections of AI agents into cohesive, high-performance digital workforces.

Treat Modularity as a Strategic Hedge

AI systems age faster than most enterprise platforms. Models evolve, regulations shift, vendors change, and business priorities outpace technical roadmaps. Architectures that assume stability quickly become liabilities.

Best-in-class multi-agent systems are designed to be modular. Agents are treated as replaceable units rather than tightly coupled components. Interfaces are explicit. Memory, tools, reasoning logic, and AI agent orchestration layers are decoupled.

This modularity allows organizations to upgrade, swap, or retire individual components without destabilizing the system. In practice, it reduces technical debt, accelerates iteration, and protects long-term ROI.
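In Python, an explicit interface can be as light as a `typing.Protocol`. The calling code depends only on the interface, so one implementation can later be swapped for another without touching the callers. For example, a rules-based agent could be replaced by an LLM-backed one. Both classes below are hypothetical placeholders, not real agents.

```python
from typing import Protocol

class Summarizer(Protocol):
    """Explicit interface: any agent with this method can fill the 'summarizer' slot."""
    def summarize(self, text: str) -> str: ...

class FirstSentenceSummarizer:
    # Cheap rules-based implementation, e.g. for tests or as a fallback.
    def summarize(self, text: str) -> str:
        return text.split(". ")[0].rstrip(".") + "."

class TruncatingSummarizer:
    # Placeholder for a different backend (such as an LLM call) behind the same interface.
    def __init__(self, max_chars: int = 40):
        self.max_chars = max_chars

    def summarize(self, text: str) -> str:
        return text[: self.max_chars]

def build_digest(doc: str, summarizer: Summarizer) -> str:
    # Orchestration code depends only on the interface, not on a concrete agent.
    return f"DIGEST: {summarizer.summarize(doc)}"

doc = "Release is delayed. Tests are green. Docs are next."
print(build_digest(doc, FirstSentenceSummarizer()))  # → DIGEST: Release is delayed.
print(build_digest(doc, TruncatingSummarizer(10)))   # → DIGEST: Release is
```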

Decentralize Execution Without Sacrificing Control

Early multi-agent systems often rely on centralized orchestration, frequently implemented with an AI agent orchestration framework: one agent plans, delegates, and decides. This approach feels safe until scale exposes its limits. As workloads grow, centralized control becomes a bottleneck and a single point of failure. Mature systems distribute decision-making to specialized agents, enabling speed, resilience, and parallel execution.

However, decentralization without governance creates unpredictability. The strongest architectures strike a balance between autonomy and constraints. Agents operate independently within clearly defined boundaries and escalate to supervisors — whether human or machine — when their confidence drops or risk thresholds are exceeded.

Design Human-in-the-Loop as a Control Layer

Mature systems do not require human intervention in routine execution. Instead, they insert human judgment precisely where it matters most — where error cost is high, uncertainty is material, or accountability is required.

Approval-based, exception-based, and audit-based oversight models allow organizations to maintain speed without sacrificing responsibility. The critical question is not whether humans are in the loop, but where they are positioned.

Assume Emergence and Invest in Observability

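Because no one designs every interaction between agents in advance, emergent behavior is a design assumption, not an edge case. High-performing organizations therefore invest heavily in tracing, logging, and behavioral analytics across agent interactions. This enables early anomaly detection, root-cause analysis, and proactive adjustment of constraints.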
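Anomaly detection over agent traces can start with simple rules before anything statistical. This sketch assumes trace events are ordered `(agent, tool, ok)` tuples, which is a made-up format. It flags two common symptoms of emergent misbehavior. The first is an agent calling the same tool many times in a row, which suggests a loop. The second is an agent whose tool-error rate exceeds a threshold. Both thresholds are illustrative.

```python
from collections import defaultdict

def find_anomalies(events: list[tuple[str, str, bool]],
                   max_repeats: int = 3,
                   max_error_rate: float = 0.5) -> list[str]:
    """Scan ordered (agent, tool, ok) events and return human-readable alerts."""
    alerts: list[str] = []
    last_tool: dict[str, str] = {}
    streak: dict[str, int] = defaultdict(int)
    calls: dict[str, int] = defaultdict(int)
    errors: dict[str, int] = defaultdict(int)

    for agent, tool, ok in events:
        # Consecutive calls of the same tool by the same agent (other agents' events ignored).
        streak[agent] = streak[agent] + 1 if last_tool.get(agent) == tool else 1
        last_tool[agent] = tool
        if streak[agent] == max_repeats + 1:        # alert once per streak
            alerts.append(f"possible loop: {agent} called {tool} more than {max_repeats}x in a row")
        calls[agent] += 1
        errors[agent] += 0 if ok else 1

    for agent in calls:
        rate = errors[agent] / calls[agent]
        if rate > max_error_rate:
            alerts.append(f"high error rate: {agent} failed {rate:.0%} of tool calls")
    return alerts

events = [("search_agent", "web_search", True)] * 5 + [
    ("billing_agent", "invoice_api", False),
    ("billing_agent", "invoice_api", False),
    ("billing_agent", "ledger_api", True),
]
for alert in find_anomalies(events):
    print(alert)
```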

Getting Started with Multi-Agent Systems

Instead of relying on a single, overextended model, businesses are moving toward coordinated teams of AI agents that can plan, execute, validate outcomes, and adapt to complex, multi-step processes.

This approach enables a higher level of automation, better decision quality, and significantly lower operational risk. When designed correctly, multi-agent systems turn AI from a tactical tool into a reliable, enterprise-grade capability.

At BotsCrew, we help companies move from experimentation to production-ready multi-agent systems. Our role goes beyond building agents — we design the full architecture required to operate AI reliably in real business environments. We work with teams to:

🏗️ Design and build multi-agent systems capable of executing complex, multi-step workflows

🧩 Engineer and structure prompts as part of the system's reasoning and decision logic

📚 Implement RAG architectures that enable agents to work reliably with documentation and knowledge bases

🔌 Integrate external tools and APIs so agents can take real, verifiable actions

📊 Measure, test, and monitor performance, quality, and risk across agent systems

🚀 Deploy and scale multi-agent solutions in production with enterprise-grade security and reliability.

The result is not just smarter AI — but AI systems that are observable, controllable, and built to scale.
