Key AI Metrics for Project Success and Smarter LLM Evaluation

Your practical guide to AI metrics and measuring AI success beyond the hype.

Imagine this: you just rolled out an AI-powered virtual assistant for customer support. It looks sleek, answers requests fast, and your team is excited. But a month later, customer complaints spike — "The bot gave me wrong info," "It didn't understand my question."

AI-powered projects often fail quietly because no one defines what "success" means in measurable terms. Without AI metrics, you're flying blind.

In this guide, we'll break down:

✅ What to measure in AI projects

✅ How to evaluate LLM outputs (chatbots, agents, RAG pipelines)

✅ Practical steps for setting up an AI evaluation framework

✅ How does human-in-the-loop improve AI accuracy

✅ What KPIs are best for measuring AI-driven efficiency?

Not sure if your AI initiatives are delivering real value? In just 15 minutes, we'll identify gaps, reveal missed opportunities, and give you actionable insights.

Why AI Metrics Matter for Your Business ROI

LLMs (Large Language Models) are transforming how enterprises operate — from automating customer support to generating financial reports. However, every response your model generates carries business risk.

A single hallucination — a fabricated fact or misinterpreted policy — can trigger consequences that go far beyond a bad user experience: lost revenue, legal exposure, compliance failures, and reputational damage that's hard to reverse.

For Fortune 500 companies, banks, insurers, and government agencies, the stakes are even higher. Each wrong answer scales risk across thousands of customer touchpoints and internal processes.

The uncomfortable truth? Most companies measure response time, not response accuracy. Internal teams rarely benchmark models against real-world compliance rules. Hallucination detection is almost always reactive — after the damage is done.

When you don't evaluate the LLM output, you can't detect drift (models degrading over time), prove compliance (a significant issue during audits), and quantify ROI because you don't know how much risk you avoided or created.

What KPIs Are Best for Measuring AI-driven Efficiency?

When evaluating AI projects, especially those leveraging Large Language Models (LLMs), understanding how the system performs technically is just as crucial as measuring business outcomes. So, what metrics help us to measure consistency and accuracy of results of an AI system?

Technical generative AI evaluation metrics provide a reliable lens into model quality, highlighting strengths, weaknesses, and hidden risks that could affect your ROI, compliance, or customer experience. Let's break down the most critical AI agent performance analysis metrics and what they tell you about your AI deployment.

AI Reply Correctness (Accuracy)

At its core, AI Reply Correctness (AI accuracy rate) measures the proportion of AI responses that meet predefined correctness criteria. These criteria may include factual accuracy, proper instruction-following, or outputs that align with internal workflows, business rules, or authoritative data sources.

Why it matters: an AI system can be technically advanced yet still fail in practice if its responses are incorrect or inconsistent. Low reply correctness erodes user trust, introduces operational friction, and in enterprise settings can create compliance, financial, or reputational risk.

Real-world benchmarks:

- For knowledge base assistants in enterprise environments: 85–90% task accuracy is considered strong.

- Customer support bots in high-volume contact centers often target 80–88% to balance automation efficiency and human fallback.

Suppose your AI assistant is trained to answer 100 questions using company documentation. If 90 responses meet the correctness criteria, the accuracy is 90%, reflecting solid baseline performance. Tracking this metric over time enables you to measure improvements after prompt adjustments, model updates, or knowledge base expansions.

In the Kravet Internal AI project, initial accuracy during the pilot phase was below 60%, despite the assistant being trained on large volumes of internal documentation. The primary causes were not model limitations, but data quality and retrieval issues: conflicting and outdated files, unreadable formats, inconsistent product specifications, and unpredictable source selection within the RAG pipeline.

To improve AI Reply Correctness, we introduced a measurement-first optimization loop:

✅ Defined correctness criteria jointly with the client and labeled responses as correct, partially correct, or incorrect.

✅ Tested the AI against a fixed set of real employee questions after each iteration.

✅ Improved retrieval logic by increasing the number of RAG sources, prioritizing structured systems (e.g., product databases and inventory APIs), and removing outdated or low-quality content.

✅ Tuned model behavior by adjusting temperature settings and switching to a large-context LLM to reduce hallucinations and answer volatility.

By tracking AI Reply Correctness after every change, the team was able to quantify progress and make targeted improvements. As a result, the internal AI assistant reached nearly 90% accuracy, aligning with — and in some cases exceeding — enterprise benchmarks for internal knowledge assistants.

Faithfulness (RAG Systems)

Faithfulness measures whether the AI's answer is factually aligned with the data retrieved from your knowledge base. In Retrieval-Augmented Generation (RAG) systems, the model doesn't just ''make things up'' — it retrieves data from your sources and then generates a reply. Faithfulness ensures the response is grounded in that data, not invented.

Imagine your knowledge base says: ''Standard delivery takes 3–5 business days.'' If a customer asks ''How long is delivery?'', a faithful answer would be: ''Delivery usually takes 3–5 business days.'' An unfaithful answer would be: ''Delivery takes 1–2 days'' — because that's not supported by your data.

Why it matters: hallucinations in regulated industries such as finance, healthcare, and government can have severe consequences: compliance violations, legal penalties, or loss of customer trust. Faithfulness directly reflects your AI's risk exposure.

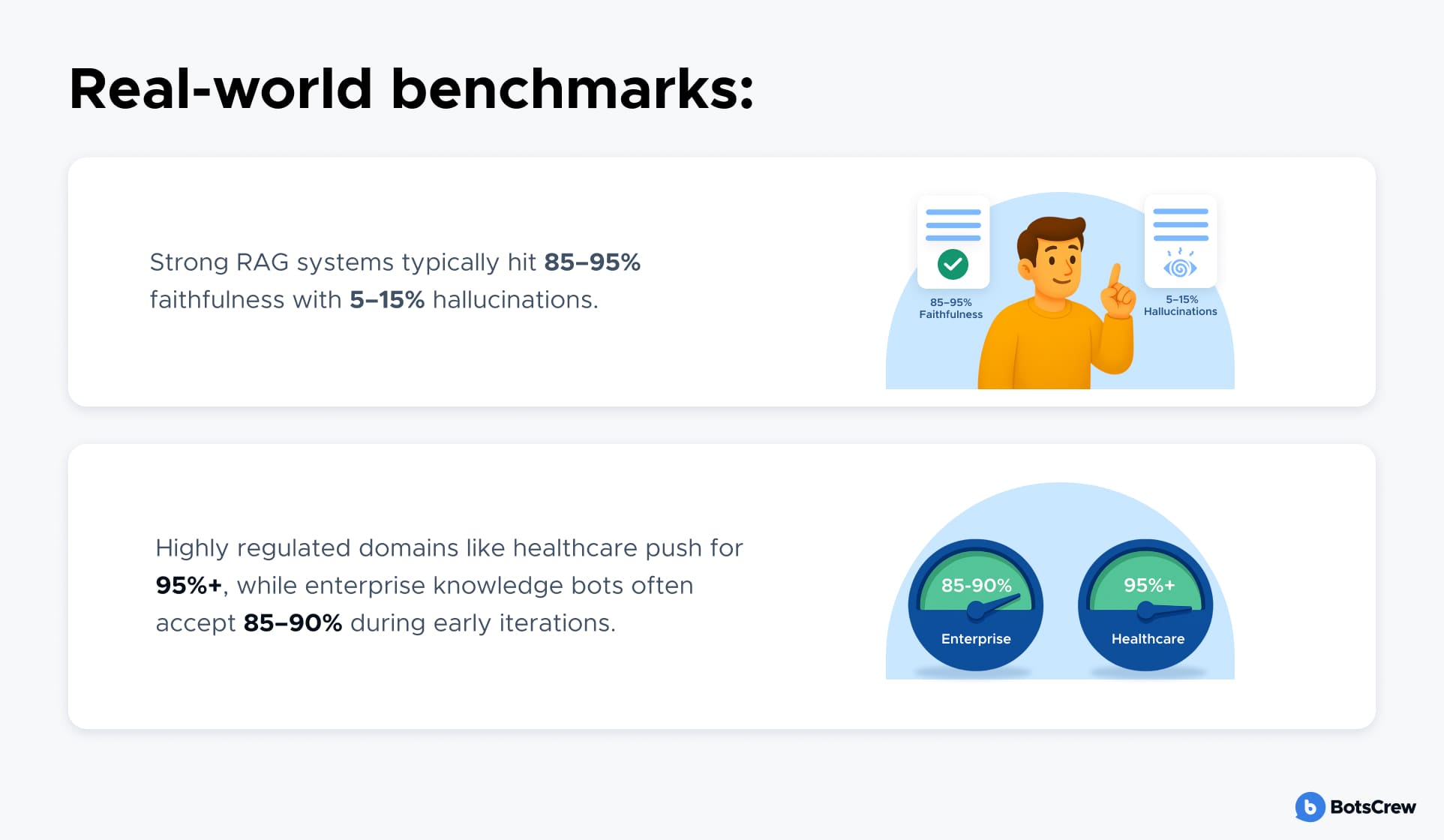

Real-world benchmarks:

- High-quality RAG systems should maintain 85–95% faithfulness, with hallucinations ideally below 5–15%.

- Benchmarks vary by domain complexity: healthcare assistants may target >95%, while internal enterprise knowledge bots might tolerate 85–90% as iterative improvements continue.

If 85 out of 100 knowledge-based responses accurately reflect retrieved data, faithfulness = 85%, meaning 15% of responses risk being hallucinated or misleading.

Contextual Relevance (RAG Systems)

Even the most accurate LLM can fail if it retrieves irrelevant information. Contextual Relevance evaluates whether the system selects the correct knowledge chunks or data points before generating an answer.

Why it matters: incorrect context leads to technically valid but operationally useless answers, undermining efficiency and customer experience. For example, a support bot pulling outdated product documentation may confidently provide wrong guidance, resulting in escalations.

Real-world benchmarks:

- Top-tier implementations achieve 90–95% relevance for retrieved content.

- Anything below 80% typically signals retrieval pipeline issues, not LLM flaws, highlighting the need for combined human review and automated AI evaluation.

In a customer service AI, if 92 out of 100 retrieval attempts bring relevant information, the system’s contextual relevance = 92%.

Hallucinations (Non-RAG)

Hallucination in non-RAG scenarios evaluates whether the AI creates content outside the provided static instructions or knowledge base. This metric is particularly critical for models used in regulatory reporting, contracts, or sensitive advisory applications.

Why it matters: unchecked hallucinations can compromise compliance, distort decision-making, and erode trust with end-users. Unlike RAG systems, where hallucinations can often be traced back to retrieval errors, non-RAG hallucinations are purely model-driven and harder to control without strict evaluation.

Real-world benchmarks:

- Best-in-class AI assistants maintain hallucination rates of <5%.

- Anything above 10–15% is typically unacceptable in high-stakes industries like finance, law, or healthcare.

If an AI generating contract clauses hallucinates in 3 out of 100 cases, the hallucination rate = 3%, within an acceptable threshold for enterprise deployment.

Tool Correctness (Agents)

For AI agents that perform actions — calling APIs, retrieving data, or executing workflows — Tool Correctness measures whether the right tool is used with the correct parameters.

Why it matters: a single misrouted API call or incorrect parameter can break workflows, produce invalid reports, or trigger security alerts. Accuracy here is essential for operational integrity.

Real-world benchmarks:

- Leading implementations aim for >95% tool execution success.

- Systems below 90% risk cascading errors and user dissatisfaction, especially in multi-step workflows like order processing, patient triage, or financial data updates.

If an AI agent performs 100 tasks and calls the correct tool in 97 cases, tool correctness = 97%, which is considered enterprise-grade AI reliability.

Conversational Relevance

Multi-turn conversations (those where the user and the system exchange multiple messages in sequence, rather than just a simple "one question — one answer." In such scenarios, it's important for the AI to "remember" the context of previous turns and take it into account when generating its responses) introduce complexity. Conversational Relevance evaluates whether the AI stays on topic and maintains coherence across dialogue turns.

Why it matters: even accurate, tool-compliant responses can frustrate users if the AI drifts off-topic or repeats irrelevant information. Maintaining relevance across turns ensures smoother interactions, faster problem resolution, and higher customer satisfaction.

Real-world benchmarks:

- High-performing assistants achieve 85–90% relevance across multi-turn dialogues.

- Benchmarks are stricter in support and advisory contexts where every turn carries risk or cost.

In a healthcare chatbot, if 86 out of 100 dialogue sequences remain coherent and relevant throughout, conversational relevance = 86%.

Practical AI Testing Framework to Start Measuring LLM Performance

Launching an AI project without a structured evaluation plan is like flying blind. To ensure your AI solution delivers measurable value, you need a repeatable framework that links business goals with technical performance. Here is a practical step-by-step approach.

Step 1: Define the Task

Begin by specifying exactly what you want the AI to do. This could be answering customer questions from your knowledge base, classifying incoming requests, generating product descriptions, or assisting agents with tool-augmented workflows. The key is clarity: a well-defined task ensures your evaluation focuses on outcomes that matter. For example, a retail AI assistant might be tasked with responding to FAQs about orders, returns, and promotions.

Step 2: Define What "Correct" Looks Like

Next, determine the criteria that define a successful output. For LLMs, correctness is rarely binary — it can encompass factual accuracy, alignment with company knowledge, adherence to regulatory guidelines, and tone. For instance, a response is "correct" if it cites the knowledge base accurately and follows the company's approved phrasing. Defining this upfront prevents subjective judgments later and ensures your AI metrics reflect business priorities.

Step 3: Create the Test Dataset

Once tasks and criteria are defined, gather the inputs on which your AI will be tested. These can be real-world examples (like historical customer queries), synthetic scenarios created to cover edge cases, or a combination of both. Each test case should include the input, expected output (if applicable), and any context needed for AI evaluation. Structuring this dataset in a tabular format makes it reusable, scalable, and easier to pass through automated evaluation scripts.

How much data do you need for Testing? Too small, and your results may be misleading; too large, and you risk wasting resources. The optimal size depends on the stage of development and the level of confidence required.

👉 Small datasets (50–100 cases) are suitable for quick checks during development. They help identify obvious errors, test initial prompts, and catch glaring hallucinations, but results may fluctuate and should not be used for final deployment decisions.

👉 Medium datasets (100–500 cases) provide reliable accuracy estimates. They allow you to measure performance across a representative set of scenarios, uncover patterns of failure, and benchmark improvements after model updates. For many enterprise pilots, this is the perfect balance between effort and insight.

👉 Large datasets (500+ cases) are necessary when you need high confidence for enterprise-scale projects. These datasets reduce sampling variance, provide statistically robust AI metrics, and can uncover edge-case failures that smaller sets might miss — critical for regulated industries or high-stakes deployments.

Step 4: Run the Evaluation

With your dataset ready, execute the evaluation. In the early stages of an AI project, manual evaluation is often the most reliable approach. Human reviewers compare AI outputs against your reference answers or "golden responses," checking for factual accuracy, completeness, adherence to tone, and alignment with internal knowledge or compliance rules.

As the AI matures and the volume of queries grows, organizations can integrate automated evaluation pipelines to scale the process while maintaining consistency. Automated evaluation can take several forms:

— Rule-based checks: Define rules or patterns that a correct answer must satisfy. This might include verifying that certain keywords or phrases appear in the output, or ensuring that prohibited content is never included.

— Embedding-based similarity: Measure how closely the AI's response aligns with the expected output using vector similarity. This allows the system to handle minor variations in phrasing while still assessing correctness.

— Model-as-a-judge (LLM-as-judge): A secondary AI evaluates the primary model's output against reference responses. This approach can combine semantic understanding with rule-based logic, making it possible to assess correctness, tone, and adherence to guidelines at scale.

Step 5: Aggregate & Analyze Results

After the evaluation, key AI performance metrics should be collected and analyzed in a structured way. Overall accuracy provides a high-level view, but it is equally important to break performance down by scenario or use case. For example, a model might handle common FAQs perfectly but struggle with edge cases or tool-augmented workflows. Identifying these gaps helps prioritize interventions where they matter most.

Consistency and variance are also critical. Running the same queries multiple times reveals whether the AI is reliable or produces inconsistent answers. Queries with high variance indicate areas where the model's behavior is unpredictable and therefore unsafe for production without further refinement.

Equally important is categorizing the types of errors. Some errors are factual — the model hallucinates or invents information. Others involve omissions, where responses are incomplete, or tone and style issues, where the answer is technically correct but does not match company guidelines.

In tool-augmented setups, errors can also occur if the model calls the wrong tool or provides incorrect parameters. By separating errors into these categories, it becomes clear whether problems arise from the model itself, the prompt design, or gaps in the knowledge base.

Where supported, confidence scores can add another layer of insight. Low-confidence outputs can be automatically flagged, allowing for fallback mechanisms such as escalation to a human agent or a safe default response. These AI agent performance analysis metrics, along with accuracy, variance, and error type, can be visualized in dashboards or heatmaps to track performance across categories and over time, revealing trends and guiding continuous improvements.

Step 6: Human Review of Edge Cases

Even the most advanced automated evaluation systems cannot handle every situation perfectly. There will always be nuanced or high-stakes queries where human judgment is essential. This includes outputs flagged as low-confidence by the model, high-value queries that carry regulatory or reputational risk, or cases where repeated testing reveals inconsistent answers.

Low-confidence outputs should constantly be reviewed before they are trusted. Similarly, a small fraction of queries — for example, compliance-related financial questions, legal or medical recommendations, or messages intended for VIP customers — may account for only 1% of total interactions but carry outsized risk if mishandled. Any query that produces inconsistent answers, even if occasionally correct, should also be escalated to subject matter experts (SMEs) for review.

The human review process is structured around a few key practices. SMEs maintain a set of gold-standard responses that define the benchmark for correct answers. Rather than reviewing every output, they focus on flagged cases — low-confidence, inconsistent, or business-critical queries. Human feedback is then incorporated into the test dataset, creating a feedback loop that continuously improves both automated evaluation and the knowledge base.

Consider an insurance chatbot. About 90% of user queries — such as password resets or basic policy questions — can be safely evaluated automatically. The remaining 10%, involving policy interpretation or claims advice, are routed to SMEs for manual review. Decisions made by SMEs are logged and used to refine both the evaluation process and the knowledge base. This hybrid approach balances cost and scalability with safety and AI reliability, ensuring that critical queries are always handled correctly.

Step 7: Track Metrics, Improve, and Repeat

The final step in a structured AI evaluation framework is to turn insights into continuous improvement. Collecting AI performance metrics is only helpful if you actively analyze them, identify patterns, and feed lessons back into the system to enhance performance over time.

Once AI agent performance analysis metrics are collected, the next step is pattern analysis. Identify recurring failure modes — for example, specific types of queries that consistently trigger hallucinations, incomplete answers, or tool misfires. This analysis informs precise interventions:

— Prompt adjustments: Refine prompts to be more specific, structured, or aligned with the AI's role. For instance, adding instructions like "always reference the internal policy manual" or "return output in a numbered list" can reduce errors and increase clarity.

— Knowledge base updates: Fill gaps or correct inconsistencies uncovered by the evaluation. Regularly updating the knowledge base ensures that the AI has access to complete, accurate information for future queries.

— Model retraining or fine-tuning: For larger-scale issues or systematic errors, retraining or fine-tuning the model on curated datasets can significantly improve performance. This is particularly valuable when deploying domain-specific AI that needs to understand internal jargon, complex workflows, or regulatory requirements.

This is an iterative cycle. Each round of evaluation informs the next, creating a feedback loop where AI agent evaluation metrics drive improvements, improvements generate better outputs, and outputs provide new data for further evaluation.

FAQ on Evaluation & Our Approach Revealed

AI doesn't always get it right on the first try. This FAQ walks through steps and strategies to ensure AI responses are accurate, consistent, business-aligned, and safe to use at scale.

Do you monitor outputs after deployment?

We do. Monitoring is continuous and real-time. Frameworks we use:

- LangGraph: tracks the reasoning path AI took.

- LangFuse: logs and analyzes all inputs/outputs.

- OpenAI Traces: native analytics for OpenAI-based apps.

If off-the-shelf tools don't fit, we build tailored dashboards with AI agent performance analysis metrics such as average response time, accuracy % against the golden dataset, % of blocked answers, error frequency & type. For instance, if logs show 20% of queries are slow due to tool latency, we can optimize the integration.

How do you define "good" vs. "bad" responses for a business use case?

— Discovery phase: Define pass/fail criteria together (accuracy, completeness, compliance, tone, format).

— Test dataset: Usually ~50 representative queries with labeled outputs.

— Golden answers: Client-approved "reference responses" created as benchmarks.

— Client input: Business teams provide examples of expected queries & replies.

Without shared criteria, "good" might mean detailed to one person and concise to another. This step removes ambiguity.

When is a model good enough to go live?

When it consistently reaches the target accuracy agreed with the client (often ≥85% on test data). Benchmarked against past projects to validate readiness.

Can evaluation be done without humans?

Not reliably. Humans define "gold standard." AI helps scale the evaluation.

How to test AI models?

- Build a diverse input set (frequent queries + edge cases).

- Run the model and the check pass rate.

- Expand over time with new real-world queries.

How can we estimate accuracy with only limited testing?

True accuracy (on all possible queries) is unknowable. Instead, use observed accuracy + margin of error (MoE). Example:

- 100 test queries, observed accuracy = 85%

- MoE = ±7%

- With 95% confidence, real accuracy lies between 78%–92%.

This gives a statistically sound range instead of a single guess.

How to check consistency if LLM outputs vary?

- Select critical queries (SME-defined).

- Run each query multiple times (5–10).

- Calculate the success rate per query and the overall mean.

- Flag queries as Stable (low variance) or Unstable (high variance).

This helps catch unreliable queries that work sometimes but not consistently.

By systematically tracking LLM performance, businesses transform AI from a high-tech experiment into a controlled, measurable driver of value, risk mitigation, and growth.

At BotsCrew, we help Fortune 500 companies, government institutions, and non-profits transform AI from a promising experiment into a business-ready asset. Our focus is on measurable impact: accuracy, compliance, efficiency, and customer satisfaction. When you book a free 15-minute consultation, you will:

1️⃣ Understand which AI agent evaluation metrics matter for YOUR use case. We help identify the key indicators that directly influence your business outcomes, so you focus on what drives real value.

2️⃣ Get a quick health check of your AI solution. Through a high-level evaluation of your current system, we pinpoint areas of risk such as hallucinations, inconsistent replies, or incorrect tool usage. This gives you a clear view of whether your AI is ready for production or needs iterative improvement.

3️⃣ Discover how to scale safely without hidden risks. Scaling AI without understanding its limitations can amplify errors, compliance breaches, or customer dissatisfaction. We outline actionable steps to safely expand AI adoption while maintaining accuracy, consistency, and trustworthiness across all use cases.

Your AI shouldn't just be smart — it should be measurable, accountable, and aligned with your business goals.