Small Language Models vs Large Language Models: How to Choose

A deep dive into architecture, efficiency, and deployment strategies for small language models vs large language models — and what every business leader needs to know before choosing the right model for their AI stack.

🤔 Does size matter? In AI software development, the strategic answer is: it depends. Especially when budgets tighten, expectations rise, and AI initiatives are under increasing scrutiny from the board.

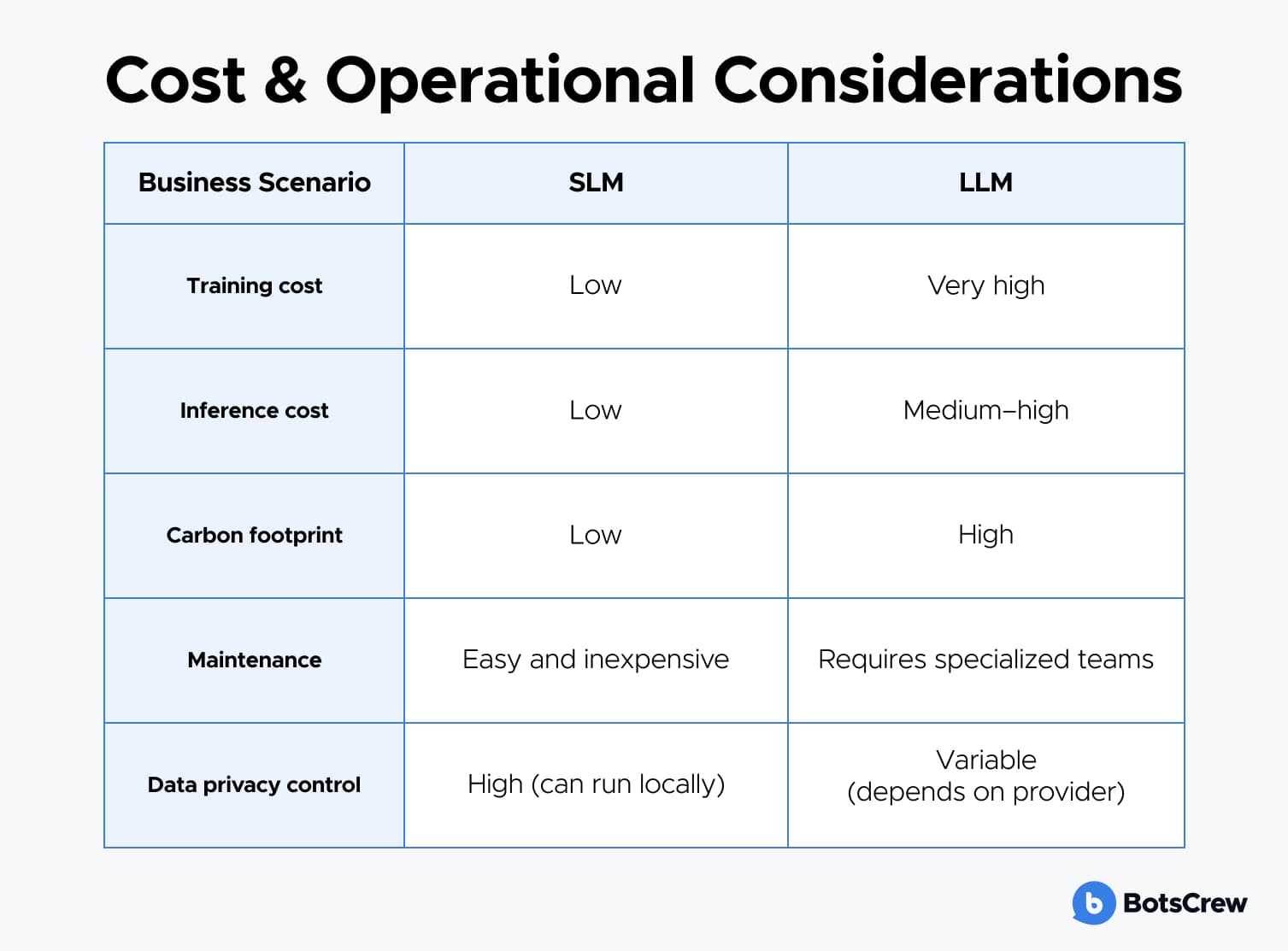

Today, businesses face a critical choice: invest in large language models (LLMs) with higher costs and complexity, or adopt small language models (SLMs) for a leaner, more cost-efficient path to scalable generative AI — without compromising business impact.

This tutorial delivers an executive-level breakdown of small language models vs large language models, examining architectural differences, cost structures, performance trade-offs, deployment complexity, governance considerations, and high-impact enterprise use cases.

What Are Language Models and Why They Matter for Your Business

A Language Model (LM) is an AI system designed to understand and generate human language by recognizing patterns and relationships within text. In practical terms, LMs allow machines to interpret context, generate coherent responses, and perform complex language tasks — from chatbots and search engines to automated reports and customer support.

LMs have a direct impact on operational efficiency, customer experience, and competitive advantage. The right model can reduce manual work, accelerate decision-making, and unlock new revenue streams, while the wrong choice can lead to escalating costs, delayed projects, and governance risks.

Language models can be categorized into two main types:

1️⃣ A Large Language Model (LLM) is an AI system with billions of parameters, capable of understanding and generating complex language and delivering high performance across a wide range of natural language tasks.

2️⃣ A Small Language Model (SLM) is a lightweight AI model designed to understand and generate language while requiring far less computing power, memory, and cost than a large language model.

For business leaders, selecting the right LM affects cost, deployment complexity, risk, speed to value, and long-term AI scalability. Making an informed choice ensures your AI initiatives deliver measurable ROI, build operational resilience, adapt in real time to market shifts, and seize opportunities before competitors recognize them.

The Arrival of Large Language Models

In recent years, large language models (LLMs) such as GPT-4o, Gemini, and Llama have transformed the AI landscape. Advances in deep learning, access to massive datasets, and increased computational power have enabled these models to achieve unprecedented capabilities.

LLMs are built with billions of parameters — weights the model adjusts during training to improve prediction accuracy. This massive scale allows LLMs to capture complex linguistic patterns and excel across diverse language-based tasks.

However, this power comes with a cost. LLMs require substantial computational resources for training and deployment, including high-end GPUs and significant electricity consumption. Operational costs can be high, and the environmental impact notable, making LLM deployment most practical for organizations with the necessary infrastructure and budget.

When LLMs are the strategic option:

✅ You operate at enterprise scale with high-complexity workloads

✅ You need broad generalization, not narrow task execution

✅ You serve global markets requiring multilingual intelligence

✅ Customer-facing applications require fluid, natural conversation

✅ Insights must be synthesized across massive unstructured data sets

✅ Teams need advanced decision-support systems.

The Rise of Small Language Models

What are small language models, when should you choose them, and what advantages do they offer? Small language models (SLMs) are a leaner, more resource-efficient alternative to LLMs. As scaled-down counterparts of larger models, SLMs deliver natural language processing capabilities without the heavyweight infrastructure that LLMs demand.

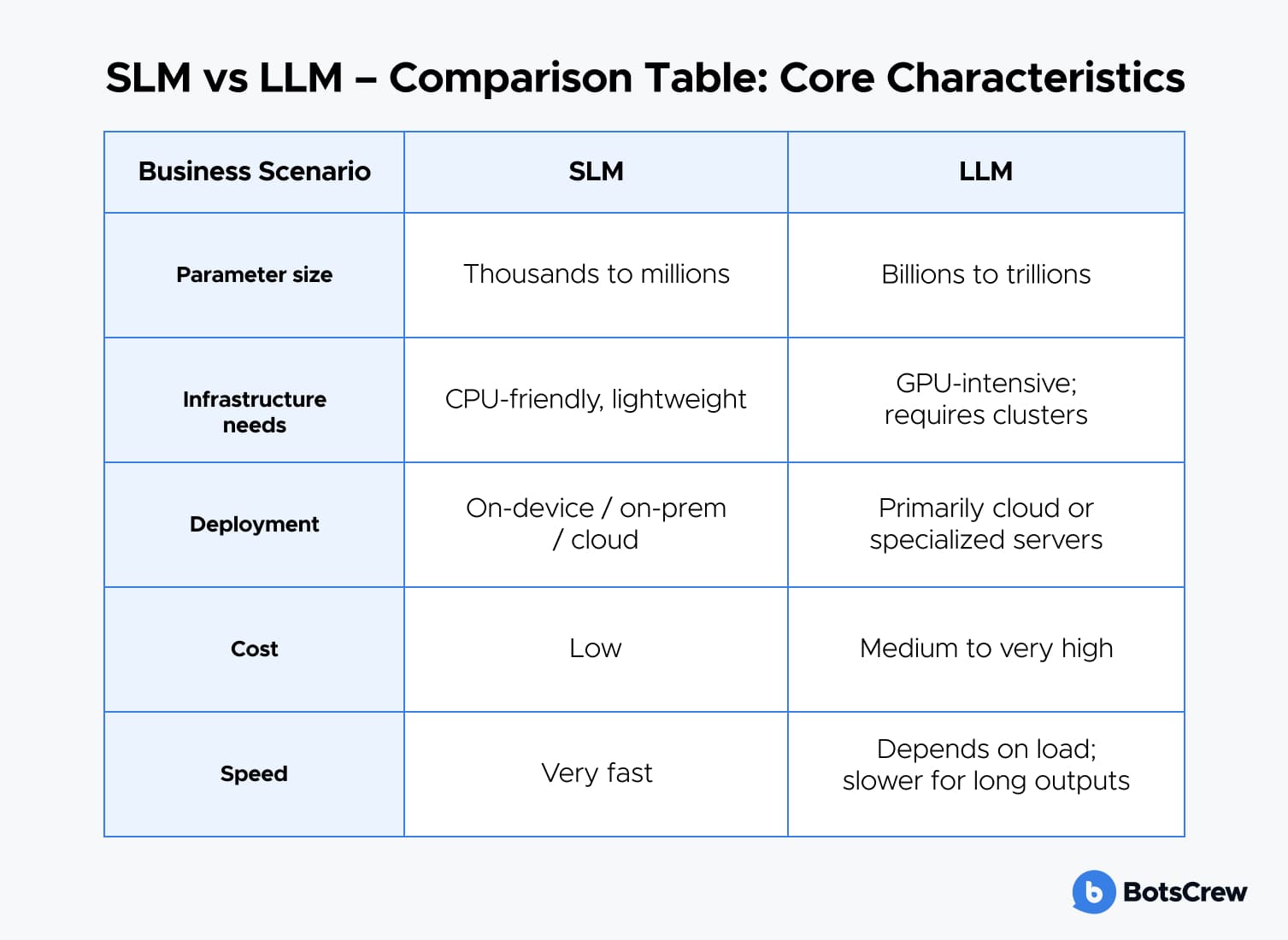

The primary distinction between SLMs and LLMs lies in parameter count:

- SLMs typically have from a few million to a few billion parameters, making them lightweight and easier to train and deploy.

- LLMs typically contain tens to hundreds of billions of parameters, and some are reported to reach trillions. GPT-3, for instance, has 175 billion. OpenAI has not disclosed GPT-4's parameter count, though it is widely believed to be larger.

Parameters are the numeric values (weights) that a model learns from training data and adjusts to improve its predictions or outputs. More parameters generally enable a model to capture more complex patterns and subtler relationships in language, which improves its versatility and performance, but they also increase the memory and compute needed to run it.
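To make the link between parameter count and infrastructure concrete, here is a rough back-of-the-envelope sketch. It estimates only the memory needed to hold a model's weights, and it assumes common numeric precisions (32-bit, 16-bit, 8-bit, and 4-bit). Real deployments also need memory for activations, caches, and runtime overhead, so treat the result as a lower bound. The function name `weight_memory_gb` and the 1-billion-parameter example are illustrative assumptions, not part of any library.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Memory needed for the weights alone, in gigabytes (10^9 bytes).

    This is a lower bound: activations, caches, and runtime overhead
    are not included.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# GPT-3's 175 billion parameters stored at 16-bit precision.
gpt3_gb = weight_memory_gb(175e9, "fp16")   # 350.0 GB

# A hypothetical 1-billion-parameter model quantized to 4 bits.
small_gb = weight_memory_gb(1e9, "int4")    # 0.5 GB

print(f"175B params @ fp16: {gpt3_gb} GB")
print(f"1B params @ int4:   {small_gb} GB")
```

The first figure is far beyond a single commodity GPU, while the second fits comfortably on a laptop or edge device, which is the practical reason smaller models are easier to host on private infrastructure.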

SLMs, while smaller, remain highly effective for specific, targeted tasks. They are faster to train, deploy more efficiently, and incur lower operational costs, making them ideal for organizations with budget or infrastructure constraints.

When SLMs make strategic sense:

✅ Limited budget but high need for automation

✅ Fast proof-of-concept development

✅ Regulated industries requiring on-prem or private-cloud setups

✅ Narrow, predictable use cases

✅ High-volume, low-complexity tasks where latency matters.

Small Language Models vs Large Language Models: Choosing the Right Path

There are three common enterprise strategies.

1. Start Small, Scale Fast (SLM → LLM)

Start with SLMs to validate ROI and organizational readiness. Move to LLMs once data maturity, governance, and operational workflows are established.

Ideal for:

- Mid-sized companies

- Sectors with strict compliance or domain-specific knowledge, such as finance, healthcare, and legal

- Cost-conscious organizations

- Early-stage AI initiatives.

Advantages:

- Low-risk experimentation

- Fast deployment

- Clear, measurable ROI baseline

- Efficient internal knowledge building.

2. Go Big From Day One (Direct LLM adoption)

Choose LLMs when the use case requires advanced reasoning or open-ended generation from the start.

Ideal for:

- Enterprises with strong technical resources

- Use cases involving reasoning, synthesis, or multimodal workflows

- Global customer bases

- High-complexity tasks (legal, finance, research, analytics).

Advantages:

- Maximum capability

- Future-proof for expanding use cases

- Supports multi-department rollout.

3. Hybrid Model (LLM + SLM)

This is an increasingly common pattern in modern enterprise AI architecture.

How it works:

- LLM handles complex reasoning and orchestration

- SLMs handle domain tasks securely within internal systems

- Sensitive data flows through SLMs only

- LLM acts as an “intelligent router + analyst + supervisor.”

Advantages:

- Reduced cloud spend

- Higher security and compliance

- Best mix of performance + control

- Scalability across business units.
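The hybrid flow described above can be sketched in a few lines. This is a minimal illustration, not a production design. It assumes a simple keyword and pattern check (`SENSITIVE_PATTERNS`, `is_sensitive`) stands in for a real data-classification policy, that the caller supplies a `needs_reasoning` flag, and that `local_slm` and `hosted_llm` are stub functions in place of real model endpoints. All of these names are hypothetical. The one rule it enforces is that anything flagged as sensitive is handled by the SLM and never reaches the LLM.

```python
import re
from typing import Callable, Tuple

# Hypothetical patterns standing in for a real data-classification policy.
SENSITIVE_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),  # SSN-like number
    re.compile(r"\b(patient|account number|salary)\b", re.IGNORECASE),
]


def is_sensitive(text: str) -> bool:
    """Return True if any sensitive pattern appears in the text."""
    return any(p.search(text) for p in SENSITIVE_PATTERNS)


def route(
    text: str,
    slm: Callable[[str], str],
    llm: Callable[[str], str],
    needs_reasoning: bool = False,
) -> Tuple[str, str]:
    """Pick a model for the request and return (model_name, response)."""
    # Sensitive data stays on the internal SLM, whatever the task.
    if is_sensitive(text):
        return "slm", slm(text)
    # Non-sensitive requests that need deep reasoning go to the LLM.
    if needs_reasoning:
        return "llm", llm(text)
    # Everything else defaults to the cheaper SLM.
    return "slm", slm(text)


# Stub model endpoints, for illustration only.
def local_slm(text: str) -> str:
    return f"[on-prem SLM] handled: {text}"


def hosted_llm(text: str) -> str:
    return f"[hosted LLM] handled: {text}"


print(route("Summarize patient record 123-45-6789", local_slm, hosted_llm, True))
print(route("Compare market trends across regions", local_slm, hosted_llm, True))
print(route("Tag this ticket as billing or technical", local_slm, hosted_llm))
```

Checking sensitivity before any other decision is the key design choice here: it keeps the compliance guarantee independent of how the complexity decision is made. In a fuller system, the LLM itself could decide routing for the non-sensitive traffic.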

Confused about your AI roadmap? Make confident, informed decisions about where to invest, what to build, and how to scale. Our experts will help you assess your current capabilities and shape a roadmap that aligns with your strategic priorities and budget.

Questions to Guide Your AI Model Selection: SLM, LLM, or Both?

Before selecting a model, leaders should evaluate the decision across three business lenses:

1. Strategic Fit

Ask: What problem are we solving, and how “intelligent” does the solution need to be?

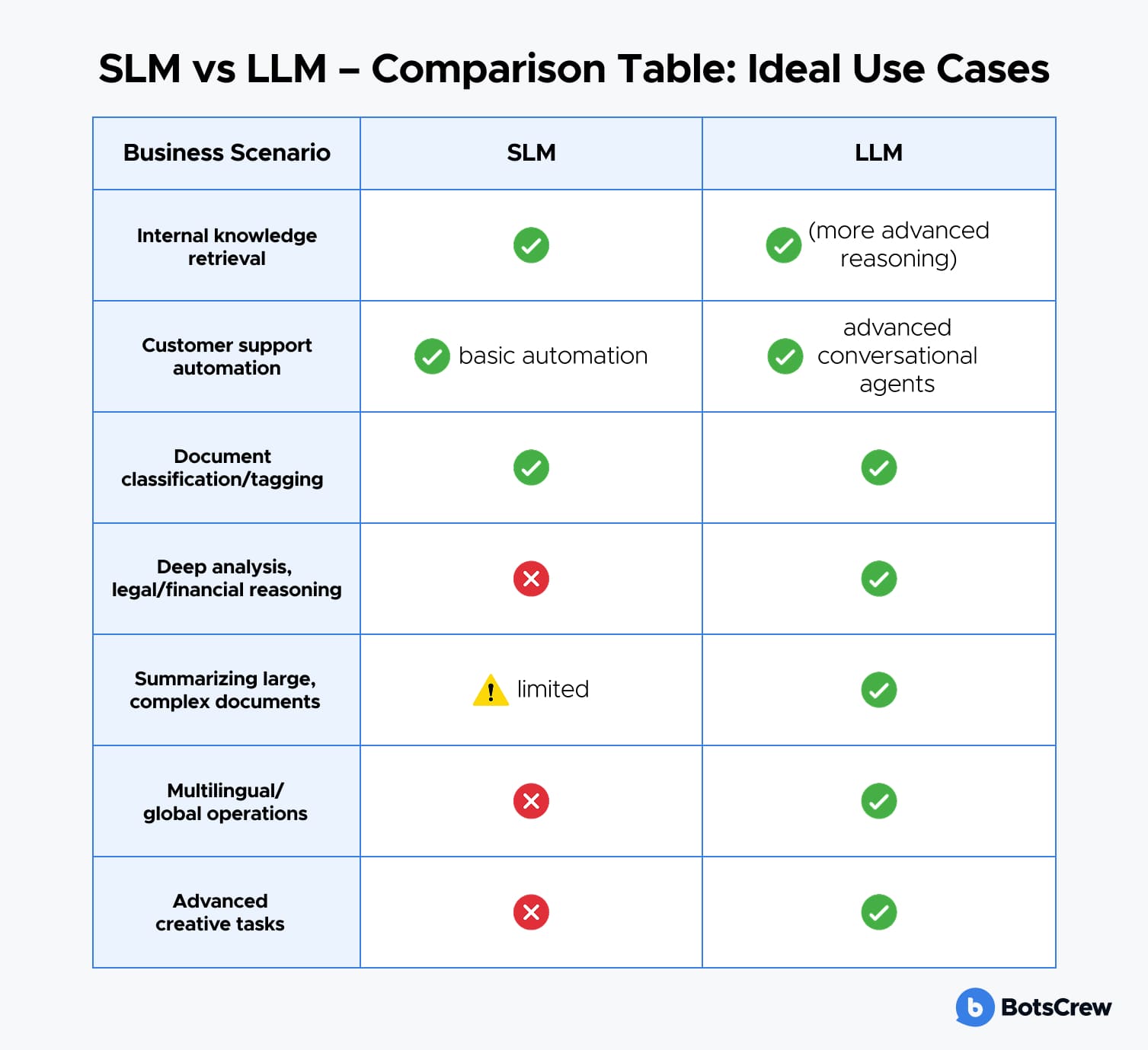

If the goal is to automate narrow, repeatable tasks (such as classification, routing, or extraction), an SLM is usually sufficient.

If the outcomes require deep reasoning, open-ended analysis, or complex language generation, an LLM becomes the strategic choice.

If the initiative is meant to scale across departments, you may require a hybrid architecture: low-cost SLMs for internal workloads + an LLM for advanced reasoning.
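For teams that like to see decision rules written down, the three guidelines above can be expressed as a small helper. This is only a sketch of the rules as stated in this article. The task labels and the function name `recommend_model` are illustrative assumptions, and a real assessment would weigh many more factors.

```python
# Task categories taken from the examples in the text above.
NARROW_TASKS = {"classification", "routing", "extraction"}
COMPLEX_TASKS = {"deep_reasoning", "open_ended_analysis", "complex_generation"}


def recommend_model(task: str, scales_across_departments: bool = False) -> str:
    """Return "SLM", "LLM", or "hybrid" based on the strategic-fit rules."""
    if task not in NARROW_TASKS | COMPLEX_TASKS:
        raise ValueError(f"Unknown task category: {task!r}")
    # Cross-department initiatives usually mix both workload types.
    if scales_across_departments:
        return "hybrid"
    # Narrow, repeatable tasks rarely need a large model.
    if task in NARROW_TASKS:
        return "SLM"
    # Deep reasoning and open-ended work favor a large model.
    return "LLM"


print(recommend_model("extraction"))
print(recommend_model("open_ended_analysis"))
print(recommend_model("routing", scales_across_departments=True))
```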

2. Operational Practicality

Ask: Can our existing infrastructure support this model — securely and reliably?

If you need complete data control (finance, healthcare, public sector) or want models running on private infrastructure, SLMs are the safer, more manageable option.

If your environment supports GPU clusters, modern cloud architectures, and strong DevOps, you can deploy LLMs for high-complexity tasks.

If expertise is limited or you're early in your AI maturity, begin with SLMs and expand when governance is in place.

3. Financial & Scalability Considerations

Ask: What cost structure makes sense for our usage patterns?

If you expect thousands or millions of frequent, short queries, SLMs offer dramatically lower inference cost.

If usage is high-value but low-frequency (R&D, legal analysis, financial modeling), LLM costs are easier to justify.

If future adoption will grow across business units, consider a layered approach:

- SLMs for internal automations

- LLMs for complex, cross-department intelligence.
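A quick calculation shows why usage patterns drive the cost decision. The sketch below multiplies query volume by tokens per query and by a price per million tokens. The two prices are placeholder values chosen only to illustrate the arithmetic, not real vendor rates, and the function name `monthly_inference_cost` is an assumption made for this example.

```python
def monthly_inference_cost(
    queries_per_month: int,
    tokens_per_query: int,
    price_per_million_tokens: float,
) -> float:
    """Estimated monthly spend: total tokens times the per-token price."""
    total_tokens = queries_per_month * tokens_per_query
    return total_tokens / 1_000_000 * price_per_million_tokens


# Placeholder prices per million tokens (illustrative, not vendor rates).
SLM_PRICE = 0.25
LLM_PRICE = 10.0

# High-volume, short queries: 5 million queries of 200 tokens each.
slm_high_volume = monthly_inference_cost(5_000_000, 200, SLM_PRICE)
llm_high_volume = monthly_inference_cost(5_000_000, 200, LLM_PRICE)

# Low-frequency, high-value work: 2,000 queries of 5,000 tokens each.
llm_low_volume = monthly_inference_cost(2_000, 5_000, LLM_PRICE)

print(f"High volume on SLM: ${slm_high_volume:,.2f}")
print(f"High volume on LLM: ${llm_high_volume:,.2f}")
print(f"Low volume on LLM:  ${llm_low_volume:,.2f}")
```

Under these assumed prices, the high-volume workload costs 40 times more on the LLM than on the SLM, while the low-frequency workload stays small in absolute terms even at the LLM price. Substitute your own volumes and negotiated rates before drawing conclusions.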

From Possibility to Practicality — With a Partner You Can Trust

Once you understand the landscape, the next step is aligning what's possible with what's practical for your company. Both SLMs and LLMs can deliver meaningful impact when they are integrated into real workflows, appropriately governed, and tailored to the realities of your sector and geography.

For companies with constrained budgets, the need to validate an idea quickly, or a narrow, well-defined use case, a Small Language Model offers a low-risk, cost-efficient entry point. SLMs let you move fast, experiment cheaply, and solve targeted problems without committing to heavy infrastructure.

When the challenge requires deep contextual understanding, sophisticated reasoning, multilingual capabilities, or large-scale knowledge retrieval, an LLM becomes the more strategic choice, giving teams the breadth and depth needed to support complex operations.

Regardless of which direction you choose, the competitive advantage comes from how well the model is embedded into your processes, not the size of the model itself. Working with an experienced AI development partner ensures your solution is compliant, scalable, and aligned with both market expectations and regulatory requirements.
