Unseen Threats: Navigating the Risks of Shadow AI & AI Sprawl

Can you truly trust the AI insights driving your critical decisions? Across enterprises, the rapid, unmanaged adoption of AI tools is creating 'Shadow AI' — unapproved systems processing sensitive data outside your control. This silent proliferation introduces profound risks: compromised data, compliance nightmares, and a fractured view of your business. In this article, we dissect the escalating dangers of Shadow AI and AI sprawl, and present a strategic blueprint for establishing a unified AI operating model and an AI Agent Control Tower to reclaim control and unlock sustainable value.

Three teams. Three AI tools. One simple question. Marketing asks AI what data can be shared externally. HR asks the same. Sales does too. Each gets a different answer — all confident, none authoritative. IT can't trace the source. Legal can't validate compliance. Finance sees multiple AI subscriptions solving the same problem. Leadership starts asking a dangerous question: can we trust what AI is telling us?

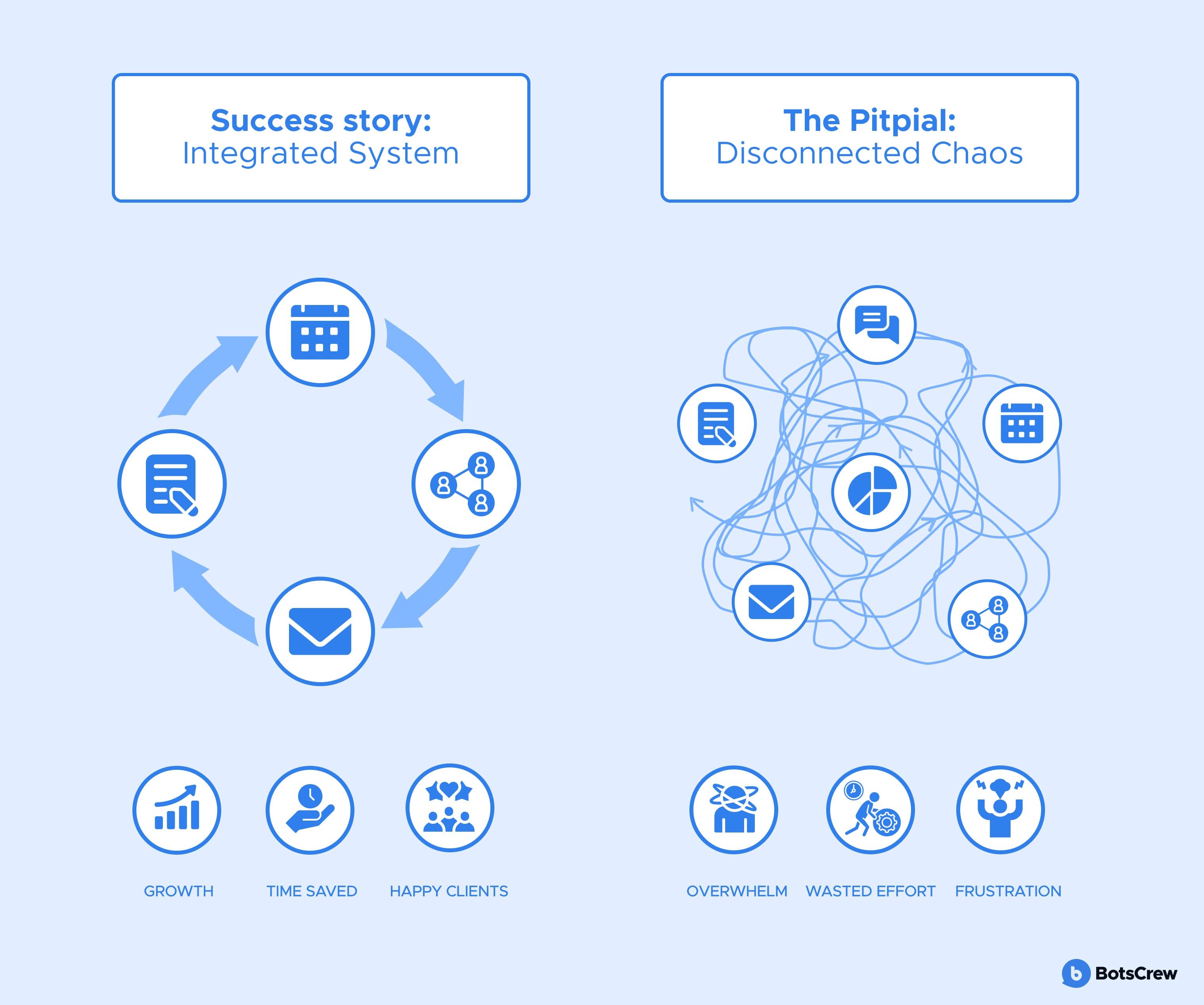

This is how AI tool chaos begins: not with failure, but with success that scales faster than governance. For executives, it's a decision-and-risk problem. For IT, it's an invisible architecture problem. AI didn't turn against the business; it was simply never given rules to operate by.

The repercussions of unmanaged AI are not theoretical. Recently, almost 5,000 GPT-5 users voiced their frustrations on Reddit, calling the changes a “downgrade.” Complaints included shorter replies, early usage limits, and restricted access to familiar, dependable versions they relied on.

This example underscores a critical C-level concern: when foundational AI tools shift without warning, the operational stability and strategic reliability of an enterprise are directly undermined. This article examines what Shadow AI is and what risks it carries, and makes the case for executive leadership to reclaim control and drive sustainable value from AI.

AI Sprawl and Shadow AI: Understanding the Interconnected Risks

As artificial intelligence adoption accelerates, organizations are increasingly facing two closely related — yet distinct — challenges: AI sprawl and Shadow AI. Understanding their nuances and interconnectedness is crucial for effective AI governance and risk management.

What is AI Sprawl?

AI Sprawl refers to the uncontrolled and unmanaged proliferation of AI models, tools, apps, and AI agents across a company's infrastructure. It occurs when various departments, teams, or even individual employees independently adopt and deploy AI without centralized oversight, coordination, or a unified strategy. This can include a wide array of AI assets, from off-the-shelf SaaS AI tools and open-source models to custom-built AI applications and intelligent agents. Key characteristics of AI sprawl include:

- Decentralized Adoption. AI tools are acquired and implemented by different business units without a central IT or AI governance team's knowledge or approval.

- Lack of Visibility. The business lacks a comprehensive inventory of all AI assets in use, making it difficult to track, monitor, and secure them.

- Redundancy and Duplication. Multiple teams may invest in similar AI solutions, leading to duplicated efforts, wasted resources, and inconsistent outcomes.

- Fragmented Management. Security, compliance, and operational policies are applied inconsistently, if at all, across the diverse AI ecosystem.

What is Shadow AI?

Shadow AI is a specific, and often more insidious, component of AI Sprawl. It refers to the use of AI tools and services within a company that are entirely unknown, unapproved, and unmanaged by the central IT, security, or governance teams.

This phenomenon begins innocuously, as individual employees independently adopt AI tools to address immediate operational challenges. The intent is rarely malicious; instead, it is driven by a desire for efficiency and problem-solving. However, this decentralized adoption often bypasses established protocols, creating significant vulnerabilities. For instance:

- Employees using public generative AI tools (e.g., ChatGPT, Gemini) to process sensitive company data for tasks like summarization, content creation, or code generation.

- Departments subscribing to AI-powered SaaS solutions without IT approval, potentially bypassing security reviews and data privacy agreements.

- Developers integrating open-source AI libraries into internal applications without proper vetting or security hardening.

What are the risks of Shadow AI?

— Heightened security risk. Shadow AI expands the organization's attack surface by introducing unvetted tools and uncontrolled data flows. Sensitive documents, customer data, and strategic information are often shared with public or third-party AI systems, making it difficult — or impossible — to track where data goes or how it is used. This significantly increases the risk of data breaches, IP theft, and competitive exposure.

— Greater compliance and regulatory exposure. Because Shadow AI operates outside formal oversight, compliance with regulatory frameworks such as GDPR, HIPAA, CCPA, and industry-specific requirements becomes extremely difficult. Companies lack auditable records of how data is processed, creating compliance blind spots. These gaps increase the risk of regulatory fines, legal action, and loss of customer trust — issues already present with AI sprawl, but far harder to manage when AI usage is hidden.

— Rising and often invisible costs. Shadow AI drives financial waste through duplicate tools, overlapping licenses, and redundant efforts. More critically, it increases the cost of security incidents. Studies show that Shadow AI alone can add an average of $670,000 to the cost of a data breach — excluding remediation, legal fees, reputational damage, and lost business.

— Erosion of trust and data integrity. Uncontrolled AI usage leads to inconsistent outputs, questionable data quality, and biased or conflicting decisions. When AI tools deliver different answers — or when a breach is traced back to an unapproved system — trust erodes quickly. This affects not only internal confidence in data but also external perceptions among customers, partners, investors, and potential hires.

— Loss of visibility and control. At its core, Shadow AI represents a loss of control over critical assets and processes. When leadership cannot clearly see which AI tools are in use, what data they access, or how they influence decisions, effective governance becomes impossible. This lack of visibility undermines strategic decision-making and weakens the foundation of responsible AI adoption.

📝 AI sprawl is the uncontrolled growth of AI tools across an organization; Shadow AI is the unapproved and invisible portion of it.

Who Experiences the Consequences the Most

Mid-sized organizations and fast-growing enterprises often experience the consequences of Shadow AI and AI sprawl most acutely. Unlike large corporations with formal AI governance frameworks and centralized procurement controls, these companies frequently lack precise oversight mechanisms. As a result, teams independently adopt AI tools to solve immediate problems — prioritizing speed and convenience over alignment, compliance, and long-term strategy.

The financial impact extends far beyond duplicate subscriptions. Redundant spending accumulates. Security incidents become more likely. Regulatory exposure increases. Rework and retraining dilute promised efficiency gains. Strategic AI initiatives stall because resources are consumed managing uncontrolled adoption.

How to Detect Shadow AI: 5 Key Phases

How can companies prevent Shadow AI, and what should they do once they detect unauthorized AI tools in use? Detection typically involves monitoring network traffic for unauthorized API calls to AI providers, auditing expense reports for unapproved AI subscriptions, and using cloud access security brokers (CASBs) to gain visibility into SaaS usage. The five phases below organize that work.

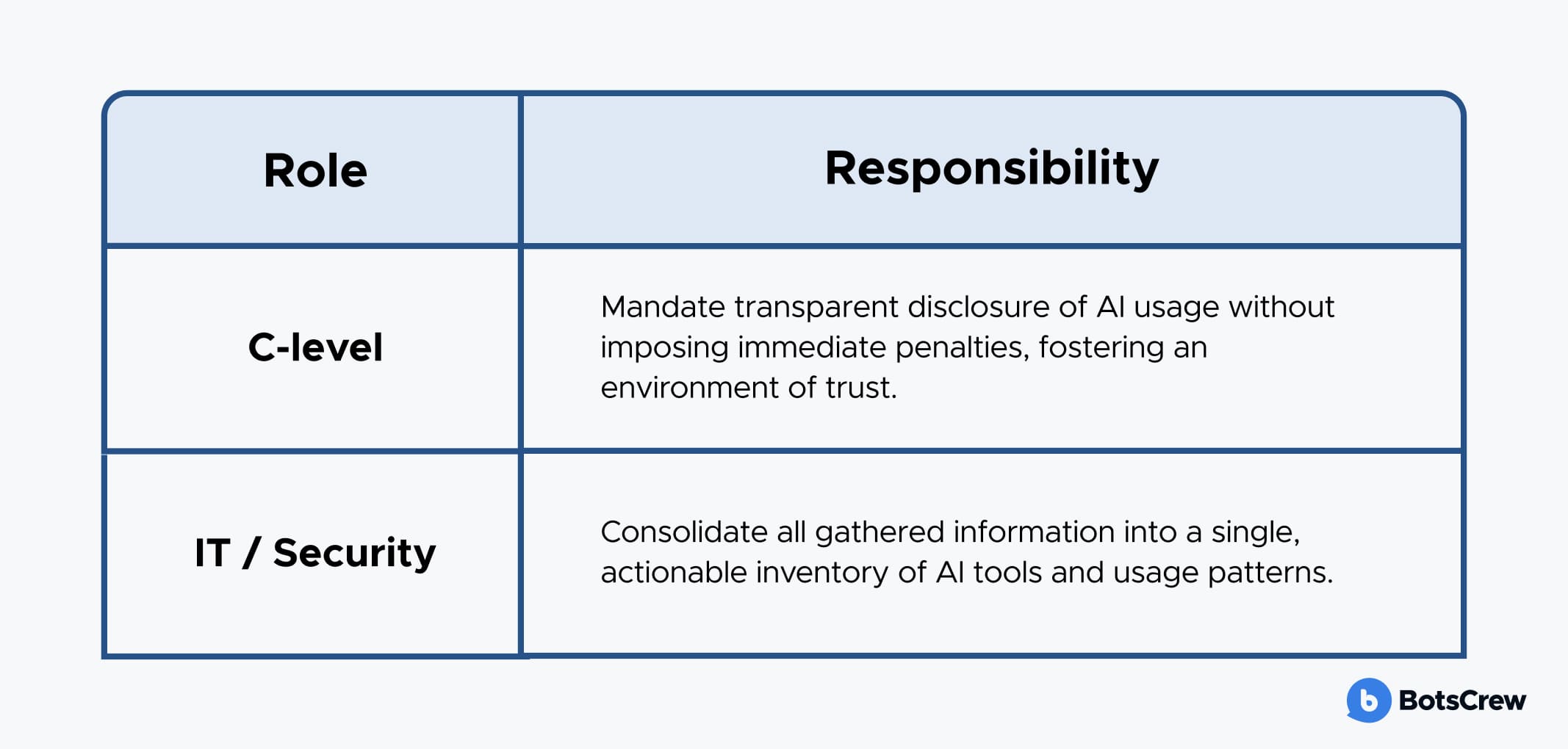

Phase 1: Establishing Baseline Visibility

The foundational step in mitigating Shadow AI is gaining a clear understanding of where AI is currently being used across the enterprise. Organizations should initiate several key activities to establish this baseline:

Enterprise-Wide AI Usage Survey. Deploy a survey focused on tasks performed with AI and the types of data being input into AI tools, rather than merely listing tools. This approach helps uncover usage patterns that might otherwise be overlooked.

Departmental AI Tool Inventory. Mandate department heads to compile a list of all AI tools employed by their teams. This must include free versions, trial subscriptions, and any other AI-driven services, regardless of their official procurement status.

Expense Report Review. Conduct a thorough review of expense reports to identify AI-related subscriptions, usage-based charges, and other expenditures that may indicate the presence of unrecorded AI tools.

Indicators of Shadow AI at this phase include:

- The absence of a centralized, comprehensive inventory of AI tools.

- AI tools appearing in financial records (e.g., expense data) but not within official IT asset management or procurement inventories.

- Discrepancies where business units describe AI usage that IT and security teams are unaware of.
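The expense review and inventory comparison described above can be sketched in a few lines of Python. This is a minimal illustration, not a definitive implementation: the keyword list, the `ExpenseLine` structure, and the sample vendors are assumptions made for the example, and a real review would work from the organization's own expense export and asset inventory.

```python
from dataclasses import dataclass

# Hypothetical keyword list; a real review would maintain its own vendor list.
AI_VENDOR_KEYWORDS = ("openai", "anthropic", "midjourney", "copilot")


@dataclass(frozen=True)
class ExpenseLine:
    department: str
    vendor: str
    amount: float


def find_unrecorded_ai_spend(expenses, approved_inventory):
    """Return expense lines whose vendor looks AI-related but is missing from the inventory."""
    approved = {name.lower() for name in approved_inventory}
    flagged = []
    for line in expenses:
        vendor = line.vendor.lower()
        looks_like_ai = any(keyword in vendor for keyword in AI_VENDOR_KEYWORDS)
        if looks_like_ai and vendor not in approved:
            flagged.append(line)
    return flagged


# Illustrative data only.
expenses = [
    ExpenseLine("Marketing", "OpenAI", 240.0),
    ExpenseLine("HR", "Midjourney", 30.0),
    ExpenseLine("Sales", "Office Supplies Co", 85.0),
]
approved_inventory = ["OpenAI"]

flagged = find_unrecorded_ai_spend(expenses, approved_inventory)
for line in flagged:
    print(f"{line.department}: {line.vendor} (${line.amount:.2f}) not in inventory")
```

Keyword matching is deliberately crude. It will miss vendors that are not on the list, so it complements the survey and departmental inventory rather than replacing them.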

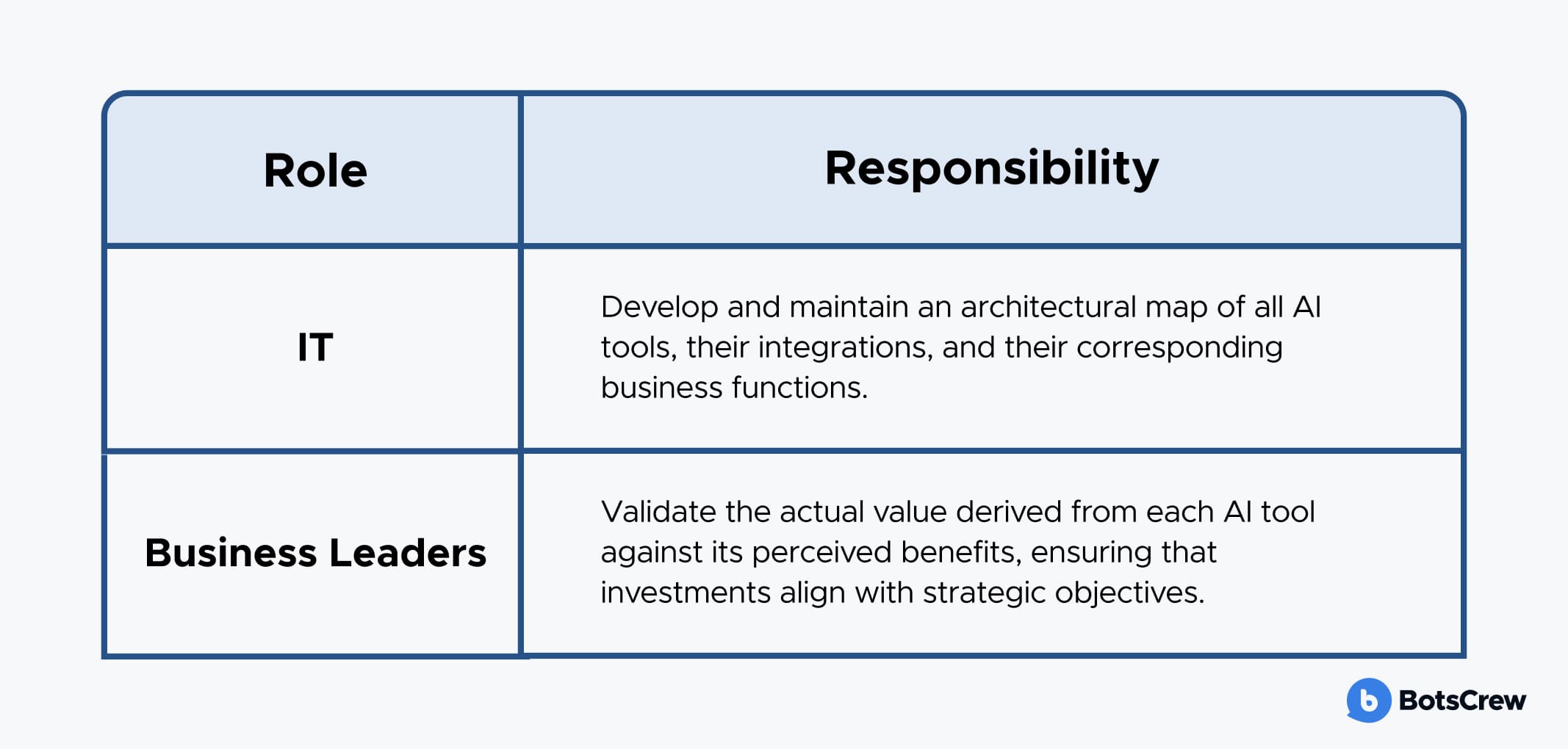

Successful execution of Phase 1 requires clear delineation of responsibilities:

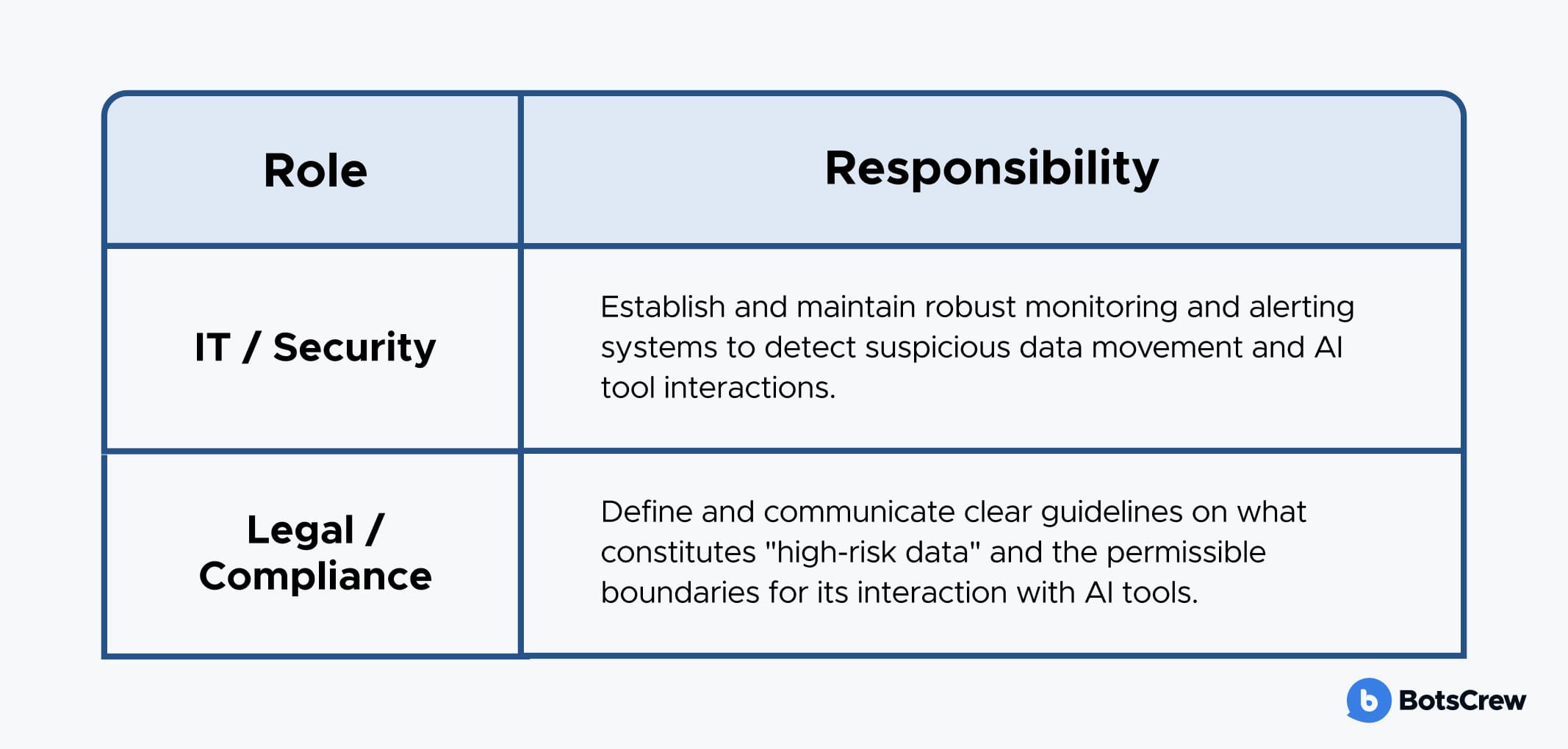

Phase 2: Monitoring Data Movement and Access Patterns

Once a baseline understanding of AI usage is established, the next critical step is to monitor how data interacts with these tools, particularly focusing on sensitive information that might be exposed to unapproved AI platforms.

Outbound Traffic Analysis. Implement network monitoring to analyze outbound traffic for uploads or data transfers to known public AI platforms. This can help identify instances where employees are feeding internal data into external AI services.

Data Loss Prevention (DLP) Review. Enhance and review DLP alerts specifically tied to copy-paste operations or file uploads. These alerts can signal attempts to transfer sensitive information from internal systems to external AI interfaces.

Corporate Device Interaction Analysis. Identify and analyze frequent interactions with browser-based AI tools originating from corporate devices. This can reveal patterns of usage that might indicate reliance on unsanctioned AI for daily tasks.

Key indicators during this monitoring phase include:

- Access to sensitive files immediately preceding or coinciding with interactions with external AI tools.

- Repeated instances of copy-pasting internal documents or proprietary information into external AI applications.

- Circumvention of established data classification policies during interactions with AI tools.
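As a rough sketch of outbound traffic analysis, the snippet below totals bytes uploaded per user to a watch list of public AI platforms. The record format `(user, host, bytes_out)`, the domain list, and the threshold are all assumptions for illustration; actual proxy or CASB logs will have their own schema and would need to be parsed first.

```python
from collections import defaultdict

# Illustrative watch list; which platforms to include is an assumption.
AI_DOMAINS = {"chatgpt.com", "gemini.google.com", "claude.ai"}
UPLOAD_THRESHOLD_BYTES = 100_000  # hypothetical alerting threshold


def is_ai_host(host):
    """True if host is a watched domain or one of its subdomains."""
    host = host.lower()
    return any(host == d or host.endswith("." + d) for d in AI_DOMAINS)


def summarize_ai_uploads(records):
    """Total bytes sent to watched AI hosts per user, keeping users at or above the threshold."""
    totals = defaultdict(int)
    for user, host, bytes_out in records:
        if is_ai_host(host):
            totals[user] += bytes_out
    return {user: total for user, total in totals.items() if total >= UPLOAD_THRESHOLD_BYTES}


# Simplified, made-up log records: (user, destination host, bytes uploaded).
records = [
    ("alice", "chatgpt.com", 80_000),
    ("alice", "chatgpt.com", 40_000),
    ("bob", "intranet.example.com", 900_000),
    ("carol", "claude.ai", 5_000),
]

heavy_uploaders = summarize_ai_uploads(records)
print(heavy_uploaders)
```

Volume alone does not prove that sensitive data left the organization. A result like this is a prompt for the DLP review and a conversation with the user, not a verdict.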

Ownership and Responsibilities

Effective data monitoring requires collaboration between technical and legal teams.

Phase 3: Identifying Overlapping and Redundant AI Use Cases

Beyond security and compliance, Shadow AI often leads to inefficiencies through tool sprawl and functional duplication. This phase focuses on rationalizing AI tool usage across the organization. To achieve this, organizations should undertake the following:

AI Tool-to-Business Use Case Mapping. Create a comprehensive map linking identified AI tools to their specific business use cases. This provides clarity on the purpose and function of each tool.

Redundancy Identification. Actively identify multiple AI tools that are being used to solve the same problem or fulfill identical functions across different departments or teams.

Comparative Analysis. Conduct a comparative analysis of redundant tools, evaluating their pricing models, access levels, data exposure risks, and overall effectiveness to determine the most suitable solution.

Indicators of tool sprawl and redundancy include:

- The presence of three or more distinct AI tools being utilized to accomplish the same task or address the same business need.

- A lack of standardized prompt engineering guidelines or consistent output validation processes across similar AI applications.

- Inconsistent or contradictory answers generated by different AI tools when presented with the same internal query.
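The tool-to-use-case mapping and the "three or more tools" indicator lend themselves to a simple grouping. The sketch below assumes the inventory from Phase 1 has been reduced to `(department, tool, use_case)` rows; the tool names and use cases are placeholders.

```python
from collections import defaultdict

REDUNDANCY_THRESHOLD = 3  # "three or more distinct AI tools" for the same task


def find_redundant_use_cases(tool_usage):
    """Group tools by use case and return the use cases served by too many distinct tools."""
    tools_by_use_case = defaultdict(set)
    for _department, tool, use_case in tool_usage:
        tools_by_use_case[use_case].add(tool)
    return {
        use_case: sorted(tools)
        for use_case, tools in tools_by_use_case.items()
        if len(tools) >= REDUNDANCY_THRESHOLD
    }


# Placeholder rows: (department, tool, use case).
tool_usage = [
    ("Marketing", "Tool A", "meeting summarization"),
    ("HR", "Tool B", "meeting summarization"),
    ("Sales", "Tool C", "meeting summarization"),
    ("Sales", "Tool A", "email drafting"),
    ("HR", "Tool D", "email drafting"),
]

redundant = find_redundant_use_cases(tool_usage)
print(redundant)
```

The grouping only works if use cases are labeled consistently, which is one reason the mapping step should come before the redundancy check.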

Ownership and Responsibilities

This phase requires close cooperation between IT and business leadership.

Phase 4: Assessing Output Consistency and Trustworthiness

Shadow AI can manifest as inconsistent or unreliable outputs, leading to flawed decision-making. This phase focuses on evaluating the quality and trustworthiness of AI-generated content:

Cross-Team AI Querying. Instruct multiple teams to pose the same business-critical questions to the AI tools they utilize. This allows for a direct comparison of responses.

Output Discrepancy Review. Systematically review differences in the answers, tone, and recommendations provided by various AI tools. Significant variations can indicate unmanaged AI usage.

Authoritative Source Verification. Verify whether AI outputs consistently reference or align with authoritative internal sources, policies, or documentation. Discrepancies here are a strong indicator of untrustworthy AI.

Signs of inconsistent or untrustworthy AI outputs include:

- Conflicting or contradictory answers provided by different AI tools or teams to identical questions.

- AI outputs that cannot be traced back to a verifiable source of truth or factual basis.

- AI responses that directly contradict official company policies, established documentation, or regulatory requirements.
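One way to triage the cross-team querying exercise is to compare the collected answers pairwise and surface the pairs that diverge most. The sketch below uses `difflib.SequenceMatcher` from the Python standard library, which measures textual similarity only. The threshold and the sample answers are assumptions, and two answers that mean the same thing in different words would still be flagged, so the output is a shortlist for human review rather than a judgment.

```python
from difflib import SequenceMatcher
from itertools import combinations

SIMILARITY_THRESHOLD = 0.6  # hypothetical cut-off; tune against real answers


def find_divergent_answers(answers):
    """Return (team_a, team_b, similarity) for every pair of answers below the threshold."""
    divergent = []
    for team_a, team_b in combinations(sorted(answers), 2):
        score = SequenceMatcher(None, answers[team_a], answers[team_b]).ratio()
        if score < SIMILARITY_THRESHOLD:
            divergent.append((team_a, team_b, score))
    return divergent


# Made-up answers to the same question: "What data can be shared externally?"
answers = {
    "Marketing": "Customer data may not be shared externally.",
    "HR": "Customer data may not be shared externally.",
    "Sales": "Yes, share anything with partners.",
}

divergent = find_divergent_answers(answers)
for team_a, team_b, score in divergent:
    print(f"{team_a} vs {team_b}: similarity {score:.2f}")
```

Flagged pairs should then be checked against the authoritative internal source, since similarity between two tools says nothing about whether either answer is correct.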

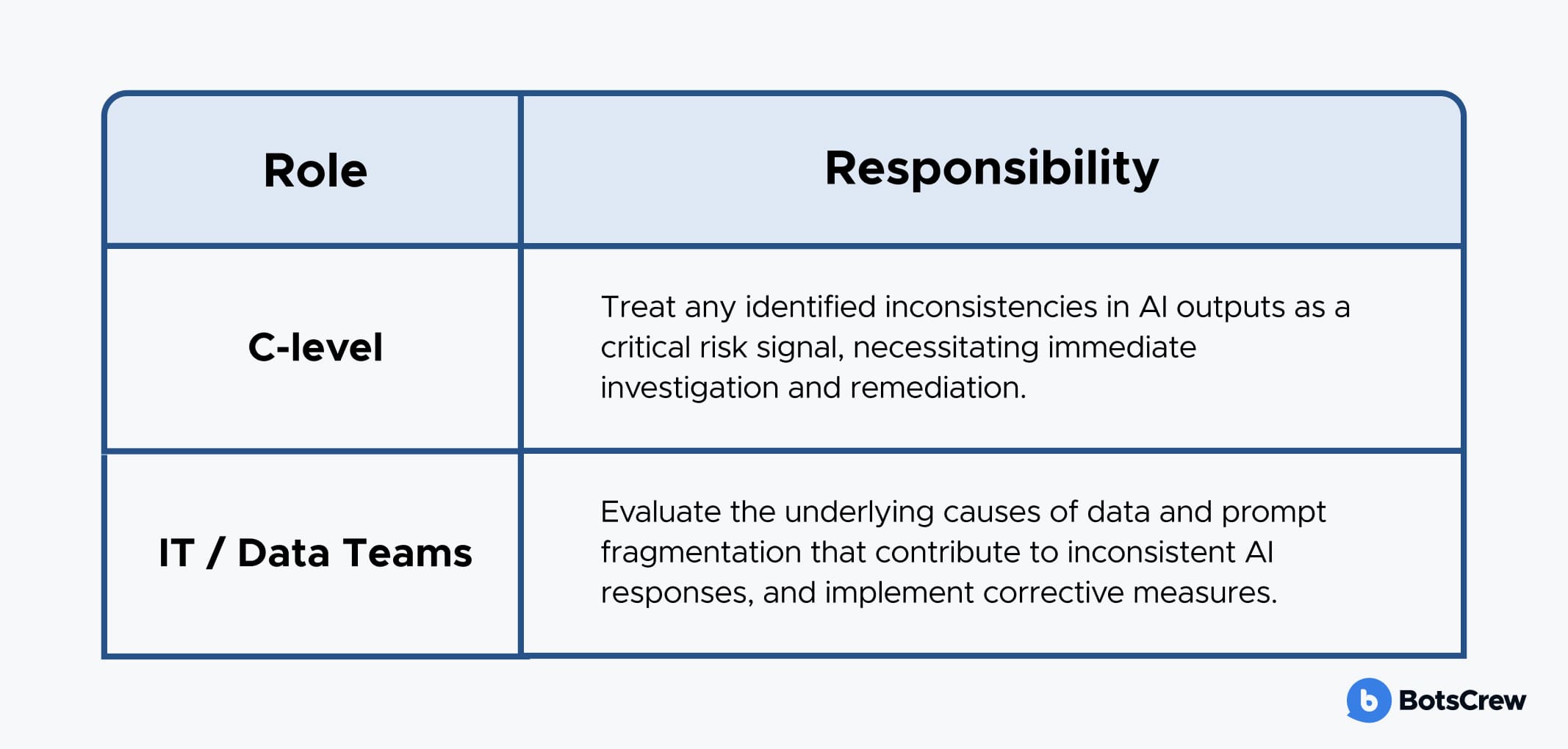

Ownership and Responsibilities

This phase requires executive oversight and technical expertise.

Phase 5: Assigning Accountability and Continuous Oversight

The final phase focuses on establishing a robust governance framework to prevent the re-emergence of Shadow AI and ensure ongoing compliance and effective management. To embed continuous oversight, organizations should:

Clear AI Governance Ownership. Assign explicit ownership for AI governance, moving beyond vague notions of "shared responsibility." A dedicated individual or team should be accountable.

Streamlined Approval Paths. Introduce lightweight, efficient approval processes for adopting new AI tools. This encourages official channels while maintaining agility.

Periodic AI Usage Reviews. Integrate regular AI usage reviews into existing security and vendor review cycles. This ensures that AI governance is an ongoing process, not a one-time event.

Indicators of governance failure and potential Shadow AI re-emergence include:

- The appearance of new AI tools within the organization without undergoing the established review and approval processes.

- Ambiguous or disputed ownership for AI-related decisions, leading to a lack of clear accountability.

- Evidence that AI governance policies exist only on paper but are not effectively implemented or enforced in practice.
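Periodic reviews are easier to sustain when overdue items surface automatically. The following sketch flags tools that are in use without approval or whose last review is older than a chosen interval. The `ToolRecord` fields, the 180-day cadence, and the sample tools are assumptions for illustration only.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

REVIEW_INTERVAL = timedelta(days=180)  # hypothetical review cadence


@dataclass
class ToolRecord:
    name: str
    approved: bool
    last_reviewed: Optional[date]  # None means the tool was never reviewed


def governance_gaps(tools, today):
    """Map each tool that needs attention to the reason it was flagged."""
    gaps = {}
    for tool in tools:
        if not tool.approved:
            gaps[tool.name] = "in use without approval"
        elif tool.last_reviewed is None or today - tool.last_reviewed > REVIEW_INTERVAL:
            gaps[tool.name] = "review overdue"
    return gaps


# Placeholder records.
tools = [
    ToolRecord("summarizer", approved=True, last_reviewed=date(2025, 1, 10)),
    ToolRecord("resume-screener", approved=False, last_reviewed=None),
    ToolRecord("code-assistant", approved=True, last_reviewed=date(2024, 3, 1)),
]

gaps = governance_gaps(tools, today=date(2025, 3, 1))
print(gaps)
```

Passing `today` explicitly keeps the check reproducible, which helps when the same report is attached to a security or vendor review cycle.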

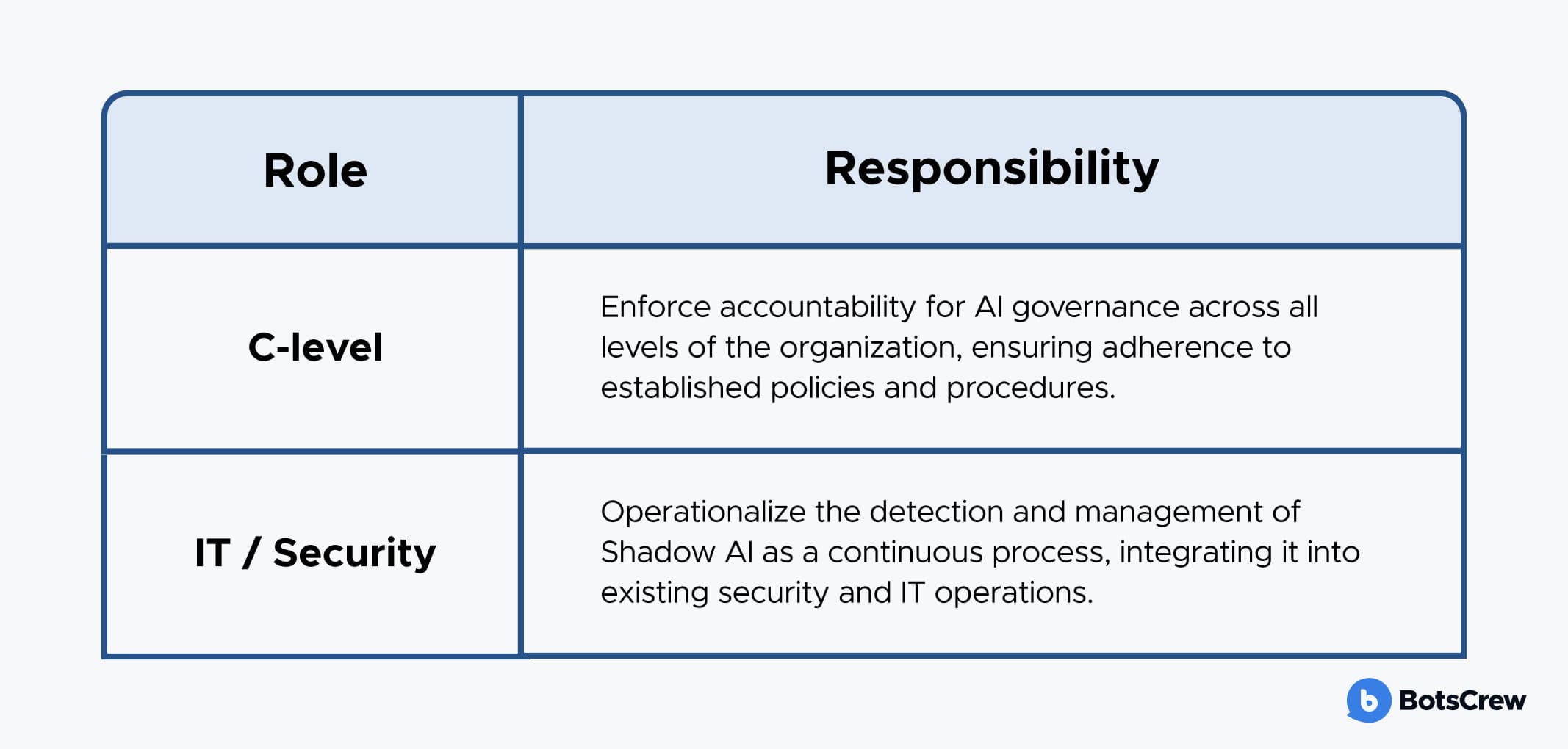

Ownership and Responsibilities

Sustained control over Shadow AI requires strong leadership and operational integration.

The Path Forward: Closing the Executive Blind Spot with an AI Control Tower

To regain control, enterprises need more than policies or tool restrictions. They need a centralized oversight layer: an AI Control Tower — a strategic governance framework designed to monitor, manage, and align all AI tools and agents operating across the organization. This is how executives move from reactive discovery to proactive leadership.

Complete Visibility & Lifecycle Control

Without a Control Tower, AI tools and agents are adopted across marketing, HR, operations, and R&D without centralized awareness, and no consolidated inventory exists. With one, a unified registry tracks every AI system, including its purpose, ownership, data access, dependencies, and status. Leaders gain a real-time map of their AI ecosystem, enabling structured deployment, auditing, and retirement.
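To make the registry idea concrete, here is a minimal in-memory sketch. The record fields mirror the attributes named above (purpose, ownership, data access, dependencies, status); the class names, status values, and sample entries are hypothetical, and a production registry would sit on a database with access controls and audit logging.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    UNDER_REVIEW = "under_review"
    ACTIVE = "active"
    RETIRED = "retired"


@dataclass
class AISystem:
    name: str
    purpose: str
    owner: str
    data_access: set = field(default_factory=set)
    dependencies: list = field(default_factory=list)
    status: Status = Status.UNDER_REVIEW


class AIRegistry:
    """Single inventory of AI systems, keyed by name."""

    def __init__(self):
        self._systems = {}

    def register(self, system):
        if system.name in self._systems:
            raise ValueError(f"{system.name} is already registered")
        self._systems[system.name] = system

    def retire(self, name):
        self._systems[name].status = Status.RETIRED

    def active_with_access_to(self, data_category):
        """Names of active systems that can read the given data category."""
        return sorted(
            s.name
            for s in self._systems.values()
            if s.status is Status.ACTIVE and data_category in s.data_access
        )


registry = AIRegistry()
registry.register(AISystem("support-bot", "answer customer tickets", "Support",
                           data_access={"customer_data"}, status=Status.ACTIVE))
registry.register(AISystem("lead-scorer", "rank inbound leads", "Sales",
                           data_access={"customer_data", "crm"}, status=Status.ACTIVE))
registry.retire("lead-scorer")

print(registry.active_with_access_to("customer_data"))
```

A query such as `active_with_access_to("customer_data")` is the kind of question an audit or incident response typically starts with, which is why data access is stored per system rather than inferred later.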

Governance & Policy Enforcement

Without a Control Tower, decentralized AI use creates unclear accountability, compliance exposure, and data privacy risks. With one, centralized AI governance and clear policies define what AI systems can access, generate, and automate. Role-based permissions, data governance rules, and ethical standards are enforced consistently, with the ability to intervene if violations occur.

Real-Time Monitoring & Risk Detection

Without a Control Tower, issues are discovered only after security, financial, or reputational damage occurs. With one, continuous monitoring provides visibility into AI behavior, outputs, and resource consumption. Anomalies are flagged early, enabling proactive intervention instead of post-incident investigation.

Cost & Resource Discipline

Without a Control Tower, duplicate subscriptions, redundant capabilities, and uncontrolled LLM usage inflate spending without clear ROI. With one, central oversight tracks utilization and optimizes resource allocation, ensuring AI investments remain economically sustainable and strategically aligned.

Human-in-the-Loop (HITL) Integration

High-impact decisions — such as approving financial transactions, generating client-facing proposals, screening job candidates, or responding to customer complaints at scale — may already be partially automated without executive awareness. In isolation, each use case may seem operational. Collectively, they shape revenue, brand reputation, compliance exposure, and workforce dynamics.

Clear escalation pathways define which AI-driven actions require human validation. For example:

- Financial thresholds that trigger CFO review

- Customer communications that require brand or legal approval

- Hiring or termination recommendations that mandate HR oversight

- Pricing or contractual changes that require executive sign-off
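The escalation pathways listed above can be expressed as a small routing function. This is a sketch under stated assumptions: the action kinds, the role labels, and the 10,000 threshold are invented for the example, and real rules would come from finance, legal, and HR policy.

```python
from dataclasses import dataclass
from typing import Optional

CFO_REVIEW_THRESHOLD = 10_000.0  # hypothetical financial threshold


@dataclass
class AIAction:
    kind: str            # e.g. "payment", "customer_message", "hiring", "pricing"
    amount: float = 0.0  # only meaningful for financial actions


def required_approver(action: AIAction) -> Optional[str]:
    """Return the role that must validate the action, or None if no rule applies."""
    if action.kind == "payment" and action.amount >= CFO_REVIEW_THRESHOLD:
        return "CFO"
    if action.kind == "customer_message":
        return "Brand/Legal"
    if action.kind in ("hiring", "termination"):
        return "HR"
    if action.kind in ("pricing", "contract_change"):
        return "Executive"
    return None


print(required_approver(AIAction("payment", amount=25_000)))
print(required_approver(AIAction("payment", amount=500)))
print(required_approver(AIAction("hiring")))
```

Returning `None` for unmatched actions means they proceed without review. An organization that prefers to fail closed could instead route unknown action kinds to a default reviewer.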

Battling Innovation — or Battling Your Own AI Ecosystem?

Leaders should not be afraid of AI. What should concern them is losing visibility and control over how AI is being used inside their company. The real risk is not the technology itself; it is unmanaged adoption.

When teams independently introduce tools without oversight, AI sprawl begins to form. Systems become fragmented, data flows become unclear, and accountability weakens. Over time, executives find themselves reacting to surprises instead of steering strategy.

As AI adoption accelerates, the gap widens between what leadership needs — reliability, governance, alignment — and what decentralized usage delivers: inconsistency, duplicated spend, and hidden compliance exposure.

The question isn't whether to use AI. It's whether you're leading its adoption or discovering it after the risks have already materialized.