LLM Comparison: Choosing the Right Model for Your Use Case

Choosing the right Large Language Model (LLM) for your business can feel like navigating a maze of endless options, technical jargon, and hidden trade-offs. Should you invest in a generalist model or a specialized one tailored to your industry? How do you balance performance with cost and ensure the model integrates seamlessly with your existing systems? In this article, we'll address these challenges head-on, offering a clear LLM comparison and actionable insights to help you make a confident, informed decision.

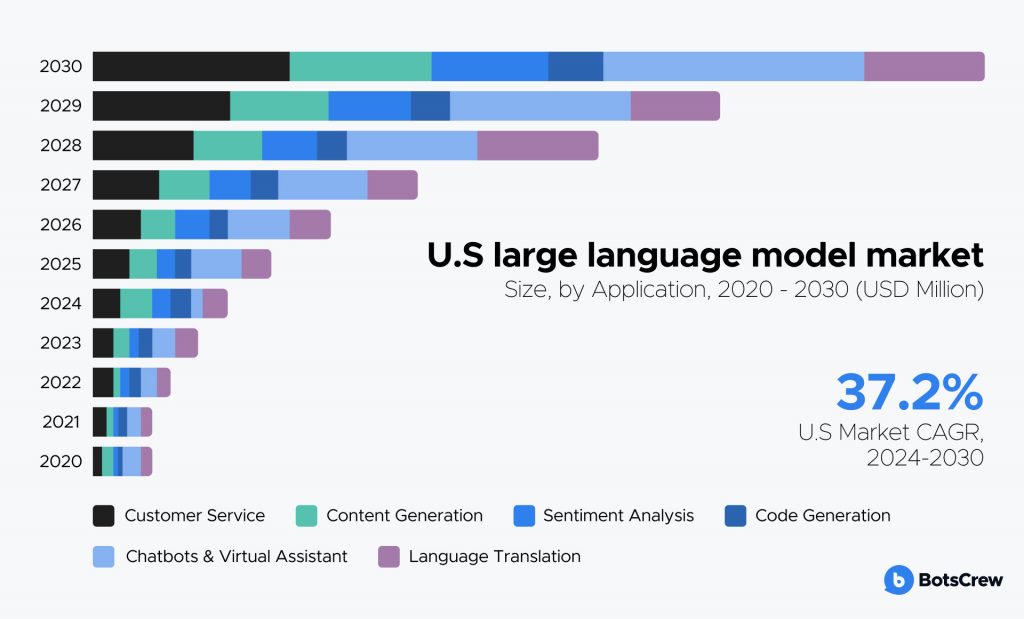

The global Large Language Model (LLM) market is experiencing explosive growth. According to Grand View Research, its market size was valued at USD 4.35 billion in 2023 and is expected to expand at a remarkable compound annual growth rate (CAGR) of 35.9% from 2024 to 2030.

This review offers a detailed LLM comparison and covers the key factors that should guide your decision-making. Whether you are automating customer service, generating insights from unstructured data, or enhancing creative workflows, this guide will help you cut through the complexity and find the best fit for your business needs.

We've crafted this LLM comparison guide to be accessible and non-technical, recognizing that your focus lies in using these models effectively rather than building them from scratch.

Why Choosing the Right LLM Matters

Large Language Models have revolutionized the way businesses handle language-related tasks, from customer support automation to content generation and data analysis. However, selecting the right LLM is not a one-size-fits-all decision. The choice of model significantly impacts business outcomes, as performance, task specificity, and deployment considerations vary widely across LLMs. Here is why making an informed decision is vital:

1️⃣ Architecture Variations

The architecture of an LLM fundamentally shapes its capabilities and performance:

Transformer-based models. Most LLMs, such as OpenAI's GPT series, Google's PaLM, and Meta's LLaMA, use the transformer architecture. This design uses attention mechanisms to weigh how strongly each word in a sentence or document relates to every other word. The architecture scales well, enabling models with billions of parameters. By processing text in context, transformers excel in tasks like language understanding, text generation, and summarization.
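For readers curious about what "attention" actually computes, here is a minimal pure-Python sketch of scaled dot-product attention, the operation at the heart of the transformer. It assumes a single attention head with no learned projection matrices, masking, or batching, so it illustrates the idea rather than how GPT, PaLM, or LLaMA are implemented internally.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Single-head attention: every query attends to every key.

    queries and keys are lists of vectors of dimension d_k; values are vectors
    of dimension d_v. Returns one output vector per query plus the weights.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to each key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy example: three "tokens" with 2-dimensional embeddings used as Q, K, and V
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = scaled_dot_product_attention(tokens, tokens, tokens)
```

Each query ends up with a set of weights that sum to 1, and its output is the weighted average of the value vectors, so the words most similar to the query contribute the most.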

Retrieval-augmented models. Retrieval-augmented models connect a language model to external sources, such as databases, document stores, or search engines, and pull in relevant information when a question is asked. This grounds responses in retrieved facts and improves their quality. Examples include DeepMind's RETRO, which retrieves passages from a large text database, and OpenAI's retrieval plugin for ChatGPT, which queries external document collections. Because knowledge can live in the external source rather than only in the model's parameters, these systems can give more current answers and can match larger models with fewer parameters.
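The retrieve-then-generate pattern can be sketched in a few lines of Python. This is an illustrative assumption, not how RETRO or any OpenAI plugin works internally: production systems usually rank passages with vector embeddings and a vector database, whereas this sketch uses simple word overlap, and the final prompt would be sent to whichever LLM you use (that call is not shown).

```python
import re

def tokenize(text):
    """Lowercase word tokens; a crude stand-in for real embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, documents, k=2):
    """Return the k documents sharing the most words with the query."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]

def build_augmented_prompt(query, documents, k=2):
    """Prepend the retrieved passages to the user's question before calling an LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )

# Hypothetical knowledge base
docs = [
    "Our refund window is 30 days from delivery.",
    "Support is available Monday to Friday.",
    "Shipping to Canada takes 5 business days.",
]
prompt = build_augmented_prompt("How many days is the refund window?", docs, k=1)
```

Because the answer comes from the retrieved passage, updating the knowledge base updates the answers without retraining the model.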

Multimodal models. Multimodal models are designed to handle multiple types of input — text, images, and audio — enabling richer interactions and more versatile applications. With models like OpenAI's GPT-4 (which includes image capabilities) and Google DeepMind’s Gemini, these systems can process and generate outputs from different data formats, making them suitable for tasks like image captioning, video analysis, and cross-modal applications.

Pre-trained vs. Fine-tuned vs. Instruction-tuned models. Pre-trained (foundation) models are trained on vast, diverse datasets and can perform a range of tasks out of the box. Fine-tuned models, like domain-specific versions of BERT, are further trained for specific tasks or industries, such as healthcare or legal analysis, offering greater accuracy for niche applications. Instruction-tuned models, such as the chat versions of GPT-4, are further trained to follow human-provided instructions, making them highly interactive and capable of understanding user intent.

2️⃣ Open-source vs. Closed-source Models

Are you looking for an open-source model you can deploy in-house to maintain full control and maximum data protection? Or are you open to leveraging powerful commercial, cloud-hosted models already available on the market, balancing convenience with capability?

Open-source LLMs allow users to deploy and manage the model in their own environment, which can give better control over data privacy and security. This advantage, however, depends heavily on how the model is deployed and managed. For instance, if the open-source LLM is deployed on-premises, data privacy can be tightly controlled.

Open-source models are generally valued for: customization (you can modify and fine-tune the model to meet specific business needs), transparency (the code and architecture are openly accessible for review and auditing), and cost flexibility (no licensing fees, though infrastructure and compute costs may apply).

Closed-source LLMs are proprietary solutions offered by commercial providers, often hosted in the cloud. These models are ready-to-use, requiring no maintenance or infrastructure setup, making them ideal for businesses seeking ease of integration and powerful performance.

Their key benefits include convenience (no need for in-house infrastructure or technical expertise), high performance (proprietary models often lead in benchmarks for language understanding, summarization, and generation tasks), great scalability (hosted solutions are designed to handle varying workloads seamlessly), and technical support (access to dedicated customer service and regular updates).

3️⃣ Performance and Accuracy

Not all LLMs are created equal, and their performance varies depending on the task at hand. Therefore, businesses should evaluate:

- Language proficiency. Models differ in their ability to understand nuances like cultural context, industry jargon, or multilingual requirements. For example, a global eCommerce company may prioritize models that excel in multiple languages.

- Precision vs. Generalization. Tasks like legal document analysis or financial forecasting demand high precision. A general-purpose large language model might miss critical subtleties in such scenarios, while a specialized model could excel.

4️⃣ Latency and Throughput

For applications like chatbots, customer service systems, or interactive tools, latency and throughput are critical performance factors:

- Low latency. Real-time systems (AI virtual assistants or customer support chatbots) demand response times under a second. Deploying a lightweight, fine-tuned model on-premise or using an optimized API can help reduce latency.

- High throughput. Tasks like processing large datasets or summarizing documents in bulk prioritize the total volume processed over the response time of any single request. Batch processing with larger models deployed in the cloud might be more efficient for these use cases.
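A quick way to compare candidate models on these two factors is to time them on the same prompts. The sketch below assumes a hypothetical `fake_model` function standing in for a real API client or local model, and it sends requests one at a time, so it measures sequential throughput only.

```python
import math
import statistics
import time

def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; swap in your real client."""
    time.sleep(0.01)  # pretend inference takes about 10 ms
    return prompt.upper()

def benchmark(model_fn, prompts):
    """Time sequential calls and report median/p95 latency and throughput."""
    latencies = []
    start = time.perf_counter()
    for prompt in prompts:
        t0 = time.perf_counter()
        model_fn(prompt)
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - start
    ordered = sorted(latencies)
    # Nearest-rank 95th percentile
    p95 = ordered[math.ceil(0.95 * len(ordered)) - 1]
    return {
        "p50_latency_s": statistics.median(ordered),
        "p95_latency_s": p95,
        "throughput_rps": len(prompts) / elapsed,
    }

if __name__ == "__main__":
    print(benchmark(fake_model, ["Where is my order?"] * 20))
```

Under real load you would also test concurrent or batched requests, since batching usually raises throughput at the cost of per-request latency.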

5️⃣ Task Specificity

Some LLMs are fine-tuned for specific industries or tasks, such as healthcare, law, or customer service. These task-specific models offer:

- Improved efficiency. They are better at understanding domain-specific terminology and context, reducing the need for extensive training or manual intervention.

- Faster ROI. Specialized models often require less customization, enabling quicker deployment and measurable returns.

For instance, a healthcare-focused large language model can assist in clinical decision support systems far better than a generic LLM. Businesses should assess whether their needs are better served by a general-purpose model or a specialized one.

6️⃣ Scalability, Costs, and Privacy

The practical aspects of deploying an LLM can significantly affect a business's ability to integrate and scale it effectively. Key factors include:

Infrastructure requirements. Cloud-based models might be ideal for businesses without extensive computational infrastructure. Providers like OpenAI and AWS offer scalable access to LLMs, eliminating the need for upfront investment in hardware. At the same time, on-premise LLMs are suitable for businesses with strict data privacy requirements or large-scale operations. While the initial hardware costs are high, this option offers greater control over latency and data handling.

Cost vs. Value. Advanced LLMs with high performance often come with substantial costs, whether deployed via API or on-premise. Therefore, businesses need to evaluate whether the benefits justify the investment.

Data privacy and security. Industries like finance and healthcare demand strict adherence to data protection regulations. Choosing an LLM that aligns with these requirements is essential to avoid compliance risks.

The deployment environment must align with both business operations and long-term growth strategies.

7️⃣ Ease of LLM Integration

LLMs must seamlessly integrate with existing infrastructure, whether through APIs, SDKs, or fine-tuning pipelines. Compatibility with existing software tools and workflows is pivotal to minimizing disruptions during deployment.

Oleh Pylypchak, Chief Technology Officer and Co-Founder at BotsCrew

"When selecting an LLM for your business, it's essential to balance speed, quality, and cost. The market offers both large models with a high number of parameters and smaller, more lightweight models.

Larger models typically deliver better performance and smarter results for complex tasks but are often slower and more expensive. On the other hand, smaller LLMs are faster and more cost-effective but may not achieve the same level of sophistication.

To find the best solution for your specific needs, consider testing multiple models:

#1. Start simple and scale up. Begin with smaller, faster, and less expensive models, gradually moving to more complex and powerful ones until the results meet your expectations.

#2. Start with the best and scale down. Begin with the most advanced model available and work your way down to simpler models, stopping when you reach a satisfactory balance of quality, speed, and cost."
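The "start simple and scale up" approach boils down to a simple loop. In this sketch, the model names, the scores, and the `evaluate` function are hypothetical placeholders for your own test set and scoring method.

```python
from typing import Callable, Dict, Optional, Sequence, Tuple

def pick_smallest_good_enough(
    models: Sequence[str],
    evaluate: Callable[[str], float],
    threshold: float,
) -> Tuple[Optional[str], Dict[str, float]]:
    """Try models ordered from cheapest to most capable and return the first
    whose score meets the threshold, plus every score computed along the way."""
    scores: Dict[str, float] = {}
    for name in models:
        scores[name] = evaluate(name)
        if scores[name] >= threshold:
            return name, scores  # stop early: no need to pay for bigger models
    return None, scores  # nothing met the bar; revisit prompts or the threshold

# Hypothetical scores from your own test set (e.g. share of answers rated acceptable)
example_scores = {"small-model": 0.72, "medium-model": 0.86, "large-model": 0.93}
choice, seen = pick_smallest_good_enough(
    ["small-model", "medium-model", "large-model"], example_scores.get, threshold=0.85
)
# choice == "medium-model"; "large-model" is never evaluated
```

The "start with the best and scale down" variant walks the same list from the most capable model downward and keeps the last model that still clears the threshold.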


LLM Comparison: The Most Popular and Niche Options & Nuances to Consider

With so many options available, each with unique strengths, capabilities, and trade-offs, choosing the right model can feel overwhelming. We've highlighted the most notable LLMs on the market, compared them, and described their specialties and how they can be tailored to meet your business goals.

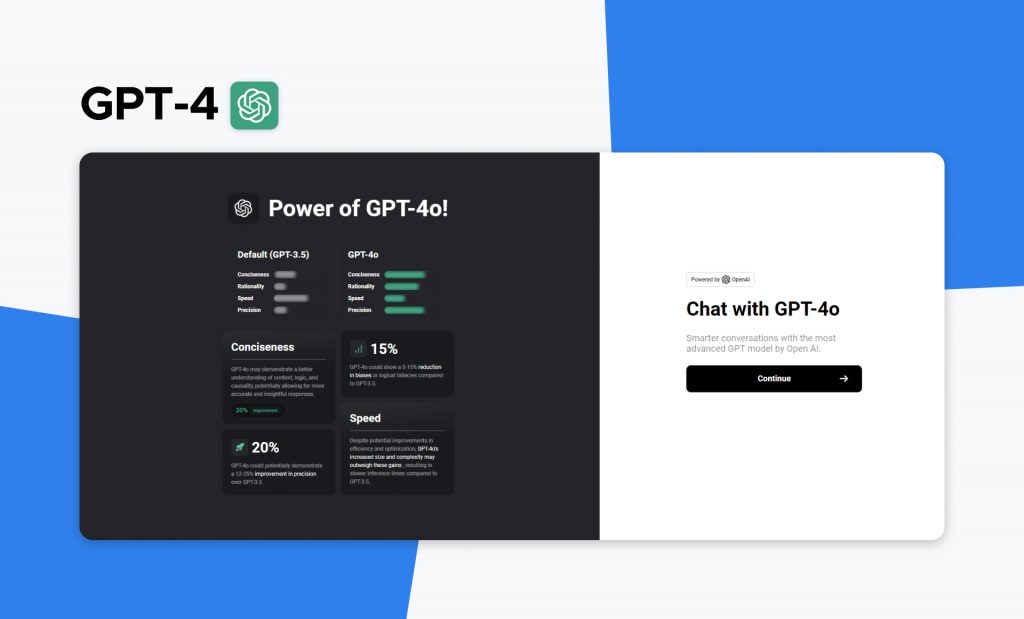

GPT-4 + updated versions (OpenAI)

A versatile and highly capable general-purpose model designed for a wide range of natural language processing (NLP) tasks.

📝 Use cases:

- Customer support automation

- Content creation

- Multilingual translation and localization

- Document summarization

- Business intelligence and analytics

- Coding assistance and debugging.

💬 Key features:

- Large context window (up to about 25,000 words in the 32k-token version)

- Multi-language support (supports over 25 languages)

- API and integration

- Memory management (optimizing the use of computational resources, such as RAM, GPU memory, and storage, to efficiently train and deploy LLMs. Given the immense size of LLMs, effective memory management is essential for smooth performance, cost efficiency, and scalability)

- Improved context understanding

- Great knowledge base

- Reduced biases and hallucinations compared with earlier GPT models

- Fine-tuning capabilities (can be fine-tuned on custom datasets, allowing businesses to tailor the model for specific tasks, industries, or company needs).
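A rough way to check whether a document fits in the context window is to estimate its token count from its word count. The sketch below assumes OpenAI's rule of thumb that one token is about 0.75 English words (which is how a 32k-token window works out to roughly 25,000 words); for exact counts, use the model's own tokenizer, such as OpenAI's `tiktoken` library. The `reserved_for_answer` budget is an illustrative assumption.

```python
import math

def estimate_tokens(text: str, words_per_token: float = 0.75) -> int:
    """Rough token count from word count (heuristic, not a real tokenizer)."""
    return math.ceil(len(text.split()) / words_per_token)

def fits_in_context(text: str, context_tokens: int, reserved_for_answer: int = 1000) -> bool:
    """True if the document plus a reply budget fits the model's context window."""
    return estimate_tokens(text) + reserved_for_answer <= context_tokens

# A 750-word document is roughly 1,000 tokens, comfortably inside an 8k window
doc = "word " * 750
print(estimate_tokens(doc), fits_in_context(doc, context_tokens=8192))
```

If a document does not fit, the usual options are splitting it into chunks, summarizing in stages, or retrieving only the relevant passages.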

💪🏻 Strengths:

- Advanced reasoning capabilities

- Smooth and accurate answers

- Great context understanding

- Fluent, well-structured writing when prompted well

- The GPT-4o version can interpret and read documents, presentations, and images accurately

- Great scalability (is designed to scale according to the user's needs, whether for a small startup or a large enterprise with high processing demands).

🤔 Considerations:

- Cost can be high for frequent or large-scale usage

- Reliance on cloud services may raise privacy concerns for sensitive data

- Struggles with humor.

💸 Pricing:

Varies based on usage

API Access

- GPT-4 (8k context): $0.03 per 1,000 prompt tokens, $0.06 per 1,000 completion tokens

- GPT-4 (32k context): $0.06 per 1,000 prompt tokens, $0.12 per 1,000 completion tokens

These rates apply to developers and businesses using the GPT-4 API.

ChatGPT Plus

$20/month for GPT-4 access via ChatGPT, designed for personal or casual use (not for API access).
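To see what these rates mean in practice, here is a small cost calculator using the GPT-4 API prices listed above. The dictionary keys are labels chosen for this sketch, and the request volumes are hypothetical; prices change, so check OpenAI's current pricing page before budgeting.

```python
# Per-1,000-token GPT-4 API rates quoted above (USD)
RATES = {
    "gpt-4-8k": {"prompt": 0.03, "completion": 0.06},
    "gpt-4-32k": {"prompt": 0.06, "completion": 0.12},
}

def request_cost(model: str, prompt_tokens: int, completion_tokens: int) -> float:
    """Cost in USD of one API call, billed separately for prompt and completion tokens."""
    r = RATES[model]
    return (prompt_tokens / 1000) * r["prompt"] + (completion_tokens / 1000) * r["completion"]

def monthly_cost(model: str, prompt_tokens: int, completion_tokens: int,
                 requests_per_day: int, days: int = 30) -> float:
    """Projected spend for a steady daily volume of similar requests."""
    return request_cost(model, prompt_tokens, completion_tokens) * requests_per_day * days

print(f"${request_cost('gpt-4-8k', 1500, 500):.3f} per request")
print(f"${monthly_cost('gpt-4-8k', 1500, 500, requests_per_day=1000):,.2f} per month")
```

For example, a request with 1,500 prompt tokens and 500 completion tokens on the 8k model costs 1.5 × $0.03 + 0.5 × $0.06 = $0.075, so 1,000 such requests a day comes to about $2,250 over 30 days.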

LLaMA (Meta AI)

A series of open-source LLMs designed to offer a balance between efficiency and performance, catering to both research and practical applications. LLaMA models are smaller in size compared to some of the larger models like GPT-4, yet they deliver competitive performance in various natural language processing tasks.

📝 Use cases:

- Research assistance

- Custom NLP solutions for startups and small businesses

- Content creation

- Language translation

- Writing assistance

- Customer service

- Marketing & PR

- Technical documentation

- Brainstorming.

💬 Key features:

- Sentiment analysis

- No personal opinions

- Continuous improvement

- Contextual understanding

- Competitive performance on multiple NLP benchmarks despite the smaller size

- Supports fine-tuning for domain-specific tasks and applications.

💪🏻 Strengths:

- Open-source availability enables deployment on-premise

- Lower compute requirements compared to other models

- Can be fine-tuned for specific industries or tasks

- Competitive Performance: despite being smaller, LLaMA holds its own in many NLP tasks, offering high performance with lower computational requirements.

🤔 Considerations:

- Lacks prebuilt APIs, requiring more technical expertise for deployment

- May not match GPT-4's out-of-the-box capabilities

- May not perform as well as larger models like GPT-4 in highly specialized or complex tasks

- Generally understands only about 70% of user queries correctly

- Hallucinations are quite common

- Fine-tuning LLaMA requires significant computational resources, especially for larger versions.

💸 Pricing:

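Free. LLaMA model weights can be downloaded at no cost under Meta's license terms (which include some restrictions, such as a separate license for very large commercial services), but running, fine-tuning, or deploying them may incur computational costs, especially for larger configurations.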
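To gauge those computational costs, a back-of-the-envelope estimate of GPU memory helps. The sketch below counts only the memory needed to hold the weights at a given numeric precision, plus a commonly cited rule of thumb of about 16 bytes per parameter for full fine-tuning with the Adam optimizer in mixed precision (weights, gradients, and optimizer states). Activations and the inference-time KV cache add more on top, so treat the results as lower bounds.

```python
# Approximate bytes needed to store one parameter at common precisions
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}

def weights_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Memory (decimal GB) to hold the weights alone, excluding activations and KV cache."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9

def full_finetune_memory_gb(num_params: float, bytes_per_param: float = 16.0) -> float:
    """Rule-of-thumb memory for full fine-tuning with Adam in mixed precision
    (fp16 weights and gradients plus fp32 master weights and optimizer states)."""
    return num_params * bytes_per_param / 1e9

for size in (7e9, 70e9):
    print(f"{size / 1e9:.0f}B params: "
          f"{weights_memory_gb(size):.1f} GB (fp16 weights), "
          f"{weights_memory_gb(size, 'int4'):.1f} GB (4-bit weights), "
          f"~{full_finetune_memory_gb(size):.0f} GB (full fine-tuning)")
```

By this estimate, a 7-billion-parameter model needs about 14 GB just for its weights in 16-bit precision (3.5 GB at 4-bit), while fully fine-tuning it needs on the order of 112 GB, which is why fine-tuning the larger versions quickly requires multiple GPUs.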

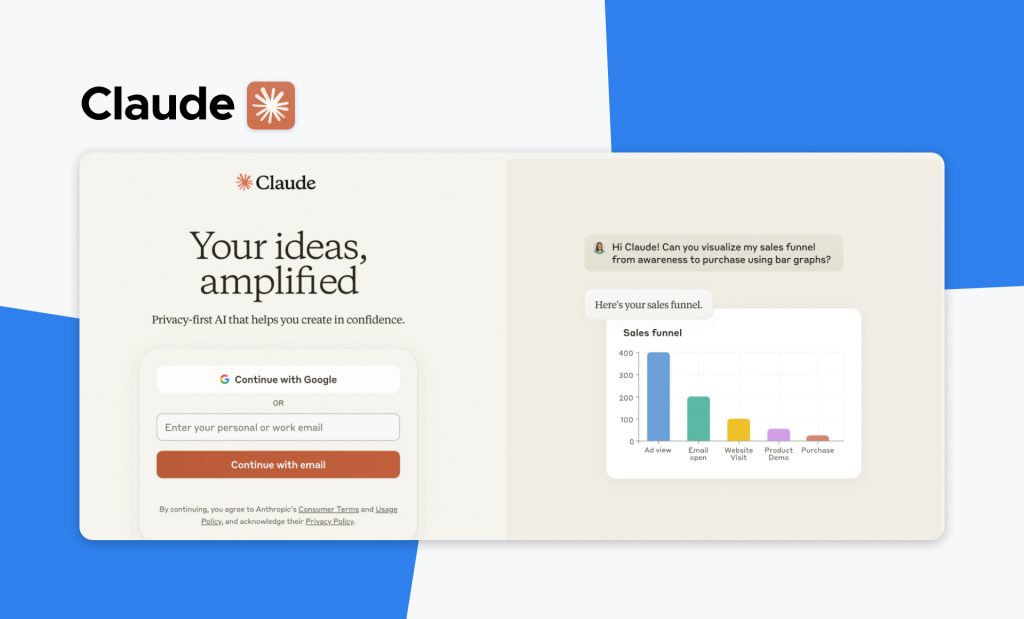

Claude (Anthropic)

An LLM designed with a strong emphasis on safety, reliability, and ethical AI. It is particularly well-suited for conversational AI and tasks requiring a high degree of sensitivity and accuracy. Claude is accessible via cloud-based APIs, which makes it easy to integrate into various applications.

📝 Use cases:

- Chatbots for sensitive industries like healthcare or finance

- Customer support

- Content creation

- Prompt engineering

- Document summarization and data extraction.

💬 Key features:

- Adapting communication style to the user's needs and preferences

- Constantly expanding knowledge base through training on high-quality data

- Robust safeguards to minimize harmful or biased outputs

- Optimized for natural, conversational interactions

- Supports document summarization, text classification, and other NLP tasks.

💪🏻 Strengths:

- Strong focus on ethical AI and minimizing harmful outputs

- Performs well in conversational contexts and summarization tasks

- Easily accessible via cloud-based APIs.

🤔 Considerations:

- Performance in highly technical or niche tasks may lag behind models like GPT-4

- The claude.ai app requires phone number and age verification even when you sign in with Google

- Tends to give long answers to simple questions

- Has memory within a conversation but doesn't build on it over time

- Limited customization options compared to open-source models like LLaMA.

💸 Pricing:

Varies based on usage and plan. Anthropic publishes per-token API rates for each Claude model on its pricing page; enterprise terms are available on request.

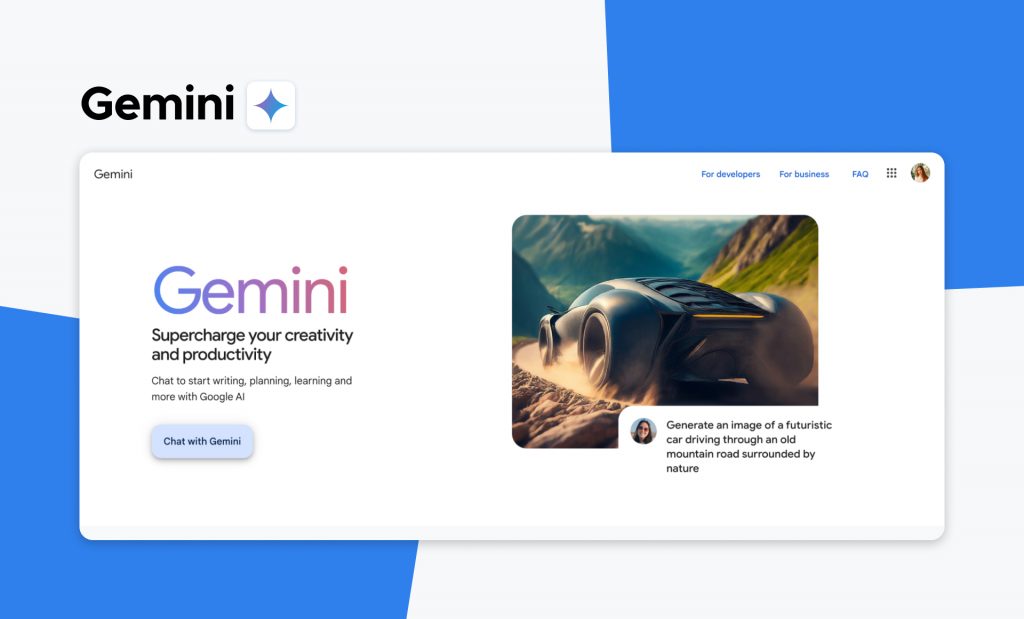

Gemini (Google DeepMind)

An advanced multimodal LLM designed to combine the power of language understanding with deep contextual reasoning and problem-solving capabilities. It represents Google's cutting-edge efforts to create AI systems that can process and integrate data from multiple modalities, such as text, images, and even code. Gemini is engineered for diverse applications, emphasizing accuracy, adaptability, and collaboration.

📝 Use cases:

- Research assistance

- Advanced data analysis combining textual and visual information

- Multimodal customer support systems capable of handling image-based queries

- Coding and development

- Content creation that involves text and visual assets.

💬 Key features:

- Multimodal capabilities, enabling understanding and generation across text, images, and other data types

- Seamless integration with Google's suite of tools and APIs

- Extensive training on diverse datasets, ensuring proficiency in various domains.

💪🏻 Strengths:

- Multimodal capabilities allow integration of visual and textual data

- Optimized for diverse applications, from natural language understanding to complex AI tasks

- Constantly updated and improved through Google's advanced AI research.

🤔 Considerations:

- Availability may be limited to Google Cloud and enterprise ecosystems

- Responses take longer to generate

- Responses are often generic

- May require substantial resources for fine-tuning and integration

- Performance in specialized domains may need further validation

- Pricing is typically tailored to enterprise clients and may not suit smaller organizations.

💸 Pricing:

Varies based on usage and integration needs. Google publishes per-token rates for the Gemini API, and businesses can inquire through Google Cloud's enterprise services for custom quotes.

📝 Depending on the task, even models from the same provider can vary in suitability. For example, OpenAI's GPT-4 excels in conversation thanks to its advanced language understanding and generation capabilities, while GPT-4V (Vision) extends GPT-4 to multimodal tasks by accepting images as well as text. Each model is tailored for different use cases, so selecting the right one depends on the specific requirements of your project.

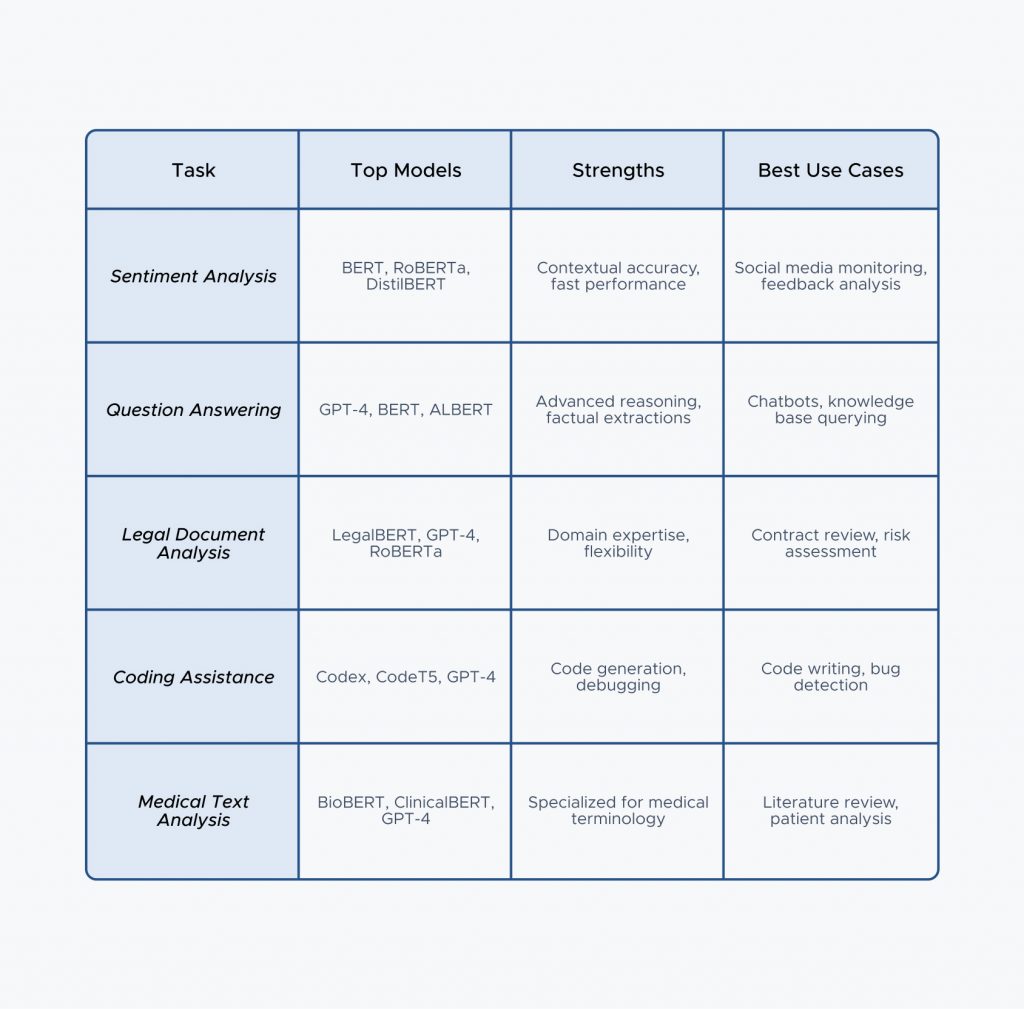

Choosing the Right Model for Specific, Narrow Tasks

When it comes to narrow tasks, the right model depends on the nature of the task, the required accuracy, and the domain-specific expertise needed. Below is a comparison of models and their suitability for specific use cases:

Sentiment / Customer Feedback Analysis

Best models:

- BERT: Excels in understanding context and nuances in text.

- RoBERTa: A robustly optimized variant of BERT, pre-trained longer on more data, that generally outperforms BERT after fine-tuning.

- DistilBERT: A lighter, faster alternative for real-time applications.

Why they are effective:

- Pre-trained to read context in both directions (the words before and after each token).

- Fine-tuning can make these models highly accurate for identifying emotions or opinions in text.
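As a sketch of how a BERT-family model can be put to work on feedback analysis, the function below counts sentiment labels across a batch of comments. By default it loads a Hugging Face `transformers` sentiment pipeline with the publicly available `distilbert-base-uncased-finetuned-sst-2-english` checkpoint, which returns `POSITIVE` or `NEGATIVE` labels; any callable with the same output shape can be passed in instead, which is also how the sketch can be tried without downloading a model.

```python
from collections import Counter

def summarize_feedback(texts, classifier=None):
    """Label each piece of feedback and count the labels.

    `classifier` is any callable that takes a list of strings and returns
    dicts with a "label" key, the output shape of a Hugging Face
    text-classification pipeline.
    """
    if classifier is None:
        # Requires `pip install transformers` plus a model download on first use
        from transformers import pipeline
        classifier = pipeline(
            "sentiment-analysis",
            model="distilbert-base-uncased-finetuned-sst-2-english",
        )
    results = classifier(list(texts))
    return Counter(r["label"] for r in results)
```

Calling `summarize_feedback(["Checkout was quick", "Support never replied"])` returns a `Counter` of label counts that can feed a dashboard or a weekly report. For domain-specific labels (for example, complaint categories), the same structure works with a model fine-tuned on your own tagged feedback.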

Question Answering (QA), Customer/Technical Support & Knowledge Base Querying

Best models:

- GPT-4: Superior reasoning and general knowledge.

- BERT: Strong for extractive QA, pulling relevant answers from a text.

- ALBERT: A lightweight, scalable alternative to BERT, optimized for QA tasks.

Why they are effective:

- GPT-4's generative abilities allow for nuanced, contextual answers.

- BERT-like models are great for factual or domain-specific QA.

Legal Document Analysis & Risk Assessment in Compliance

Best models:

- LegalBERT: Pretrained on legal text, optimized for tasks like clause extraction and contract analysis.

- GPT-4: Effective for interpreting legal language and providing summaries or insights.

- RoBERTa: Works well for identifying key terms or clauses when fine-tuned.

Why they are effective:

- LegalBERT excels in domain-specific understanding.

- GPT-4 can bridge gaps by explaining legal jargon to non-experts.

Medical Text & Patient Record Analysis

Best models:

- BioBERT: Pre-trained on biomedical datasets, optimized for tasks like text mining.

- ClinicalBERT: Fine-tuned on clinical data for healthcare applications.

- GPT-4: Versatile for summarizing or interpreting medical research and reports.

Why they are effective:

- Domain-specific models like BioBERT and ClinicalBERT provide superior accuracy in medical language.

- GPT-4 can simplify and explain complex medical information.

Selecting the right LLM for narrow tasks hinges on the specifics of your use case. Specialized models often outperform generalists in accuracy and cost-effectiveness, while general-purpose models like GPT-4 shine in versatility and ease of use.

Best Practices for Implementing LLMs in Business

Integrating Large Language Models into business operations can unlock transformative efficiencies and insights. However, successful implementation requires careful planning and adherence to best practices. Here is how to maximize the value of LLMs while mitigating risks:

#1. Evaluate your business needs. Clearly define the problem the LLM will solve, the output you expect, and how it aligns with your business goals. This will help avoid overspending on unnecessary features. Moreover:

Prioritize use cases: focus on high-impact applications like customer service automation or process optimization to maximize ROI.

Assess accuracy requirements: choose a simpler, cost-effective model if tasks like summarization or sentiment analysis don't require cutting-edge accuracy. For critical tasks like legal document review, consider higher-performing models.

#2. Ensure Seamless Integration. Evaluate whether your current systems (e.g., CRM, ERP, or workflow management tools) support LLM integration. APIs often provide easier integration compared to in-house deployments.

Implement middleware to bridge compatibility gaps between your existing tech stack and the LLM, simplifying the integration process. Ensure your infrastructure can handle peak demands, especially if the LLM will be used for real-time applications like chatbots.
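Here is a minimal sketch of such a middleware layer, assuming your business systems should talk to one internal interface rather than a specific vendor SDK. `LLMBackend`, `EchoBackend`, and `LLMGateway` are hypothetical names; in practice the backend would wrap a provider's API client or an on-premises model server.

```python
from typing import Protocol

class LLMBackend(Protocol):
    """Anything that can turn a prompt into a completion."""
    def complete(self, prompt: str) -> str: ...

class EchoBackend:
    """Hypothetical stand-in for a vendor SDK or an on-premises model server."""
    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"

class LLMGateway:
    """Thin middleware: business systems call `ask`, never a vendor SDK directly."""
    def __init__(self, backend: LLMBackend, max_prompt_chars: int = 8000):
        self.backend = backend
        self.max_prompt_chars = max_prompt_chars

    def ask(self, ticket_text: str) -> dict:
        # Normalize and cap input size before it reaches the model
        prompt = ticket_text.strip()[: self.max_prompt_chars]
        answer = self.backend.complete(prompt)
        # Return a plain dict so CRM/ERP integrations get a stable format
        return {"prompt_chars": len(prompt), "answer": answer}

gateway = LLMGateway(EchoBackend())
print(gateway.ask("  Where is my order #123?  "))
```

Switching providers then means writing a new backend class, while the CRM or ERP integration that calls `ask` stays unchanged.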

#3. Prioritize Data Privacy and Compliance. Data security is a critical consideration, especially in industries like healthcare, finance, and law.

Anonymize data when needed: mask or anonymize sensitive data before feeding it into the model to minimize risks.
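A minimal sketch of masking before text reaches the model, assuming regular expressions are enough for your data. The two patterns below (email addresses and phone-number-like digit runs) are illustrative only; names, addresses, and IDs need broader rules or a dedicated PII-detection tool, so review the coverage with your compliance team.

```python
import re

# Illustrative patterns only; order matters (emails are masked before phone numbers)
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}

def mask_pii(text: str) -> str:
    """Replace each match with a placeholder such as [EMAIL] before sending text to an LLM."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

print(mask_pii("Contact Jane at jane.doe@example.com or +1 (555) 123-4567."))
# Contact Jane at [EMAIL] or [PHONE].
```

Note that the name "Jane" survives, which shows why pattern-based masking is only a first layer of protection.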

#4. Train or Fine-Tune for Specific Use Cases. While pretrained models are powerful, fine-tuning them with your business data can significantly enhance their performance.

Training: train the model on domain-specific data to improve accuracy in your field. For instance, a retail company could fine-tune a model for inventory analysis and customer sentiment tracking.

Regular updates: continuously retrain the model to adapt to changing data trends or business needs.
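Fine-tuning starts with training examples in the format your provider expects. This sketch assumes OpenAI's chat fine-tuning format, where each line of a JSON Lines file holds a `messages` list of system, user, and assistant turns; other providers and open-source training tools use different schemas. The inventory question and answer are hypothetical.

```python
import json

def to_chat_example(question: str, answer: str, system: str) -> dict:
    """One training example: a system prompt, a user turn, and the ideal assistant reply."""
    return {
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }

def to_jsonl(pairs, system: str = "You are an inventory assistant for a retail company.") -> str:
    """Serialize (question, answer) pairs as JSON Lines, one example per line."""
    return "".join(json.dumps(to_chat_example(q, a, system)) + "\n" for q, a in pairs)

# Hypothetical training pair drawn from internal records
pairs = [("Is SKU 1042 in stock in Denver?", "Yes, 14 units are available in Denver.")]
print(to_jsonl(pairs))
```

You would write the result to a file, for example with `open("train.jsonl", "w", encoding="utf-8").write(to_jsonl(pairs))`, and then upload it through your provider's fine-tuning workflow.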

#5. Monitor and evaluate model performance. Regular performance evaluation ensures the LLM delivers consistent value.

Set benchmarks: establish KPIs such as response accuracy, processing speed, and cost-efficiency.

Human oversight: include human review processes to catch errors, especially in high-stakes tasks like legal or medical analysis.

Feedback loops: use real-world feedback to identify areas for improvement and enhance model performance over time. It can also highlight specific issues or suggest enhancements that may not surface during internal testing. You can even launch a beta-testing group of internal teams or loyal customers who provide ongoing feedback on LLM performance.
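A small evaluation harness makes these KPIs concrete. In this sketch, the test cases, the flat per-call cost, and the exact-match scoring rule are assumptions; exact match suits short factual answers, while open-ended outputs usually need rubric-based or human grading. Failed cases are collected so a person can review them.

```python
from dataclasses import dataclass

@dataclass
class EvalCase:
    prompt: str
    expected: str

def evaluate(model_fn, cases, cost_per_call_usd):
    """Compute exact-match accuracy and total cost; collect failures for human review."""
    failures = []
    correct = 0
    for case in cases:
        output = model_fn(case.prompt)
        if output.strip().lower() == case.expected.strip().lower():
            correct += 1
        else:
            failures.append((case.prompt, output))
    return {
        "accuracy": correct / len(cases),
        "total_cost_usd": cost_per_call_usd * len(cases),
        "needs_review": failures,
    }

# Hypothetical test set and a dictionary standing in for the model
cases = [EvalCase("2+2?", "4"), EvalCase("Capital of France?", "Paris")]
answers = {"2+2?": "4", "Capital of France?": "Lyon"}
report = evaluate(answers.get, cases, cost_per_call_usd=0.01)
```

Running the same harness on every model or prompt change gives you a consistent baseline to compare against.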

#6. Anticipate "model drift" early. Over time, the relevance of an LLM's responses may degrade due to changing data patterns or evolving user needs. Staying ahead of model drift helps maintain consistent performance and user trust.

Schedule periodic audits, monitor accuracy, and implement ongoing retraining pipelines to refresh the model with the latest data.
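Monitoring for drift can start as simply as tracking accuracy on a rolling window of graded responses and raising a flag when it falls below the baseline you measured at launch. The class below is a hypothetical sketch; the baseline, tolerance, and window size are placeholders to tune for your own traffic.

```python
from collections import deque

class DriftMonitor:
    """Track rolling accuracy over recent graded responses and flag drops
    below the launch baseline minus a tolerance."""

    def __init__(self, baseline: float, tolerance: float = 0.05, window: int = 200):
        self.baseline = baseline
        self.tolerance = tolerance
        self.results = deque(maxlen=window)  # oldest results fall off automatically

    def record(self, was_correct: bool) -> None:
        self.results.append(1 if was_correct else 0)

    def rolling_accuracy(self) -> float:
        return sum(self.results) / len(self.results) if self.results else float("nan")

    def drifted(self) -> bool:
        # Only alert once the window is full, to avoid noise from tiny samples
        if len(self.results) < self.results.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance

monitor = DriftMonitor(baseline=0.90, tolerance=0.05, window=200)
```

A `drifted()` result of `True` is a signal to audit recent outputs and, if needed, trigger the retraining pipeline.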

#7. Start small and scale strategically. Begin with smaller pilot projects to test the LLM's capabilities before expanding its scope.

Proof of concept: use a pilot project to measure the model's ROI and address any challenges early on.

Phased rollout: gradually scale implementation to other areas of the business once the model proves successful.

Oleh Pylypchak, Chief Technology Officer and Co-Founder at BotsCrew

"My key advice is to start with simpler implementations and progress gradually toward more complex ones. For instance, when it comes to fine-tuning, it's wise to postpone advanced techniques until later stages. Begin with simple prompt engineering approaches, refine them, and then explore more advanced methods (such as fine-tuning) as needed."

These ideas can help you uncover unique opportunities, foster innovation, and future-proof your AI initiatives.
