How to Run a Successful AI POC in 30 Days

In this article, we’ll break down a proven 30-day framework used by leading AI consulting companies, outlining exactly how enterprises can design, build, and validate a successful AI POC in just one month.

For many enterprises, building an AI Proof of Concept (POC) is the fastest, lowest-risk way to validate value before investing in full-scale implementation. A well-structured 30-day AI POC helps teams prove feasibility, demonstrate ROI, and align stakeholders around what AI can accomplish - without long timelines or heavy engineering.

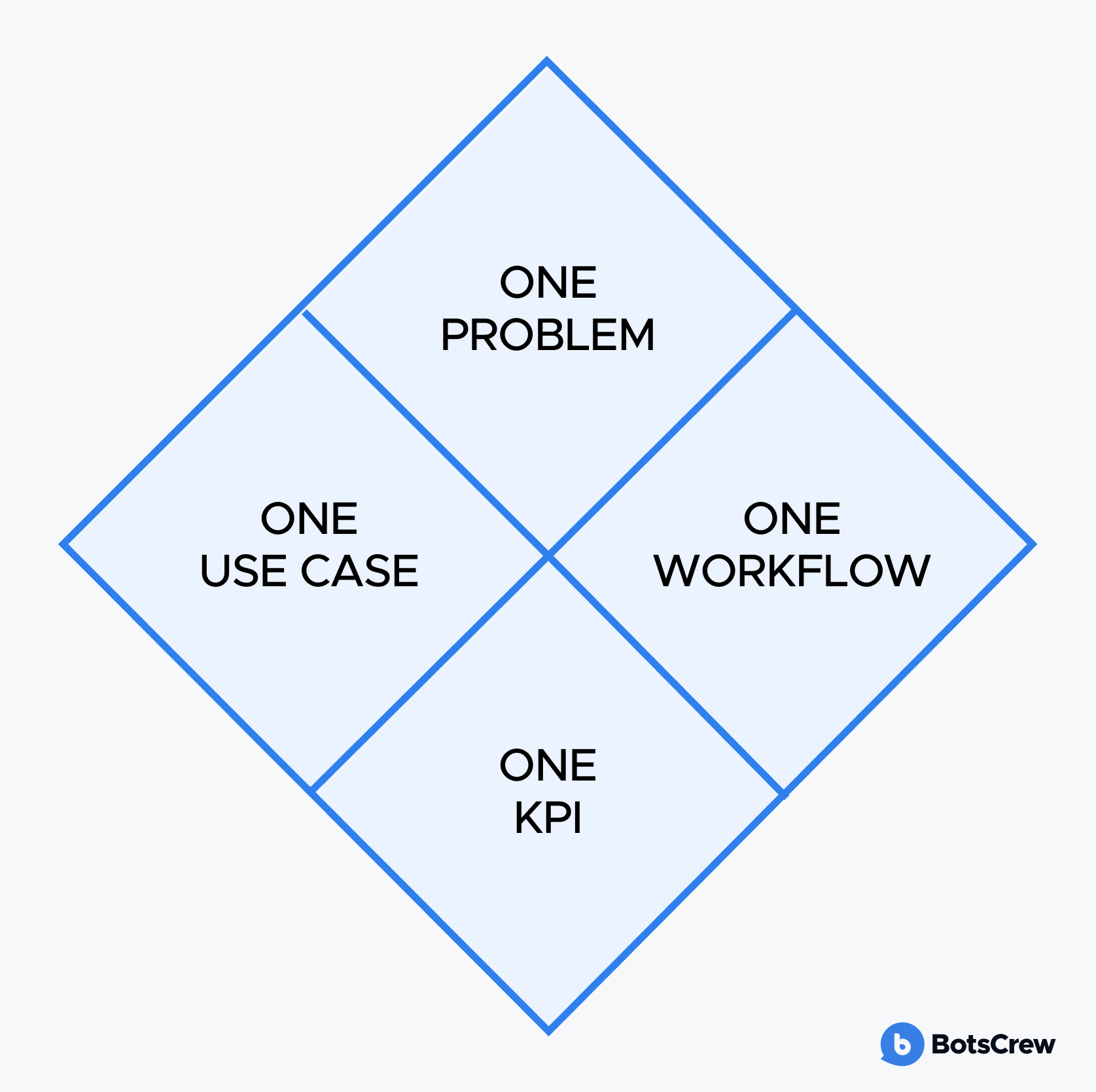

What Is an AI POC?

An AI POC (AI Proof of Concept) is a short, low-risk project designed to test whether a specific AI idea can deliver real business value before an organization invests in a full-scale solution.

Instead of building a complete system, an AI POC focuses on:

- One problem

- One use case

- One workflow

- One KPI to measure success

It usually runs for 2–6 weeks and answers the most important question:

“Will this AI solution work for our business, and is it worth scaling?”

Why AI POCs Matter in 2025

As enterprises accelerate AI adoption, leadership teams face three interconnected challenges:

- Too many potential AI ideas, unclear priorities

- Slow decision cycles and unclear success metrics

- Fear of investing in the wrong tools, vendors, or platforms

These operational gaps are already affecting competitiveness - 41% of leaders say slow AI rollouts have caused them to fall behind, while 39% report missing out on major productivity gains due to stalled or ineffective adoption efforts.

The consequences are tangible. Organizations cite delayed ROI (37%), inconsistent customer experiences (36%), and missed market opportunities (34%) as direct outcomes of poor AI execution. At the same time, leaders remain cautious about the risks tied to selecting and implementing AI solutions. Many point to high vendor costs (45%), limited trust in vendor security (38%), and concerns around vendor lock-in (33%), where systems become incompatible with internal infrastructure or too dependent on a single provider.

Together, these issues underscore a growing need for structured, low-risk validation methods, such as focused AI POCs, that help enterprises de-risk decisions, generate evidence fast, and build internal confidence before making large-scale investments.

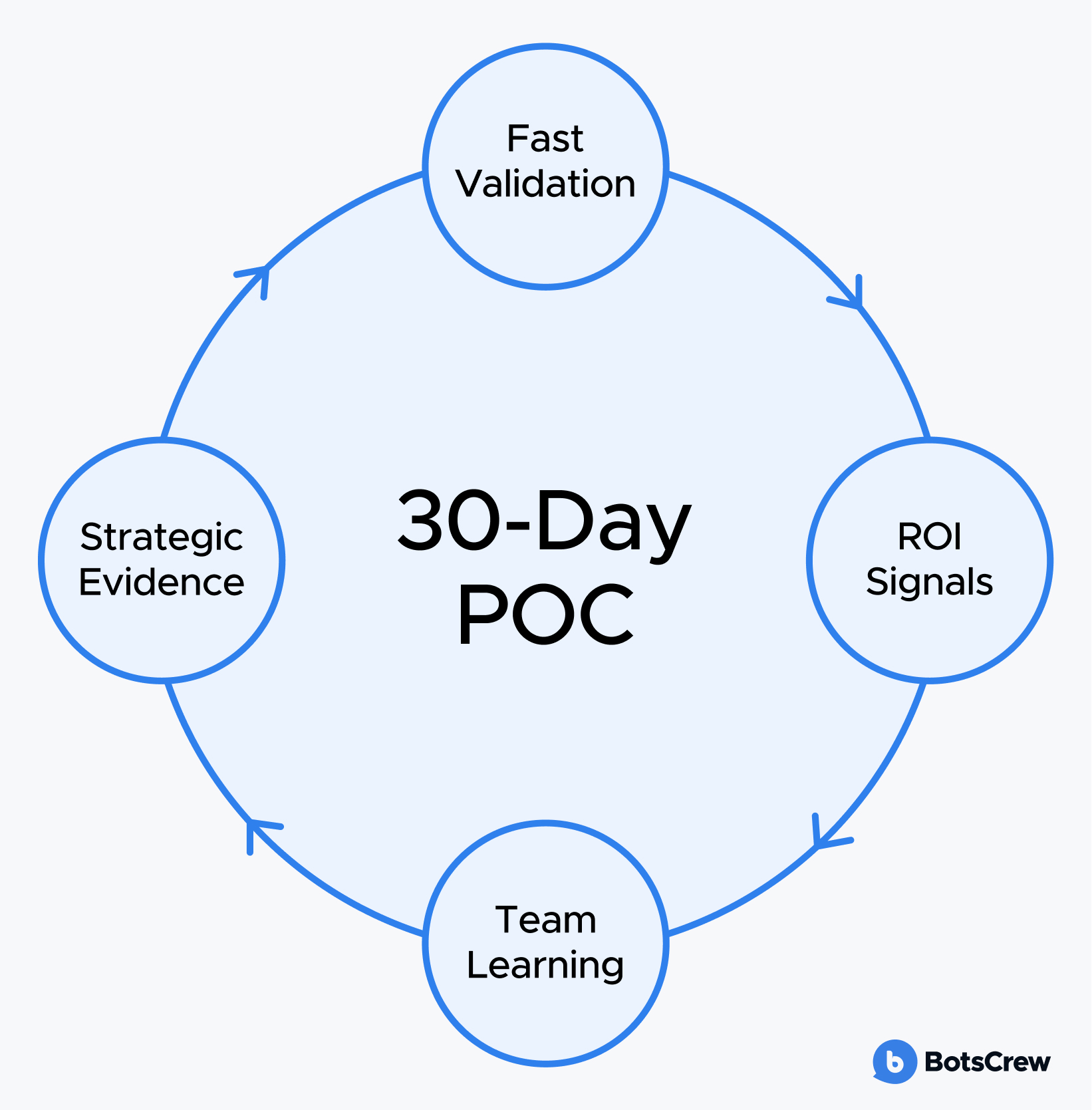

A 30-day POC solves these issues by providing:

- Fast validation of one high-impact use case

- Clear ROI signals before committing to a full build

- Hands-on learning for cross-functional teams

- Real evidence to guide AI strategy and roadmap decisions

When done well, an AI POC becomes the bridge between “AI exploration” and enterprise-scale execution.

The 30-Day AI POC Framework (Used by Top AI Consulting Teams)

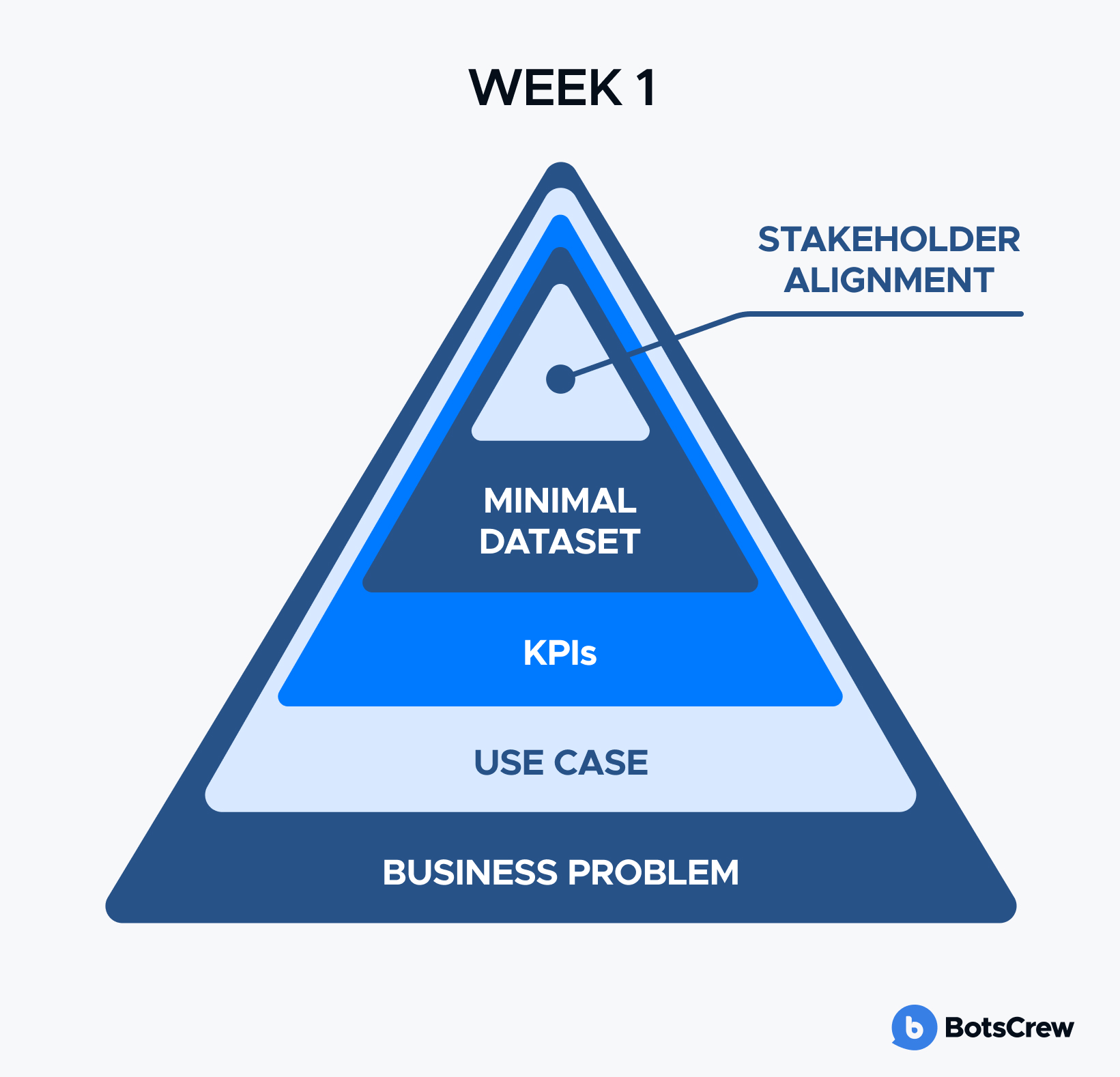

Week 1: Define the Problem, Scope, and Success Metrics

The first week sets the foundation for everything that follows. No model tuning or prototyping matters if the team isn’t united around a clearly defined problem, a narrow use case, and a measurable definition of success. The most successful AI POCs aren’t the ones with the most advanced architectures - they’re the ones with the sharpest clarity.

This week is about alignment, not engineering.

1. Start With One Clear Business Problem

Avoid starting with vague themes like “improving customer experience” or “reducing operational inefficiency.” These sound strategic, but don’t give a POC anything tangible to validate.

Instead, identify one business problem that is painful, measurable, and significant enough to matter. This is usually something that slows teams down, introduces errors, or consumes a disproportionate amount of human effort.

Typical candidates include:

- Reducing ticket resolution time

- Automating manual document reviews

- Improving forecasting accuracy

- Accelerating content creation

Example of a Good Business Problem:

“Our support team spends an average of 4 minutes manually classifying every inbound ticket, creating delays during peak hours and increasing our overall first-response time.”

Or

“Accounts payable teams manually match 70% of invoices because they arrive in different formats, slowing reconciliation by 2–3 days.”

2. Choose a Single High-Impact Use Case

Once the problem is defined, the next step is to select the specific use case you want AI to address. This is where many teams overreach. They try to “test everything at once” and end up validating none of it.

A strong POC focuses on one scenario that can reasonably be improved in 30 days. That focus is what allows teams to prototype quickly, test effectively, and demonstrate meaningful results that executives can trust.

3. Define 1–2 Success Metrics That Matter

Success metrics (KPIs) give your POC a target and keep decision-making objective. Without them, outputs are evaluated subjectively - “it feels good,” “it looks promising” - which undermines stakeholder confidence.

Pick one or two metrics that directly quantify improvement for the chosen use case. These might measure speed, quality, accuracy, or throughput. Common examples include reducing manual effort, accelerating output creation, or improving accuracy against a human-reviewed baseline. Once set, these KPIs should act as the north star for every decision in Weeks 2–4.

Example of a Good KPI:

“Reduce manual ticket classification time from 4 minutes to under 30 seconds per ticket.”

Or

“Achieve 85%+ accuracy compared to human-reviewed outputs.”
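
One way to keep KPIs like these objective is to write them down as data before any prototyping starts. The sketch below is illustrative only: the `Kpi` class, its field names, and the measured values passed to `is_met` are assumptions made for this example, not part of any specific tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Kpi:
    """One measurable POC target (all names here are illustrative)."""
    name: str
    target: float              # value the POC must reach
    higher_is_better: bool     # False for time or cost metrics
    baseline: Optional[float] = None  # value measured before the POC, if known

    def is_met(self, measured: float) -> bool:
        """Return True when the measured value reaches the target."""
        if self.higher_is_better:
            return measured >= self.target
        return measured <= self.target


# The two example KPIs from above, expressed as data.
classification_time = Kpi(
    name="Manual classification time per ticket (seconds)",
    target=30.0,               # "under 30 seconds" is treated as <= 30 here
    higher_is_better=False,
    baseline=240.0,            # 4 minutes
)
accuracy_kpi = Kpi(
    name="Accuracy vs. human-reviewed outputs",
    target=0.85,
    higher_is_better=True,
)

# Hypothetical measurements, used only to show the check.
print(classification_time.is_met(22.0))  # True
print(accuracy_kpi.is_met(0.81))         # False
```

Recording the direction of improvement (`higher_is_better`) alongside the target avoids ambiguity later, since time-based and accuracy-based KPIs move in opposite directions.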

4. Identify the Minimal Viable Dataset

A major misconception is that AI POCs require perfect, clean, fully structured data. They don’t. What they need is just enough real-world data to simulate the problem authentically.

This means identifying the smallest set of documents, logs, transcripts, forms, images, or customer interactions that still captures the complexity of the use case. What matters is representativeness, not volume. This dataset should also meet governance standards: appropriate access permissions, data masking if needed, and approval from security teams.
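
As a rough illustration of "representativeness, not volume," the sketch below draws a small sample that keeps every category of input, including rare ones. The `stratified_sample` helper, the `format` field, and the invoice records are hypothetical; a real POC would group by whatever attribute drives complexity in its own data.

```python
import random
from collections import defaultdict


def stratified_sample(records, key, per_group, seed=0):
    """Pick up to `per_group` records from each group so rare cases survive."""
    rng = random.Random(seed)  # fixed seed keeps the sample reproducible
    groups = defaultdict(list)
    for record in records:
        groups[record[key]].append(record)

    sample = []
    for group_key in sorted(groups):
        members = groups[group_key]
        sample.extend(rng.sample(members, min(per_group, len(members))))
    return sample


# Hypothetical invoices: mostly PDFs, a few scans, one email.
invoices = (
    [{"id": i, "format": "pdf"} for i in range(10)]
    + [{"id": 10, "format": "scan"}, {"id": 11, "format": "scan"}]
    + [{"id": 12, "format": "email"}]
)

sample = stratified_sample(invoices, key="format", per_group=2)
print(len(sample))  # 5
```

A plain random sample of five records from this list would often contain only PDFs; grouping first keeps the scan and email cases in the test set.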

By the end of Week 1, you should have:

- A clearly defined business problem

- A single razor-focused use case

- One or two KPIs that anchor the POC

- The minimum dataset required to prototype

- Stakeholder alignment on scope and expectations

This alignment is the biggest predictor of POC success. When Week 1 is done well, Weeks 2–4 move quickly. When Week 1 is rushed, every later step becomes slower, riskier, and harder to evaluate.

Week 2: Build the First Working Prototype

Week 2 is where the POC shifts from planning to execution. The goal isn’t to build a polished solution - it’s to create the fastest possible version that proves the idea works. At this stage, velocity matters more than architecture, and realism matters more than perfection. This is where teams transform a defined use case into something tangible that stakeholders can interact with.

1. Start With Rapid Prototyping

Instead of committing to one technology too early, Week 2 should start with rapid prototyping on flexible platforms and APIs such as Azure AI Foundry, Amazon Bedrock, Google Cloud Vertex AI, the OpenAI API, or Anthropic’s Claude. These tools let you test assumptions quickly, switch models, and experiment with prompts without heavy infrastructure decisions.

At this stage, a simple working version that demonstrates real potential is enough; it can evolve as data and feedback come in.

2. Build the Simplest Possible Working Version (V0.1)

Once you have a clear direction, the goal is to build a simple Version 0.1—a working prototype that processes real inputs and produces meaningful outputs. It doesn’t need full workflows or integrations; it just needs to prove technical viability. This might mean extracting fields from a few documents, classifying sample tickets, generating content, or answering internal queries.

The objective is immediate, visible value, even if the UI is rough or manual steps remain behind the scenes.

3. Introduce Basic Guardrails Early

Even in an experimental POC, governance matters.

This is the time to add lightweight guardrails and think about AI security - masking sensitive data, applying prompt controls, logging activity, and limiting access. These safeguards don’t need full engineering yet, but they should reflect the organization’s security and compliance expectations. The strongest POCs balance speed with responsible experimentation.
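
A lightweight guardrail of this kind can be sketched in a few lines. The example below is a minimal sketch, not a complete privacy control: the regular expressions, the placeholder tokens, and the `guarded_call` wrapper are assumptions for illustration, and `model_fn` stands in for whichever model client the team is actually using.

```python
import logging
import re

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("poc.guardrails")

# Simplified patterns; real deployments need broader, reviewed rules.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
LONG_NUMBER = re.compile(r"\b\d{9,}\b")  # e.g. account or card numbers


def mask_sensitive(text: str) -> str:
    """Replace email addresses and long digit runs with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return LONG_NUMBER.sub("[NUMBER]", text)


def guarded_call(model_fn, user_text: str) -> str:
    """Mask the input, call the model, and log sizes (not raw content)."""
    safe_text = mask_sensitive(user_text)
    logger.info("model input length=%d", len(safe_text))
    output = model_fn(safe_text)
    logger.info("model output length=%d", len(output))
    return output


masked = mask_sensitive("Contact jane.doe@example.com about account 1234567890")
print(masked)  # Contact [EMAIL] about account [NUMBER]
```

Logging only lengths rather than raw text is a deliberate choice here, so that the activity log does not itself become a store of sensitive data.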

4. Involve Subject-Matter Experts Throughout the Process

One of the biggest success factors in Week 2 is consistent involvement from subject-matter experts. SMEs provide the business context and domain knowledge that AI models need to be accurate and relevant. They should review outputs early, test edge cases, flag inaccuracies, and define what “good enough” looks like. Without SME input, prototypes often miss real-world expectations; with it, they become grounded, practical, and aligned with actual workflows.

By the end of Week 2, you should have a working prototype that can demonstrate feasibility, even in a simplified form. It doesn’t need to be beautiful or automated—but it must be real, testable, and representative of the chosen use case. If Week 1 created clarity, Week 2 creates momentum.

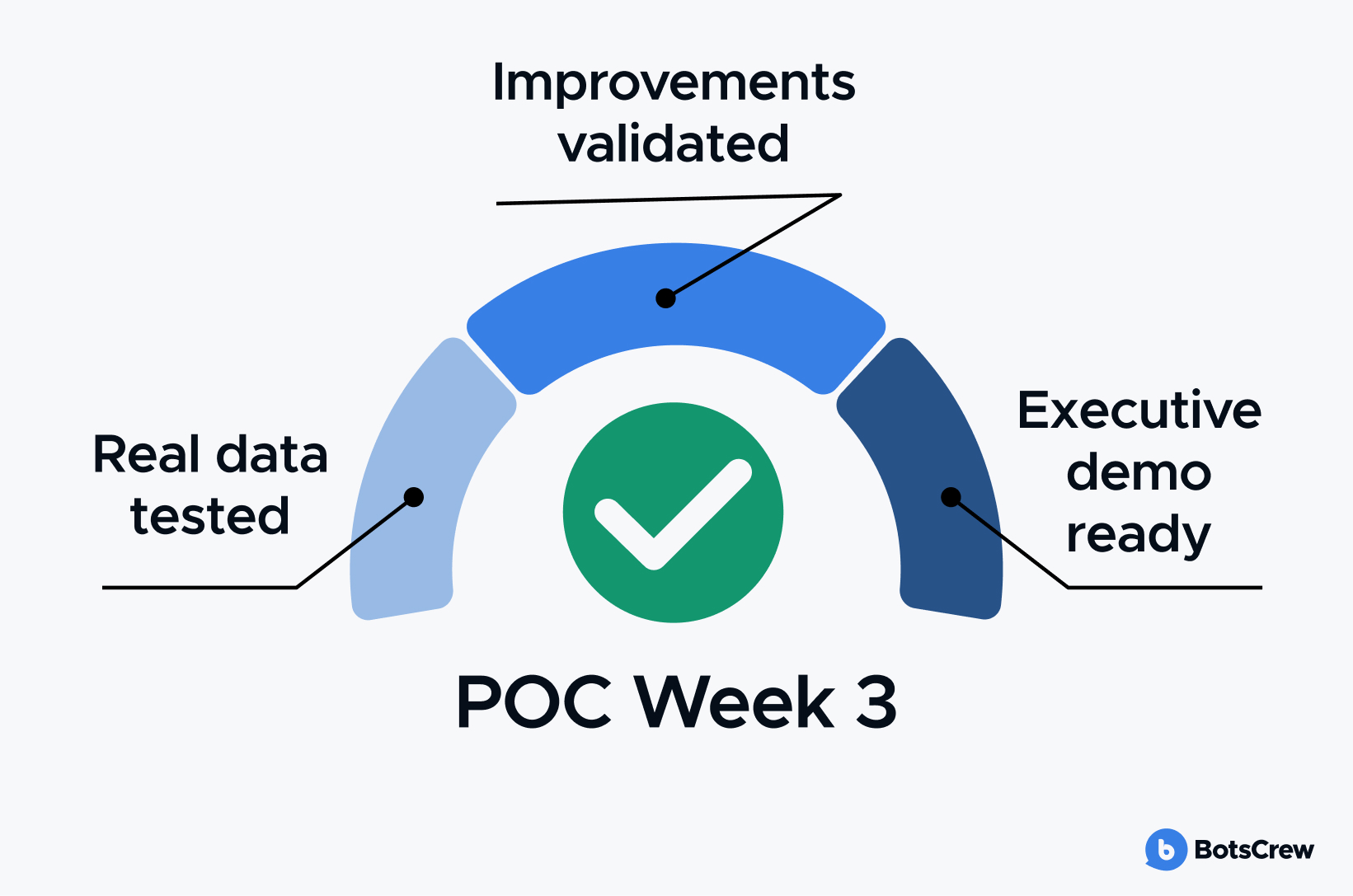

Week 3: Iterate, Improve, and Test with Real Data

Week 3 is where a POC truly proves its value. This is the moment when early prototypes meet the complexity of real operational data, when weak assumptions surface, and when strong ideas become validated. Most AI POCs succeed or fail here—not because of technology, but because this is where real-world conditions expose what works and what doesn’t.

1. Test Using Real Operational Data

The first step in Week 3 is to move beyond curated samples and test the prototype with real operational data - messy inputs, edge cases, inconsistent formats, and incomplete records. This exposes how the model behaves under true production conditions, revealing where it struggles, what it misinterprets, and whether the use case is genuinely viable or needs adjustment.

2. Refine, Improve, and Strengthen the Workflow

As real data exposes weaknesses, Week 3 becomes a cycle of refinement. Teams adjust prompts, add or improve retrieval (for example, retrieval-augmented generation, or RAG), streamline workflows, or integrate internal APIs. In some cases, light fine-tuning may help. The goal isn’t perfection; it’s steadily moving the prototype closer to the KPIs defined in Week 1 and improving consistency with each iteration.

3. Measure Results Against a Clear Baseline

Week 3 also requires a structured evaluation. Compare output quality, speed, accuracy, or cost against the baseline metrics from Week 1 to turn subjective impressions into objective evidence. Improvements like reduced manual effort, faster turnaround times, higher accuracy, or fewer errors make progress easy to quantify—and make Week 4 decisions far more confident.
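
The comparison itself can be very simple. The sketch below, written under assumed data, computes accuracy against human-reviewed labels and the relative reduction in handling time; the function names, labels, and numbers are hypothetical and only illustrate the arithmetic.

```python
def accuracy(predictions, human_labels):
    """Share of predictions that match the human-reviewed label."""
    if len(predictions) != len(human_labels):
        raise ValueError("predictions and human_labels must have equal length")
    if not human_labels:
        raise ValueError("at least one labelled example is required")
    matches = sum(p == h for p, h in zip(predictions, human_labels))
    return matches / len(human_labels)


def relative_reduction(baseline, measured):
    """Fraction by which `measured` improves on `baseline` (lower is better)."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - measured) / baseline


# Hypothetical evaluation set of five tickets.
predicted = ["billing", "bug", "billing", "account", "bug"]
reviewed = ["billing", "bug", "account", "account", "bug"]

print(accuracy(predicted, reviewed))    # 0.8
print(relative_reduction(240.0, 30.0))  # 0.875
```

In this made-up run, accuracy of 0.8 would fall short of an 85% target even though handling time dropped by 87.5%, which is exactly the kind of mixed result a structured evaluation is meant to surface.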

4. Begin Building the Demo Experience

As iteration continues, Week 3 is also the time to shape the executive demo. A strong POC demo tells a clear story—what problem it solves, how the workflow works, and how results improved—through a simple, intuitive interface. Even if the prototype is still rough behind the scenes, the experience should be easy for leaders to grasp.

By the end of Week 3, your POC should feel real: grounded in real data, validated by real improvements, and packaged in a way that leadership can quickly understand.

Week 4: Validate, Document, and Present the Results

Week 4 is where the POC transforms from a working prototype into a business decision. The goal is no longer to improve the model—it’s to prove, with clarity and evidence, whether the solution is ready to become a full pilot or should be paused. This week is all about validation, storytelling, and aligning leadership around the next step.

1. Validate the Solution Thoroughly

Before making recommendations, the prototype needs to be validated under realistic conditions. This means stress-testing it with higher volumes, running cross-team reviews, and checking edge cases that may not have appeared earlier. It’s also the time to confirm governance basics - compliant data handling, controlled access, and protection of sensitive information.

2. Prepare a Clear, Compelling Business Case

Once validated, Week 4 shifts to documenting impact in business terms—time saved, accuracy improved, cost avoided, and risks reduced. It also defines what’s needed to move from POC to production: resources, timelines, and expected ROI. A strong business case isn’t technical—it’s an investment pitch that clearly shows value, highlights remaining gaps, and equips leadership to make a confident decision.

3. Make a Clear Go / No-Go Decision

The final outcome of Week 4 is a decision—ideally a simple, confident one. By this point, the team should be able to answer four essential questions:

- Did we hit the KPI we defined in Week 1?

- Is the necessary data accessible and reliable long-term?

- Is the workflow viable beyond a prototype?

- Do stakeholders support moving forward?

If the answer to most of these is “yes,” the POC graduates into a pilot. If not, the outcome is still valuable: the organization avoids wasted investment and gains clarity on what should be improved, postponed, or re-scoped.
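
The decision rule above can be written down explicitly so that it is agreed on before the results arrive. In the sketch below, the `PocReview` class and its field names are illustrative, and reading "most" as at least three of the four questions is an assumption; teams may prefer a stricter rule, such as requiring the KPI to be met in every case.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class PocReview:
    """Answers to the four Week 4 questions (field names are illustrative)."""
    kpi_met: bool
    data_reliable_long_term: bool
    workflow_viable: bool
    stakeholders_support: bool


def decide(review: PocReview, min_yes: int = 3) -> str:
    """Return "go" when at least `min_yes` answers are yes (assumed threshold)."""
    yes_count = sum(getattr(review, f.name) for f in fields(review))
    return "go" if yes_count >= min_yes else "no-go"


print(decide(PocReview(True, True, True, False)))   # go
print(decide(PocReview(True, False, False, True)))  # no-go
```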

Common Reasons AI POCs Fail

AI POCs rarely fail because of the technology. They fail because the surrounding strategy, scoping, and workflow decisions prevent the prototype from ever having a fair chance. Most breakdowns are avoidable—and they tend to follow the same predictable patterns.

Scope Creep

The fastest way to derail a POC is letting the scope expand beyond the original plan. What should be a focused 30-day experiment quickly turns into a mini product build as new features, workflows, and “nice-to-haves” get added.

To avoid this, define the scope on day one—and defend it. Freeze requirements, push new ideas into a “later” list, and keep every conversation anchored in the one problem and one KPI you committed to.

No Access to Real Data

A POC built on sanitized or overly curated data might look great in demos, but it won’t survive real-world conditions. Without actual documents, tickets, queries, and messy edge cases, the prototype can’t prove whether it truly works.

To avoid this, secure access to real operational data from day one. Prioritize representative samples over perfectly clean ones, involve data owners early, and make sure the dataset reflects the complexity of the workflow you’re trying to improve.

Too Many Stakeholders

A POC derails quickly when every department and every leader needs to weigh in. What starts as a fast validation turns into endless debates, shifting expectations, and unclear ownership—and progress grinds to a halt.

To avoid this, establish a small, empowered core team with a clear executive sponsor. Give them decision rights, streamline approvals, and keep conversations focused on the outcomes—not on internal politics.

Lack of SME Involvement

A POC falls apart the moment it’s evaluated without the people who actually understand the work. When subject-matter experts aren’t involved, the prototype may look impressive on the surface but fail under the realities of day-to-day operations.

To avoid this, bring SMEs into the process from day one—and keep them involved. Have them review outputs, flag inaccuracies, validate assumptions, and define what “good enough” really means. A POC grounded in SME feedback stays aligned with real workflows, not guesses.

Building for Perfection Instead of Validation

Some teams approach a POC as if it were a full product—adding integrations, refining UX, optimizing pipelines, and fixing every edge case. What should be a fast feasibility test turns into months of engineering.

To avoid this, keep the POC intentionally lightweight. Resist the urge to polish. Skip non-essential integrations. Focus on proving the core idea works, not building something production-ready. A POC should be scrappy, fast, and ruthlessly simplified.

No Clear Business KPI

A POC without a measurable KPI is guaranteed to produce vague, inconclusive results. Instead of proving value, the team ends up debating whether the outputs “look good” or “seem promising.” Leadership can’t make decisions based on impressions or intuition.

To avoid this, define one or two KPIs on day one and tie every decision to them. Make success measurable, visible, and binary. When everyone is aligned on the same metrics, the POC stops being a guess and starts becoming evidence leadership can act on.

Jumping Into Tooling Before Understanding the Problem

It’s easy to get excited about models and frameworks and start with the technology instead of the need. But when a POC begins with “let’s try Claude” or “let’s build something with RAG,” it drifts into experimentation for its own sake.

To avoid this, start with the business pain, not the platform. Define the problem, the workflow, and the KPI first. Only then choose the tooling that serves that goal. Technology should follow the need—not lead it.

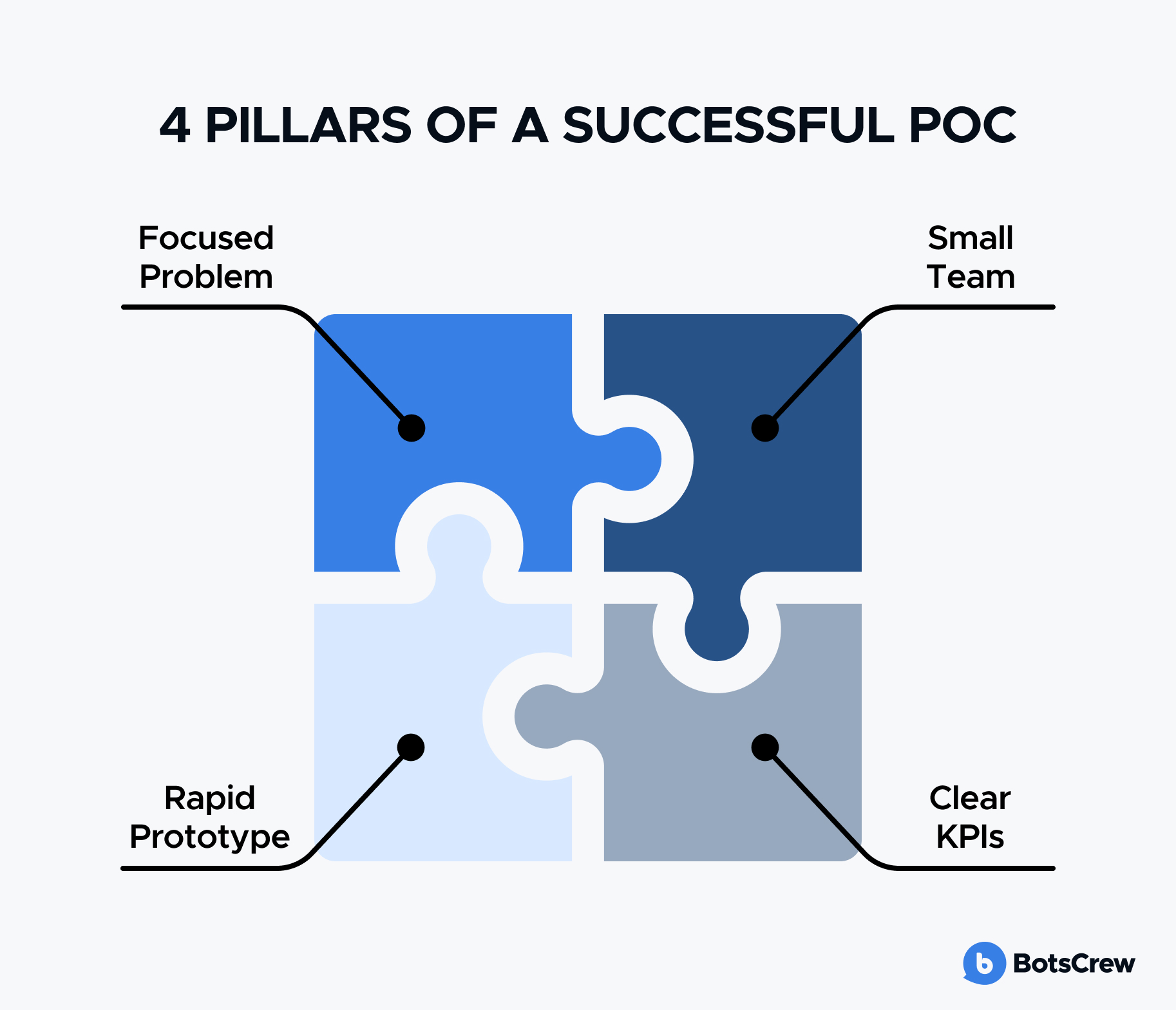

Final Thoughts

A successful AI POC doesn’t require a large team, months of planning, or heavy investment. It requires:

- a focused business problem,

- a small cross-functional team,

- rapid prototyping, and

- clear KPIs tied to measurable value.

Enterprises that follow this structure can move from AI curiosity to meaningful impact in just 30 days.

If you’re considering a POC, this framework offers a safe, fast way to validate AI before scaling across teams and systems.

Ready to Validate Your AI Idea in 30 Days?

A well-executed POC can save months of uncertainty, uncover real ROI, and de-risk your AI investment. If you want expert guidance, faster execution, or help aligning your stakeholders around a clear AI roadmap, BotsCrew can support you every step of the way.

BotsCrew AI Consulting Services

BotsCrew helps enterprises identify the right use case, design a strong POC plan, and build a working solution integrated with their ecosystem.

Our consulting team brings deep experience across strategy, architecture, and applied AI - giving you clarity, structure, and measurable outcomes.

We specialize in:

- AI Strategy & Readiness Assessments

- AI POC & Pilot Development

- AI Workflow Automation & AI Agents

- LLM Evaluation, RAG Design & Model Quality Frameworks

- Secure, Enterprise-Grade Architecture & Cloud Implementation

Whether you’re validating your first AI use case or testing a high-stakes initiative, we bring the frameworks, expertise, and execution power to help you prove value fast—and scale responsibly.