Bias in AI: What It Is, Why It Happens & How to Reduce It

AI comes with an uncomfortable reality: bias woven into its very logic. And when deployed at scale, bias in AI ripples into how people think, act, and make decisions.

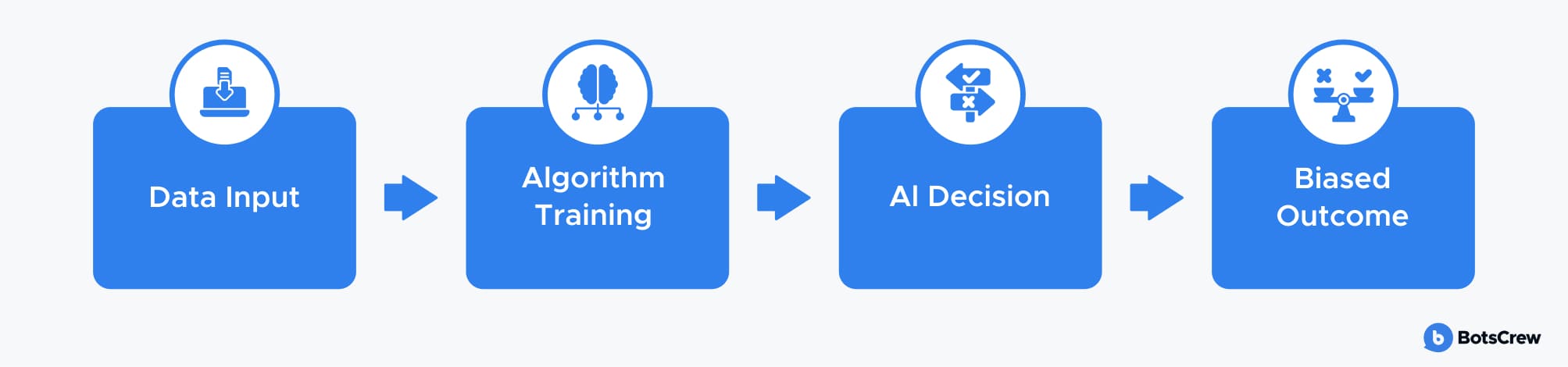

AI doesn't emerge in a vacuum. It's designed by people and trained on data, both shaped by human beliefs, assumptions, and priorities. The choices engineers make, from model objectives to the datasets they curate, inevitably influence how these systems behave. Feed an algorithm skewed or incomplete data, and it can mirror those biases at scale, producing distorted insights, flawed predictions, or repeated versions of human error.

In this article, we take a closer look at the hidden forces that shape bias in AI and offer a roadmap for leaders who want to deploy intelligent systems without compromising accuracy or trust.

Bias in AI Explained

What is bias in AI? Bias in AI is what happens when algorithms start echoing the blind spots of the humans and data that shaped them. Instead of delivering clean, objective insights, the system leans — sometimes subtly, sometimes dramatically — toward certain outcomes or interpretations.

It often starts innocently: a model is trained on data that's incomplete, imbalanced, or shaped by decades of human decisions. The AI absorbs those patterns as if they are universal truths. From there, the system can unintentionally favor one group over another, mislabel information, or make decisions that replicate the same old human mistakes.

Consider a healthcare system implementing an AI tool to identify patients who require urgent follow-up. The model is trained on years of operational data shaped by historic access disparities. Patients from higher-income areas had traditionally received more screenings, so the system learned to treat those cases as higher priority.

When the AI goes live, it mirrors that pattern. A patient from an affluent neighborhood with moderate symptoms is flagged immediately, while another patient with the same clinical profile from a lower-income area is not.

Where Does AI Bias Originate?

Bias in AI grows quietly inside the very systems leaders depend on to automate decisions, streamline operations, and shape customer experiences. It's embedded in the data we collect, the models we design, and the human judgment we assume is objective.

By the time an AI system produces a biased decision, the damage is already done: a customer is denied a loan, a candidate is filtered out, a community is over-policed, or a user is misrepresented. And because AI operates at scale, even a small bias can become a massive, automated inequality engine.

The core problem comes from several places — and they often reinforce one another:

1. Data Bias

The biggest driver of bias in AI is the data you feed it. And data brings all the world's imperfections with it. When your data is biased, your AI will be too. When the past was unfair, your AI becomes unfair.

Where the problem starts:

➖ Sampling bias: Your training data doesn't represent your real customers or employees — some groups show up too much, others barely at all. The AI learns to optimize for whoever is “seen” the most.

➖ Historical bias: AI learns from yesterday. If yesterday was biased — like decades of hiring mostly men or granting loans mainly to higher-income ZIP codes — the system “locks in” those patterns.

➖ Labeling bias: Human annotators bring human opinions. Their stereotypes, errors, assumptions, and cultural blind spots get embedded as “truth” inside the model.

The real-world business risk: A hiring AI trained on past resumes might quietly learn that “leadership” = “male,” and start downgrading equally qualified women. You don't see the bias until it's already hurting talent pipelines, diversity goals, and brand trust.

2. Bias in AI Algorithms

Even if your data is reasonably balanced, the algorithm itself can create blind spots. The way engineers define success, select features, or tune performance can tip the system toward inequitable outcomes.

Where the problem starts:

➖ Feature selection bias: What variables are included — or excluded — can unintentionally favor certain groups or contexts.

➖ Objective function bias: If you optimize for accuracy alone, the system may sacrifice fairness, especially for small or underrepresented groups.

➖ Model complexity bias: Algorithms tend to cling to dominant patterns. Underrepresented groups in the data get “washed out,” so the model makes more errors for them.

The real-world business risk: A loan model might rank applicants from wealthier neighborhoods as safer borrowers — not because they are more creditworthy, but because the algorithm weights those patterns more heavily. You think the model is objective. In reality, it's reflecting structural inequities.
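To make the objective-function point concrete, here is a minimal sketch of how a strong overall accuracy score can hide a complete failure on a small group. The data, group names, and the `accuracy_by_group` helper are hypothetical and exist only for illustration.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return the share of correct predictions for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


# Hypothetical data: 95 majority-group cases and 5 minority-group cases.
groups = ["majority"] * 95 + ["minority"] * 5
y_true = [1] * 50 + [0] * 45 + [1] * 5
# Assumed model behavior: right on every majority case, wrong on every minority case.
y_pred = [1] * 50 + [0] * 45 + [0] * 5

overall = sum(int(t == p) for t, p in zip(y_true, y_pred)) / len(y_true)
per_group = accuracy_by_group(y_true, y_pred, groups)

print(f"Overall accuracy: {overall:.2f}")
for group, acc in per_group.items():
    print(f"{group}: {acc:.2f}")
```

In this made-up example the headline number is 95%, yet every minority-group case is misclassified. That is why reporting accuracy per group, not just in aggregate, is a common first check.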

3. Human Decision Bias

AI doesn't eliminate human bias — it scales it. Every person who touches the system leaves fingerprints: developers, data scientists, domain experts, product managers, and decision-makers.

Where the problem starts:

➖ Confirmation bias: Teams choose datasets that support the results they expect to see.

➖ Cultural bias: Systems reflect the worldview of the people who built them — which might not match the diversity of your customers or workforce.

➖ Operational bias: Subjective decisions about model thresholds, validation, and feedback loops determine what the AI treats as “acceptable.”

The real-world business risk: A facial recognition team made up of one demographic group might think their model is performing well — only to discover later that error rates double or triple for other ethnicities, exposing the organization to reputational and legal risk.

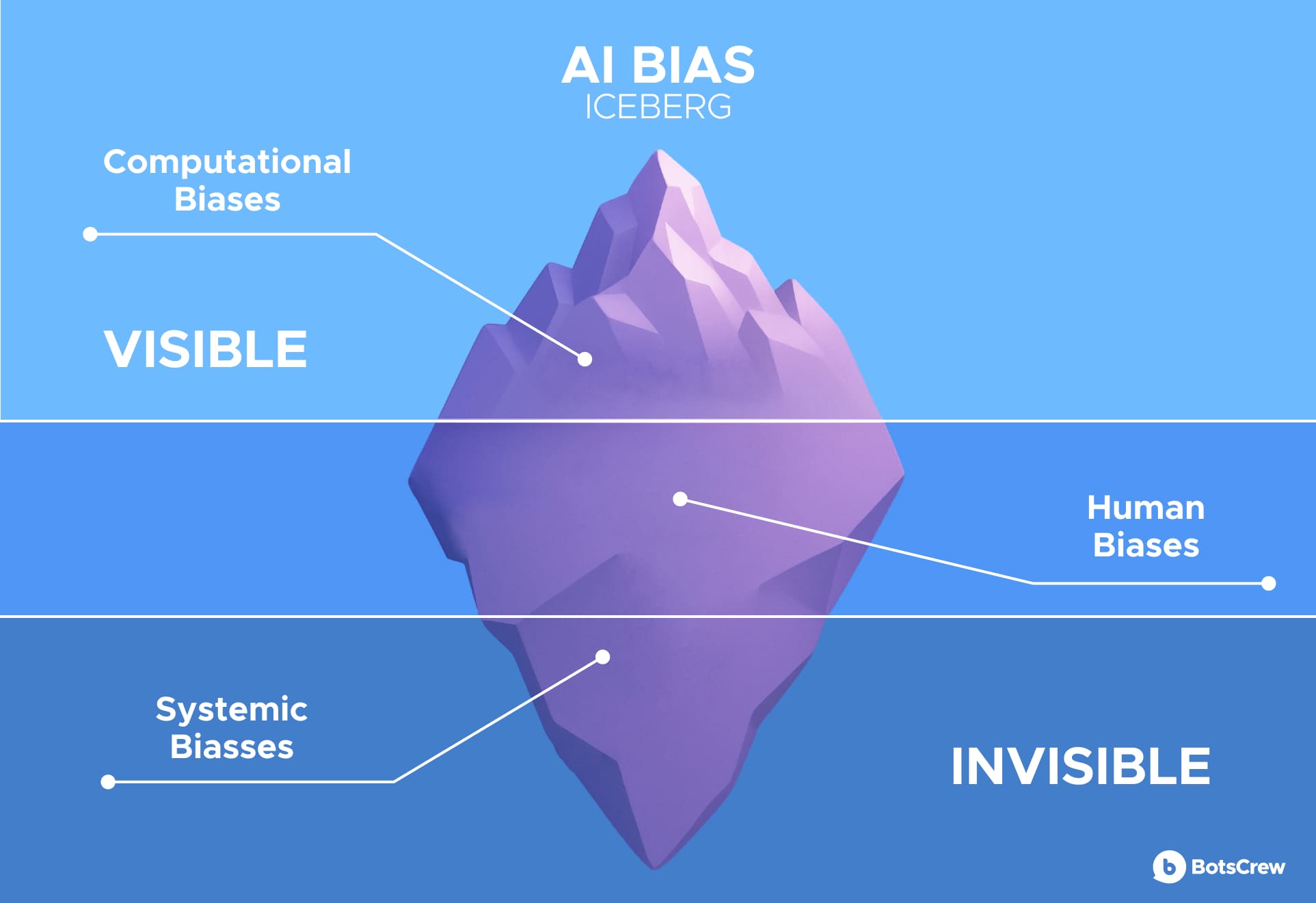

Bias in AI is often framed as a purely technical issue, but the NIST report highlights that much of it actually originates from human prejudices and entrenched systemic or institutional biases. Credit: N. Hanacek/NIST

Case Study: Amazon's AI Recruiting Model

Amazon's AI recruiting tool, trained on resumes from past hires (mostly male engineers), unintentionally discriminated against women. Resumes containing the word “women's” (e.g., “women's chess club captain”) were downgraded because the system favored patterns seen in male-dominated data.

Key Lessons Learned:

✅ Data Diversity Matters: AI training data must reflect the real-world workforce. Curate datasets to include underrepresented groups and balance representation.

✅ Audit Algorithms: Regularly review AI models for bias using fairness metrics and human evaluation.

✅ Transparency Is Critical: Clear disclosure of AI design and decision-making helps spot biases early and builds trust.

✅ Human Oversight Is Essential: AI should assist, not replace, human judgment. Recruiters should review AI recommendations so that evaluations remain fair and nuanced.

Don't let bias shape your decisions, your people's experiences, or your reputation. Book a free 30-minute consultation to discover how we can help you audit and improve your datasets, design fairness-aware AI systems, implement continuous monitoring and oversight, and embed ethical AI practices across your company.

Types of Bias in AI With Examples

Behind every unfair loan denial, every CV that never surfaces, every misdiagnosis, there's a pattern: a specific kind of bias quietly shaping an AI system's decisions. Below, we break down the major categories of bias in AI, with examples that make them impossible to ignore.

🩸 Violence Bias

Models trained on violent or aggressive content can normalize or reproduce harmful language, potentially amplifying hostility in real-world interactions. For instance, a poorly moderated language model exposed to inflammatory text might generate outputs that encourage aggression, perpetuate harassment, or escalate conflicts in digital spaces. In customer service, social media, or chatbot applications, this can lead to reputational risk, legal exposure, and erosion of trust.

⚖️ Controversial Topic Bias

AI trained on polarizing datasets may take extreme or one-sided stances. For example, a model exposed to datasets dominated by a single perspective on gun rights, climate change, or social policy may generate content that misrepresents alternative viewpoints or reinforces ideological echo chambers. This bias can influence public opinion, shape internal decision-making, or even affect regulatory compliance if unchecked.

👩💻👨💻 Gender Bias in AI

One of the most recognizable forms of bias, gender bias occurs when AI models reinforce outdated stereotypes. Classic examples include associating “nurse” with women and “engineer” with men.

Beyond job titles, this can influence hiring algorithms, career guidance tools, and generative content. When left unaddressed, gender bias can reinforce inequality in the workplace, harm brand perception, and limit opportunities for underrepresented groups.

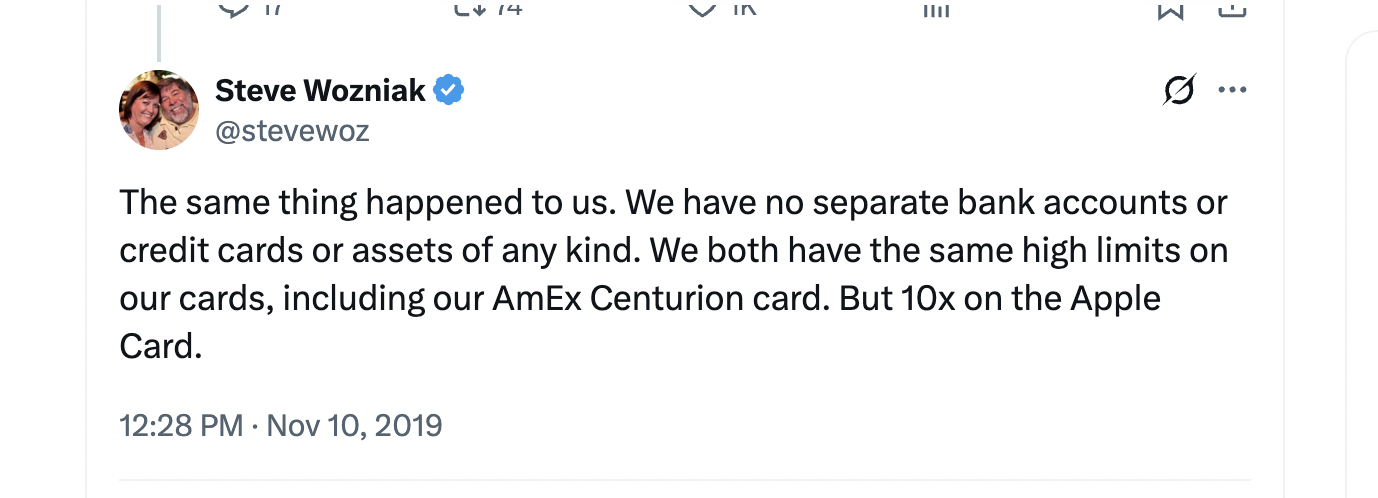

Apple's credit card, managed by Goldman Sachs, came under fire for offering women significantly lower credit limits than men, even when women had higher credit scores and incomes.

Tech entrepreneur David Heinemeier Hansson reported receiving a credit limit 20 times higher than his wife's, despite her stronger financial profile. Similarly, Apple co-founder Steve Wozniak received a limit ten times greater than his wife's, highlighting how AI can unintentionally reinforce gender bias in financial decisions.

✊🏽✊🏻 Racial & Ethnic Bias

Generative AI models and predictive systems often fail to represent racial and ethnic diversity accurately. For instance, when asked to depict “CEOs” or “leaders,” a model may overwhelmingly produce images of individuals from a single racial group, ignoring the true diversity in corporate leadership. In policing, finance, or healthcare applications, such biases can result in discriminatory outcomes, misrepresentation, and legal or ethical violations.

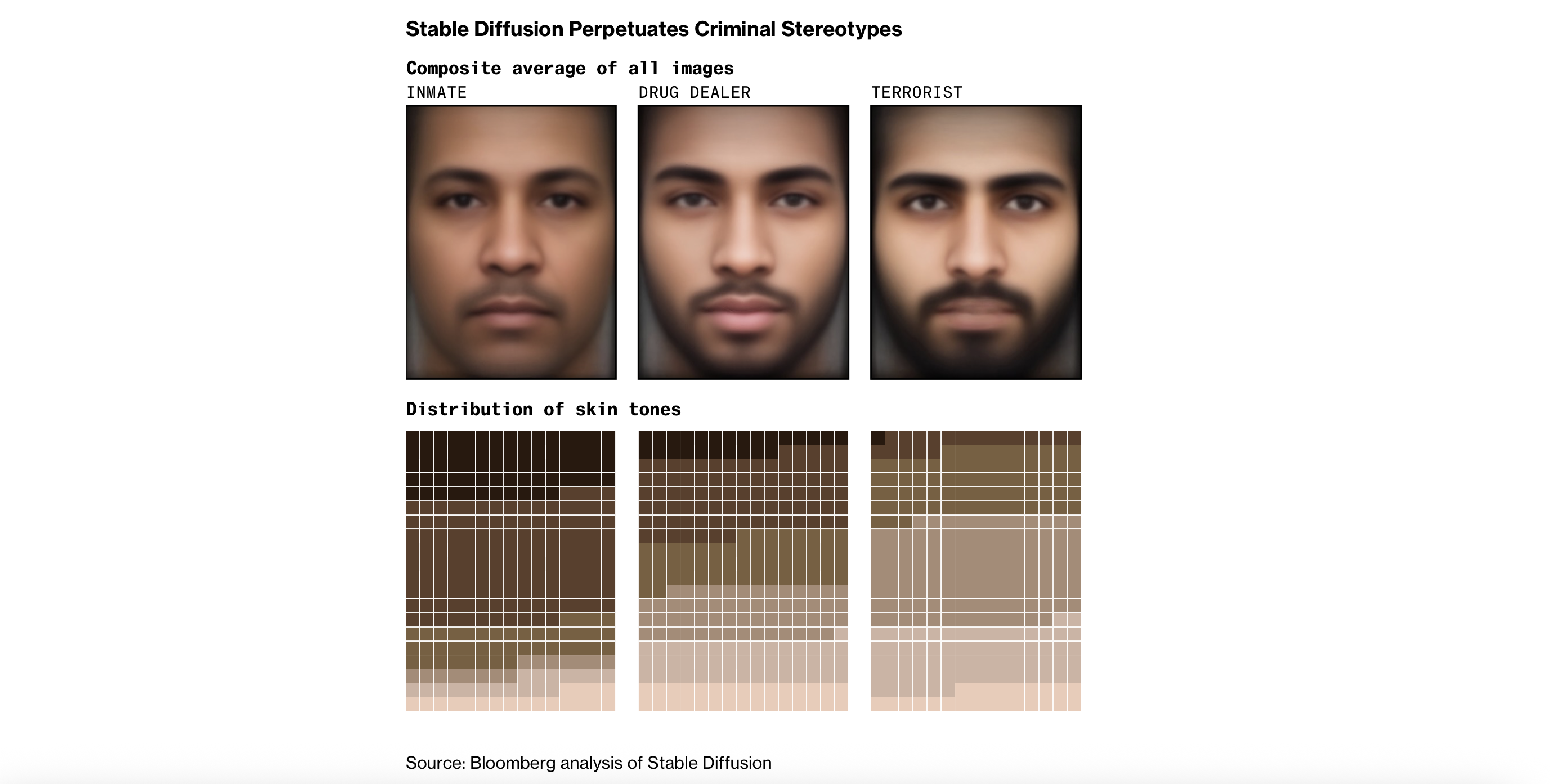

Bloomberg used Stable Diffusion to generate images for the terms “inmate,” “drug dealer,” and “terrorist.” Over 80% of the images labeled “inmate” depicted people with darker skin, despite people of color comprising less than half of the U.S. prison population, according to the Federal Bureau of Prisons.

However, the disproportionate output also reflects a harsh reality: Black Americans are incarcerated in state prisons at nearly five times the rate of White Americans, after accounting for population differences, according to the Sentencing Project.

A 2025 Cedars-Sinai study found that leading large language models — including Claude, ChatGPT, Gemini, and NewMes-15 — tend to provide less effective psychiatric treatment recommendations for African American patients. While diagnoses were largely unbiased, treatment plans showed clear disparities, with NewMes-15 performing worst and Gemini best. These findings highlight the urgent need for bias evaluation and oversight in AI-driven medical tools.

👵👴 Age Bias

AI systems can unintentionally exclude older adults by assuming they are less engaged with technology. This bias may appear in content recommendations, product personalization, or digital services, effectively silencing or neglecting a significant demographic. Age bias can reduce market reach, skew analytics, and contribute to generational inequities in service delivery.

A ProPublica investigation revealed Facebook's targeted job ads allowed employers to exclude older workers, limiting visibility to users under 40. This practice blocked older adults from job opportunities and raised legal concerns under the Age Discrimination in Employment Act (ADEA), with companies like Verizon and Amazon facing scrutiny.

✝️☪️✡️ Religious Bias

Models can overrepresent or favor one religion while misrepresenting or ignoring others. In recommendation engines, text generation, or image creation, this bias can distort information, propagate stereotypes, or alienate user groups. For businesses operating in diverse markets, religious bias can pose reputational risks and undermine inclusivity goals.

♿ Disability Bias

AI that fails to consider accessibility needs can marginalize users with disabilities. For example, a health-advice AI might omit adaptive workout suggestions or generate recommendations unsuitable for individuals with mobility limitations. Disability bias not only limits the utility of a system but also risks non-compliance with accessibility laws and ethical standards.

How Is AI Bias Measured?

To mitigate bias, organizations need to know how their models behave across different groups — whether based on race, gender, or socioeconomic status — and ensure that predictions are equitable.

A truly fair AI model performs consistently, giving similar accuracy and opportunities to all groups. To track and improve fairness, data scientists rely on quantitative metrics that compare outcomes between historically privileged and non-privileged groups. Here are three widely used measures:

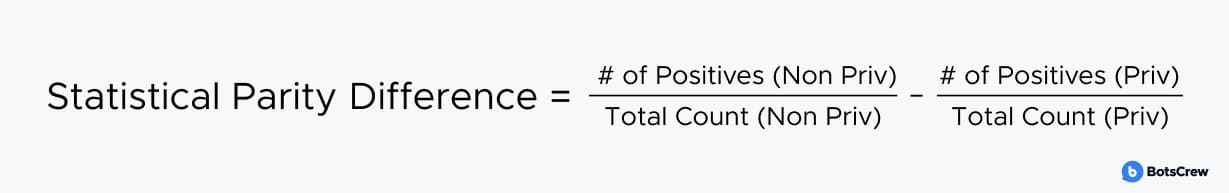

Statistical Parity Difference. This metric compares the rate of favorable outcomes between groups by taking the difference between their selection rates. It shows whether a model's predictions are independent of sensitive attributes, with equal selection rates as the target. For instance, in hiring, it checks whether men and women have an equal chance of being shortlisted.
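As a rough illustration of the metric above, the sketch below computes it in plain Python. The sample data and function names are hypothetical, and the sign convention (unprivileged group's rate minus privileged group's rate) is an assumption. Libraries may define the sign or aggregation differently.

```python
def selection_rate(predictions):
    """Share of favorable outcomes (1 = favorable, 0 = unfavorable)."""
    return sum(predictions) / len(predictions)


def statistical_parity_difference(predictions, groups, unprivileged, privileged):
    """Selection rate of the unprivileged group minus that of the privileged group."""
    by_group = {unprivileged: [], privileged: []}
    for pred, group in zip(predictions, groups):
        if group in by_group:
            by_group[group].append(pred)
    return selection_rate(by_group[unprivileged]) - selection_rate(by_group[privileged])


# Hypothetical shortlisting decisions: 1 = shortlisted, 0 = rejected.
predictions = [1, 0, 1, 1, 0, 1, 0, 0, 1, 0]
groups = ["m", "m", "m", "m", "m", "w", "w", "w", "w", "w"]

spd = statistical_parity_difference(predictions, groups, unprivileged="w", privileged="m")
print(f"Statistical parity difference: {spd:+.2f}")  # prints -0.20
```

Here women are shortlisted at a rate of 0.4 and men at 0.6, so the difference is -0.2. A value of zero would mean both groups are shortlisted at the same rate.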

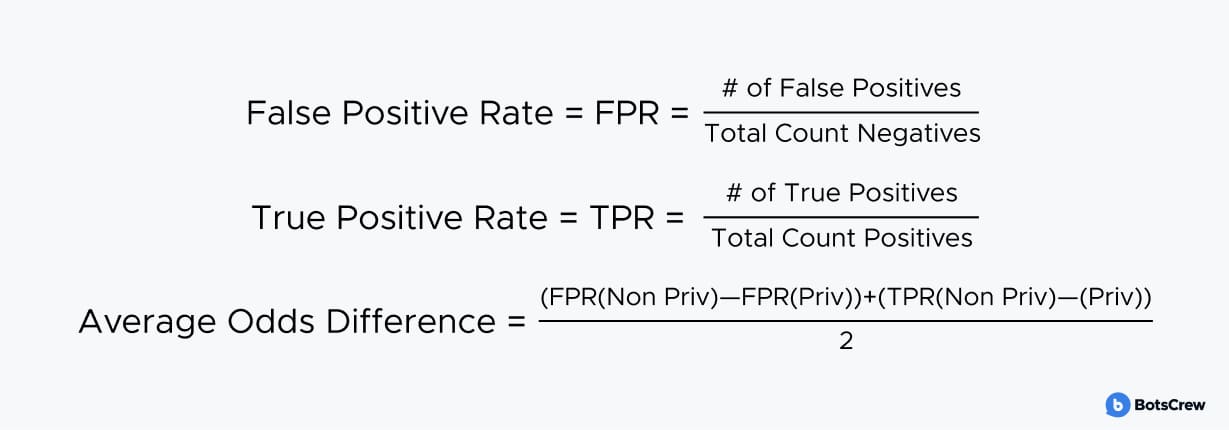

Average Odds Difference. A stricter metric, this averages the between-group gaps in false positive rate and true positive rate. It checks whether errors, both over- and under-selection, are distributed fairly. This is critical in high-stakes areas like criminal justice, where unequal error rates can have serious consequences.
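A minimal sketch of this calculation follows, assuming the common definition: the average of the false positive rate gap and the true positive rate gap, each taken as unprivileged minus privileged. The labels, predictions, and helper names are hypothetical.

```python
def rates(y_true, y_pred):
    """Return (true positive rate, false positive rate) for one group.

    Assumes the group contains at least one actual positive and one actual negative.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp / (tp + fn), fp / (fp + tn)


def average_odds_difference(unprivileged, privileged):
    """Mean of the FPR gap and the TPR gap (unprivileged minus privileged)."""
    tpr_u, fpr_u = rates(*unprivileged)
    tpr_p, fpr_p = rates(*privileged)
    return 0.5 * ((fpr_u - fpr_p) + (tpr_u - tpr_p))


# Hypothetical (ground truth, prediction) lists for each group.
privileged = ([1, 1, 1, 1, 0, 0, 0, 0], [1, 1, 1, 0, 1, 0, 0, 0])
unprivileged = ([1, 1, 1, 1, 0, 0, 0, 0], [1, 1, 0, 0, 0, 0, 0, 0])

aod = average_odds_difference(unprivileged, privileged)
print(f"Average odds difference: {aod:+.2f}")  # prints -0.25
```

In this example the privileged group has a true positive rate of 0.75 and a false positive rate of 0.25, while the unprivileged group has 0.50 and 0.00. Both gaps are -0.25, so their average is -0.25.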

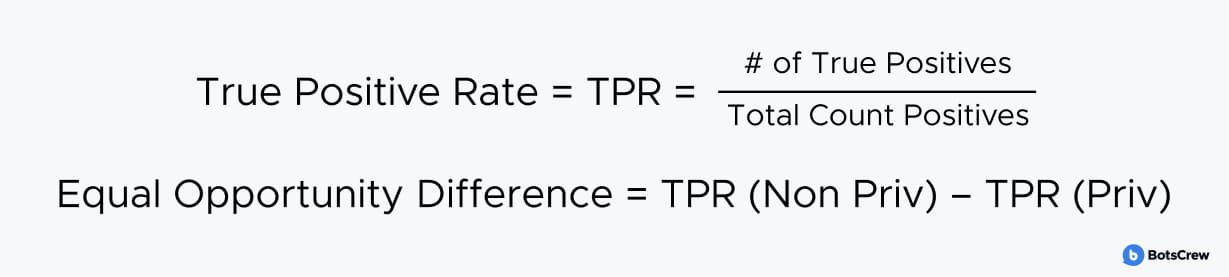

Equal Opportunity Difference. This is the between-group difference in true positive rates, which shows whether qualified candidates from all groups have an equal chance of being selected. While it does not account for false positives, it is useful in contexts where providing opportunities to the right people is the priority.
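The sketch below shows one way to compute this metric, again assuming an unprivileged-minus-privileged sign convention. The data and function names are hypothetical. Note that the false positive in the privileged group does not affect the result.

```python
def true_positive_rate(y_true, y_pred):
    """Share of truly qualified cases (y_true == 1) that the model selected."""
    selected = [pred for truth, pred in zip(y_true, y_pred) if truth == 1]
    return sum(selected) / len(selected)


def equal_opportunity_difference(unprivileged, privileged):
    """True positive rate of the unprivileged group minus that of the privileged group."""
    return true_positive_rate(*unprivileged) - true_positive_rate(*privileged)


# Hypothetical (ground truth, prediction) lists: 1 = qualified / selected.
privileged = ([1, 1, 1, 1, 0, 0], [1, 1, 1, 1, 1, 0])    # all 4 qualified selected, plus 1 false positive
unprivileged = ([1, 1, 1, 1, 0, 0], [1, 1, 0, 0, 0, 0])  # only 2 of 4 qualified selected

eod = equal_opportunity_difference(unprivileged, privileged)
print(f"Equal opportunity difference: {eod:+.2f}")  # prints -0.50
```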

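Data scientists can calculate these metrics using tools like Microsoft's Fairlearn or IBM's AI Fairness 360. For each metric, a score of zero indicates parity between the groups on that measure, and the further the value is from zero, the larger the gap. A model can score zero on one metric and still show a gap on another, so it is worth tracking more than one.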

How to Keep AI Fair: AI Bias Mitigation Strategies, Practices, and Controls

Keeping AI fair requires a combination of thoughtful practices, rigorous controls, and continuous oversight to ensure artificial intelligence operates responsibly at scale. In this section, we'll explore the concrete steps and AI bias mitigation strategies leaders can take to prevent bias, protect people, and make AI a force for fairness, turning what could be a liability into a strategic advantage.

Start with Better Data

Skewed datasets carry the biases of history and human judgment; clean, representative data can prevent these biases from being baked into AI models in the first place.

Best practices:

- Audit datasets for demographic gaps or overrepresentation.

- Balance data to ensure underrepresented groups are fairly included.

- Remove historical biases wherever possible, e.g., discriminatory hiring or lending patterns.

Business impact: Ensures decisions — like hiring, lending, or marketing recommendations — don't unintentionally favor one group over another.
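As one possible starting point for the auditing and balancing practices above, the sketch below compares each group's share of a dataset against a reference share and derives per-record weights that give every group equal total influence. The group labels, the 50/50 reference shares, and both helper functions are assumptions made for illustration.

```python
from collections import Counter


def representation_gaps(groups, reference_shares):
    """Each group's share in the data minus its expected (reference) share."""
    counts = Counter(groups)
    n = len(groups)
    return {g: counts.get(g, 0) / n - share for g, share in reference_shares.items()}


def balancing_weights(groups):
    """Per-record weights so that every group carries the same total weight."""
    counts = Counter(groups)
    n = len(groups)
    n_groups = len(counts)
    return [n / (n_groups * counts[g]) for g in groups]


# Hypothetical training set: group "a" is heavily overrepresented.
groups = ["a"] * 80 + ["b"] * 20
reference = {"a": 0.5, "b": 0.5}  # assumed real-world shares

gaps = representation_gaps(groups, reference)
weights = balancing_weights(groups)

print(gaps)                     # "a" is about +0.3 over, "b" about -0.3 under
print(weights[0], weights[-1])  # 0.625 for "a" records, 2.5 for "b" records
```

Reweighting of this kind only addresses who is represented. It does not correct labels that already encode past discrimination, which usually needs separate review.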

Build Fairness Into the System

Fairness should be part of AI from the start, not an afterthought. This means embedding rules, constraints, and fairness-aware algorithms that guide decision-making, even when the input data is imperfect.

Best practices:

- Incorporate fairness constraints during model training.

- Test models for disparate impacts across demographics.

- Adjust weighting or decision thresholds to balance outcomes.

Business impact: Reduces the risk of biased decisions that could harm your employees, customers, or reputation.
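To illustrate the threshold-adjustment practice above, here is a minimal sketch that picks a separate score cutoff per group so that each group ends up with the same selection rate. The scores, group names, and target rate are hypothetical. This is one simple post-processing approach among many, and whether group-specific thresholds are appropriate or permitted depends on the domain and jurisdiction.

```python
def threshold_for_rate(scores, target_rate):
    """Lowest score still selected when the top `target_rate` share is picked."""
    k = max(1, round(target_rate * len(scores)))
    return sorted(scores, reverse=True)[k - 1]


def select(scores, threshold):
    """1 for every score at or above the threshold, else 0."""
    return [int(score >= threshold) for score in scores]


# Hypothetical model scores; group "b" is scored systematically lower.
scores_by_group = {
    "a": [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.05],
    "b": [0.6, 0.55, 0.5, 0.45, 0.4, 0.35, 0.3, 0.25, 0.2, 0.1],
}

# A single cutoff of 0.5 selects 5 of 10 in group "a" but only 3 of 10 in group "b".
single = {g: sum(select(s, 0.5)) / len(s) for g, s in scores_by_group.items()}

# Group-specific cutoffs chosen to select the top 30% of each group.
target = 0.3
thresholds = {g: threshold_for_rate(s, target) for g, s in scores_by_group.items()}
rates = {g: sum(select(s, thresholds[g])) / len(s) for g, s in scores_by_group.items()}

print(single)      # {'a': 0.5, 'b': 0.3}
print(thresholds)  # {'a': 0.7, 'b': 0.5}
print(rates)       # {'a': 0.3, 'b': 0.3}
```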

Audit AI Decisions Continuously

Even with clean data and fairness-aware design, AI can drift over time or produce unexpected outcomes. Continuous monitoring ensures small biases are caught before they escalate.

Best practices:

- Audit outputs regularly for bias indicators.

- Implement real-time monitoring for high-stakes decisions.

- Filter and flag offensive, harmful, or discriminatory content generated by AI.

Business impact: Maintains trust in AI-driven processes and prevents small issues from becoming large-scale problems.
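A continuous audit can start small. The sketch below keeps a rolling window of recent decisions per group and raises a flag when the gap in selection rates exceeds a tolerance. The `ParityMonitor` class, its default window size, and the 0.1 tolerance are all hypothetical choices, not recommended values.

```python
from collections import deque


class ParityMonitor:
    """Tracks recent decisions per group and flags large selection-rate gaps."""

    def __init__(self, window=100, tolerance=0.1, min_samples=5):
        self.window = window            # how many recent decisions to keep per group
        self.tolerance = tolerance      # largest acceptable gap in selection rate
        self.min_samples = min_samples  # ignore groups with too few decisions
        self.decisions = {}             # group -> deque of recent 0/1 outcomes

    def record(self, group, favorable):
        """Store one decision (truthy = favorable outcome) for a group."""
        history = self.decisions.setdefault(group, deque(maxlen=self.window))
        history.append(int(bool(favorable)))

    def gap(self):
        """Largest selection-rate difference between groups, or None if too little data."""
        rates = [
            sum(history) / len(history)
            for history in self.decisions.values()
            if len(history) >= self.min_samples
        ]
        if len(rates) < 2:
            return None
        return max(rates) - min(rates)

    def alert(self):
        """True when the current gap exceeds the tolerance."""
        current = self.gap()
        return current is not None and current > self.tolerance


# Hypothetical stream of decisions for two groups.
monitor = ParityMonitor(window=50, tolerance=0.1)
for outcome in [1, 1, 1, 0, 1, 1, 0, 1, 1, 1]:
    monitor.record("a", outcome)
for outcome in [1, 0, 0, 0, 1, 0, 0, 1, 0, 0]:
    monitor.record("b", outcome)

print(monitor.gap(), monitor.alert())
```

In this example group "a" receives favorable outcomes 80% of the time and group "b" 30% of the time, so the gap of roughly 0.5 exceeds the tolerance and the monitor raises an alert. In practice the alert would feed into whatever review process the team already uses.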

Increase Transparency and Accountability

Executives need visibility into how AI arrives at decisions. Understanding the “why” behind an algorithm's outputs is crucial for accountability and strategic decision-making.

Best practices:

- Provide explainable AI outputs for key stakeholders.

- Document decision-making processes and model logic.

- Audit AI systems regularly and report findings to leadership.

Business impact: Builds confidence with regulators, investors, and customers, while giving leadership the information needed to make strategic decisions.

Embed Bias Awareness Into Culture

Inclusive teams, diverse perspectives, and an ethical governance framework ensure fairness is a company-wide priority, not just a technical checkbox.

Best practices:

- Encourage diversity in AI development teams.

- Conduct inclusive testing with representative users.

- Create governance policies and accountability frameworks for AI use.

Business impact: Encourages ethical AI use across the company, strengthens corporate reputation, and supports sustainable growth.

Why BotsCrew? Building AI That's Fair, Inclusive, and Trustworthy

Other consultants deliver reports. We deliver real-world AI solutions — built to be fair, inclusive, and trustworthy. At BotsCrew, our legacy is turning enterprise AI ambitions into production-grade, bias-aware systems. Since 2016, we've partnered with global enterprises to go beyond recommendations, guiding senior executives step-by-step through strategic planning, roadmap alignment, and the successful implementation of AI initiatives that are not just high-performing but ethically sound.

AI Opportunity Assessments & Use Case Discovery

We dive deeply into your business, engaging executives and teams to uncover critical insights and risk points for bias. By asking thoughtful questions, analyzing data, and challenging assumptions, we identify AI use cases that are linked to business outcomes while remaining equitable.

Ethical AI Frameworks

BotsCrew goes beyond technical excellence by embedding Ethical AI principles into the development process. Our frameworks help organizations identify, mitigate, and prevent bias before it impacts decision-making. By aligning with global best practices, we ensure that AI systems meet rigorous standards for fairness, transparency, and accountability, turning AI into a tool that organizations can trust.

Integration Planning & Technical Architecture

We collaboratively design technical blueprints and integration plans to ensure that AI systems are built to prevent bias from the ground up, aligning with your business context and operational goals.

Custom AI Development for Real-World Impact

Every organization has unique needs, and BotsCrew specializes in custom AI model development. Whether it's chatbots or generative AI tools, our solutions are designed to be inclusive, fair, and bias-aware from the ground up. By tailoring AI to specific business contexts, we ensure that ethical considerations are fully integrated alongside performance objectives.

Quick-Win Pilots

Targeted pilots demonstrate immediate, bias-conscious value, allowing teams to iterate based on real-world feedback and validate fairness before scaling.

We don't just deliver AI. We deliver AI you can trust, giving organizations the confidence to make smarter, fairer, and more inclusive decisions at scale.

Transform AI from a Risk into a Strategic Advantage. Partner with BotsCrew to build AI that is fair, transparent, and aligned with your company's values.